If you missed my Day 3 Part 3 post, you can view it here.

Keynote: Building the Cloud for You

It was my final keynote at Oracle AI World, and I arrived early to secure my usual spot at the front.

Clay Magouyrk, Oracle’s newly appointed co-CEO (formerly President of the OCI business), took to the stage.

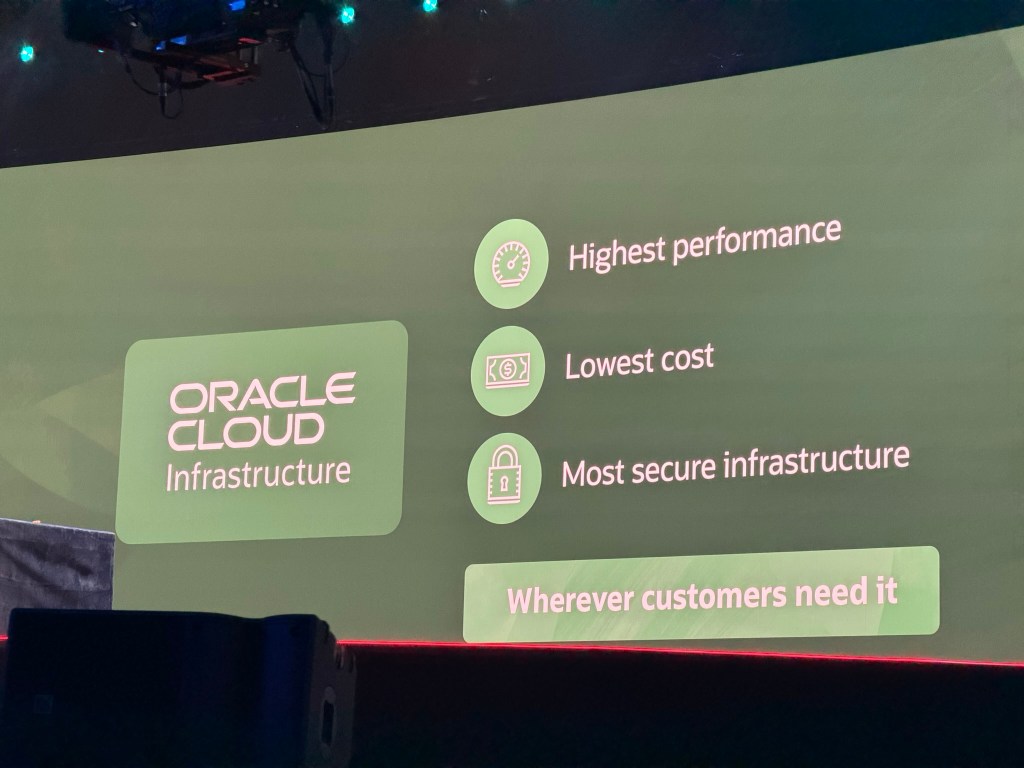

The OCI Mission and Foundational Principles

Clay explained that he joined Oracle to create the fourth major cloud provider by building Oracle Cloud Infrastructure (OCI). OCI was founded with a clear mission: to deliver the highest performance, lowest cost, and most secure infrastructure possible, wherever customers need it.

This is an absolute standard, not a relative benchmark against competitors. As Clay emphasised, “Our goal is not to be better than competitors. Our goal is to be the absolute best we can be.” This principle underpins OCI’s commitment to fundamental engineering and architectural purity.

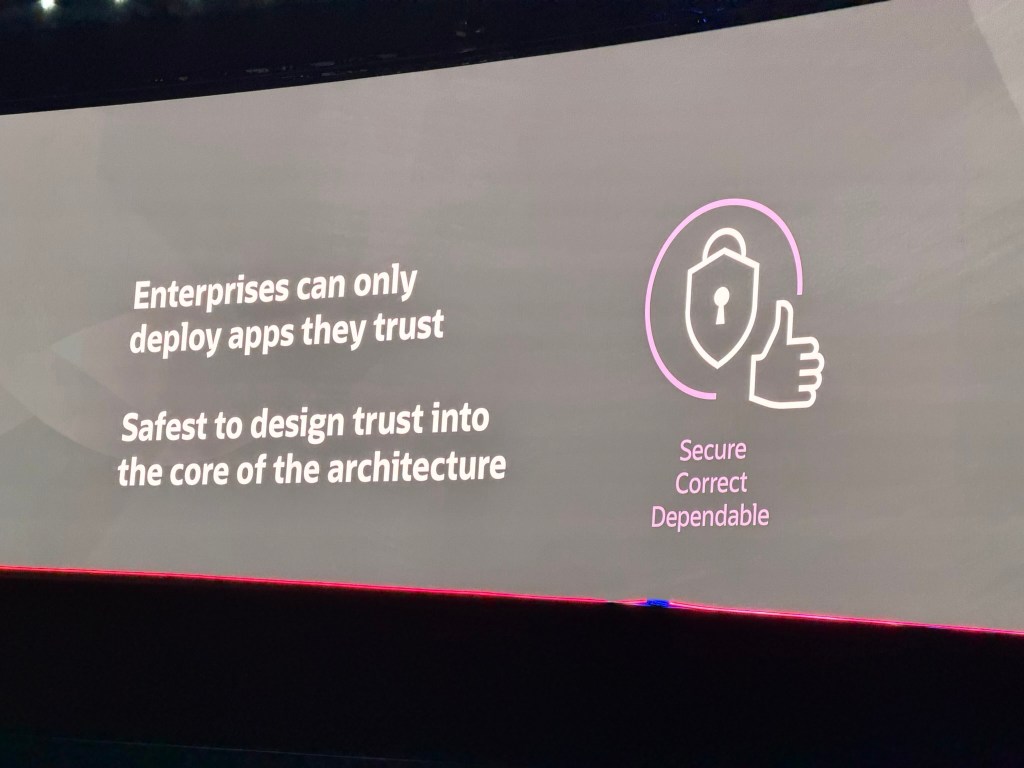

OCI adopts an architecture-first philosophy, prioritising the strength and extensibility of its foundational layers. Cloud infrastructure is seen as a series of interdependent layers, where the resilience of the entire stack depends on those beneath it. This approach demands an architecture that anticipates future requirements and accommodates unknown hardware and software advancements over time.

Core Architectural Decisions and Differentiators

OCI’s commitment to its mission is reflected in a series of key architectural decisions that distinguish it from other cloud providers.

Bare Metal First: OCI was designed with bare metal servers as first-class citizens, which forced the invention of off-box network and storage virtualisation. The key benefits are:

- Security: Oracle runs no software on the customer’s machine, giving the customer complete control

- Extensibility: OCI’s VM service is built directly on the bare metal service, enabling others to build platforms on top of it

- Flexibility: integrating diverse hardware, including GPUs and other accelerators, is simpler

RDMA Networking: Early and comprehensive support for Remote Direct Memory Access (RDMA) networks was driven by Oracle Database requirements (RAC, Exadata) 😎. OCI developed a secure method to dynamically hard-partition RDMA networks. The key benefits are:

- Performance: delivers the full benefits of RDMA for High Performance Computing (HPC), GPU clusters, and high-performance database workloads

- Security: provides the multi-tenant security expected of a fully virtualised cloud

Universal Service Availability: All OCI services and hardware types are available in all regions! This eliminates the model where service availability varies by location. The key benefit is simplicity: customers have a predictable and consistent experience regardless of region.

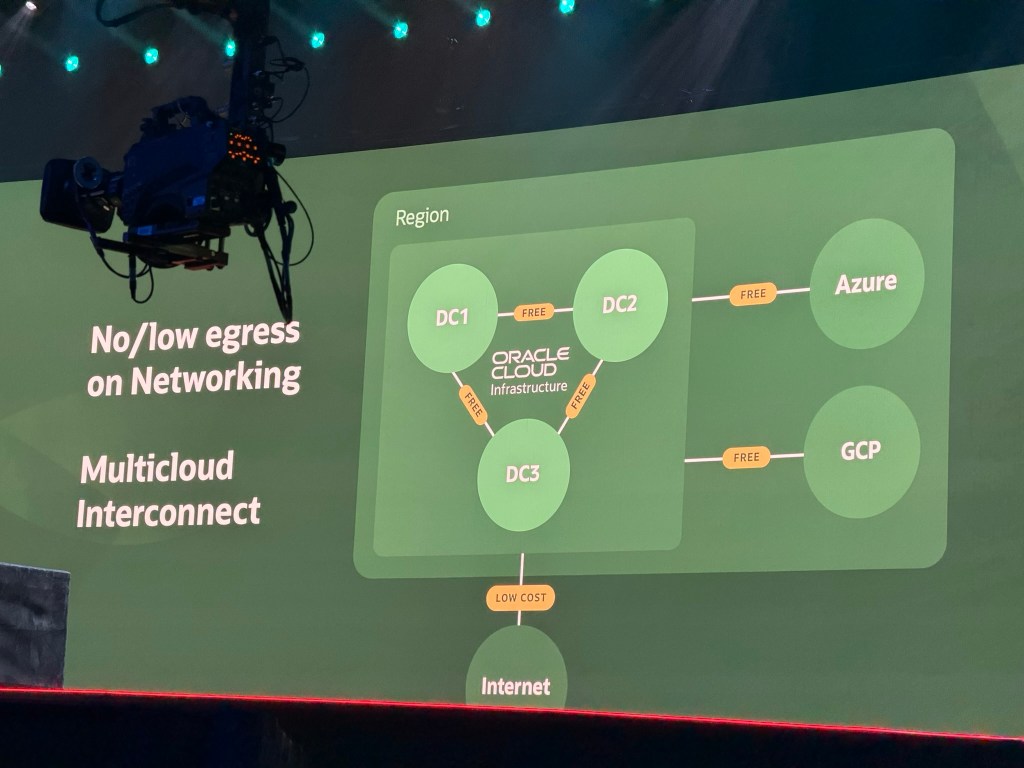

Simplified and Consistent Pricing: OCI uses a single, consistent price list across all regions, and this approach extends to network costs. The key benefits are:

- Predictability: customers can easily understand and forecast their costs without complex tools or calculators

- Cost savings: data transfer within a region is free, Internet egress fees are up to 10 times lower than competitors’, and multicloud interconnects with partners like Microsoft and Google have zero egress fees!

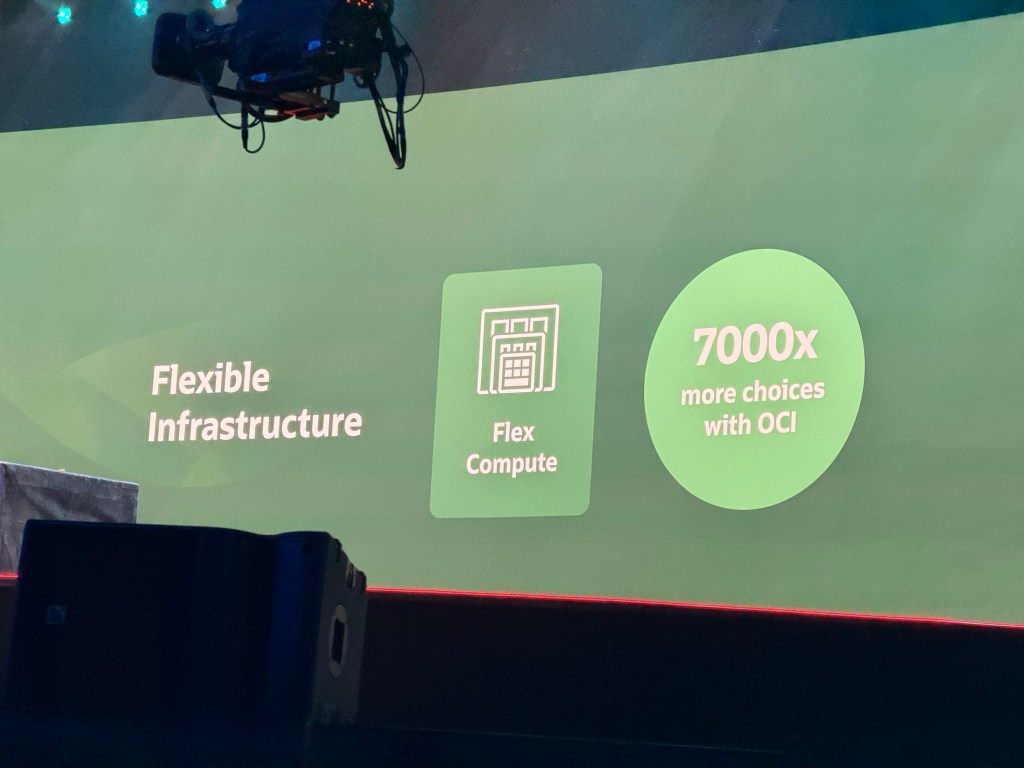

Flexible Infrastructure: Oracle designed its infrastructure building blocks for maximum flexibility. Customers can select exactly the number of cores and amount of memory they need. OCI offers 7,000 times more configuration options than competitors without added complexity, because every core and every gigabyte of RAM is priced consistently. Storage is equally simple: instead of multiple block storage types, OCI provides a single option whose performance can be tuned dynamically in real time. The same philosophy extends to VM availability. Oracle uses Ksplice for zero-downtime kernel upgrades and supports live migration for hardware maintenance without customer reboots, similar to VMware’s vMotion. These capabilities are also coming to Exascale for Exadata 😎.
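To illustrate why consistent per-unit pricing keeps thousands of shapes simple, here is a minimal Python sketch. It assumes a purely linear model in which a flexible VM costs cores × a per-core rate plus memory × a per-GB rate. The rates, the `FlexShape` class, and the function names are hypothetical placeholders for illustration, not Oracle list prices or an Oracle API.

```python
from dataclasses import dataclass

# Hypothetical placeholder rates per hour (NOT real OCI prices).
RATE_PER_CORE_HOUR = 0.025
RATE_PER_GB_HOUR = 0.0015


@dataclass(frozen=True)
class FlexShape:
    """A hypothetical flexible VM shape: any combination of cores and memory."""
    cores: int
    memory_gb: int


def hourly_cost(shape: FlexShape) -> float:
    """Linear pricing: each core and each GB costs the same, whatever the mix."""
    if shape.cores < 1 or shape.memory_gb < 1:
        raise ValueError("a shape needs at least 1 core and 1 GB of memory")
    return shape.cores * RATE_PER_CORE_HOUR + shape.memory_gb * RATE_PER_GB_HOUR


def monthly_cost(shape: FlexShape, hours: float = 730.0) -> float:
    """Forecast usage with one multiplication (730 h is roughly one month)."""
    return hourly_cost(shape) * hours


if __name__ == "__main__":
    for shape in (FlexShape(2, 16), FlexShape(6, 20), FlexShape(6, 90)):
        print(shape, f"${hourly_cost(shape):.4f}/h", f"${monthly_cost(shape):.2f}/month")
```

Because the model is linear, doubling both cores and memory exactly doubles the price, so an unusual shape is as easy to forecast as a standard one.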

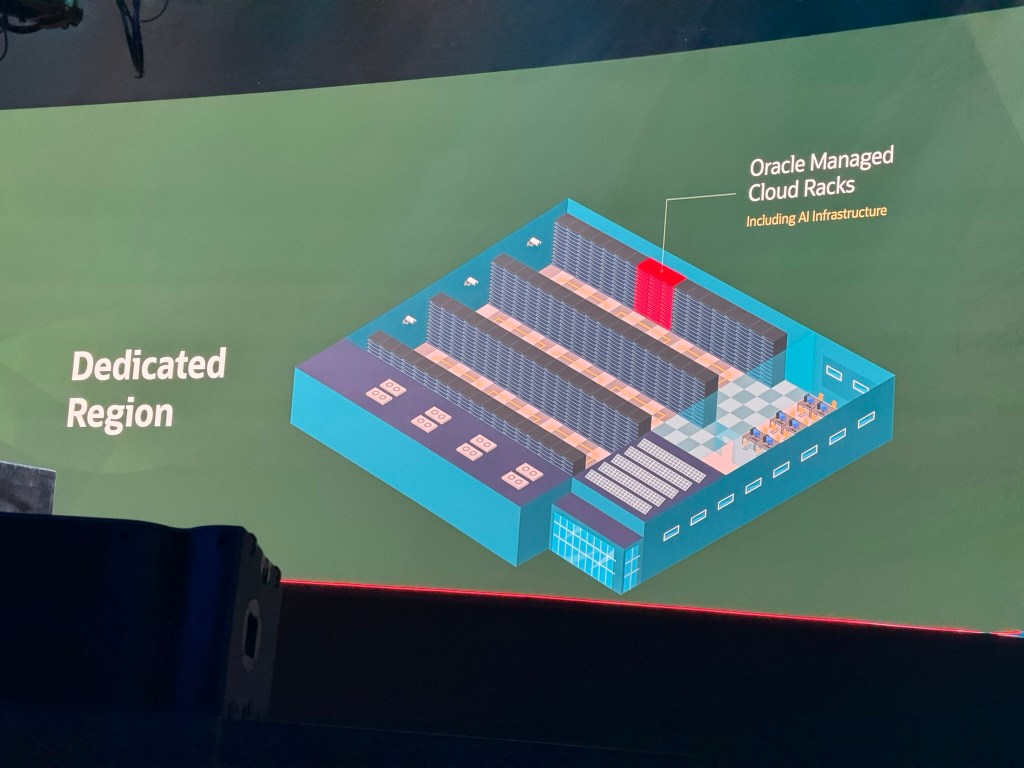

Scalability (Up and Down): The architecture was designed to scale down as effectively as it scales up. This, combined with scalable operations, enables novel deployment models such as:

- Dedicated Regions: the ability to scale down makes it feasible to provide dedicated cloud regions for individual customers in their own data centres with only three racks.

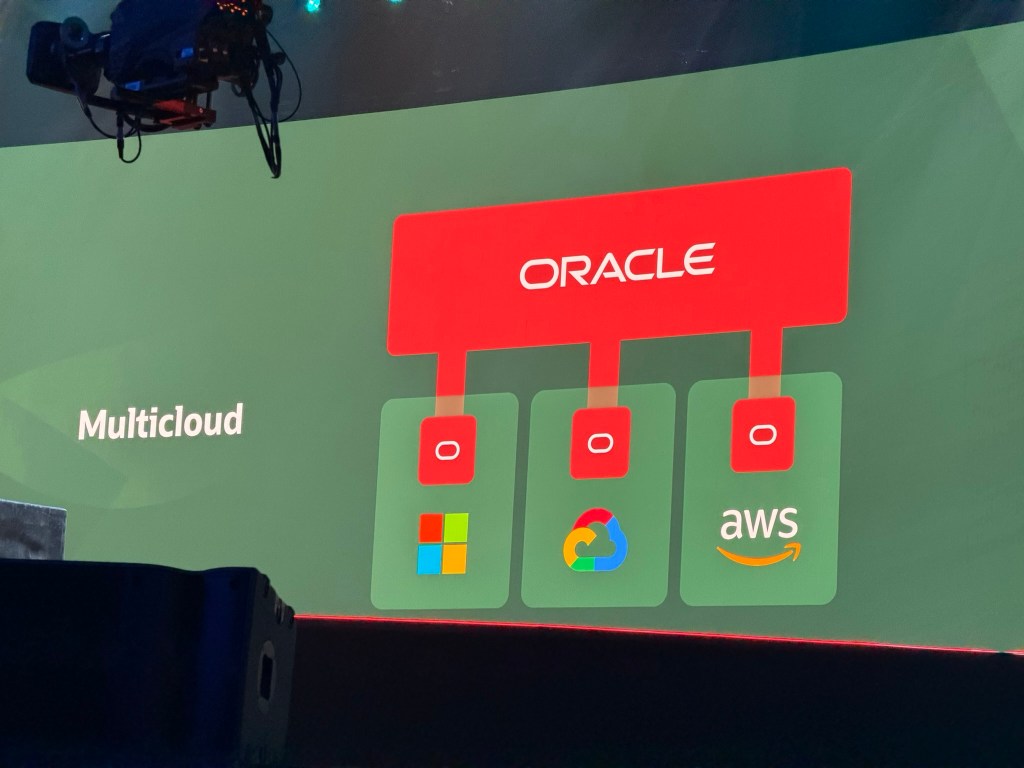

- Multicloud presence: the same approach enables OCI to place its full data platform capabilities inside other cloud environments such as Azure, GCP and AWS.

TikTok: Engineering for Global Scale and Reliability

Clay then invited Fangfei Chen, Head of Infrastructure Engineering at ByteDance (the company that owns TikTok), to the stage.

Oracle’s long-standing partnership with ByteDance demonstrates OCI’s capability to operate and co-engineer at an extreme scale. Over nearly five years, Oracle has expanded OCI globally to deliver the performance, scalability, and reliability required by ByteDance.

TikTok is a platform for creativity, serving over one billion global users, including 170 million in the US, and generating around 20 million videos daily. To support this scale, TikTok requires millions of servers, zettabytes of storage, and hundreds of terabits per second of network capacity! This necessitated deep integration with OCI at the network layer, driving Oracle to become the first to launch 100 Gbps and later 400 Gbps FastConnect interconnects. The two companies designed the network fabric together so that it could be maintained and operated reliably at this scale.

TikTok Shop offers a unique shopping experience centred on live streams, and during events like Black Friday, shopping activity can double. ByteDance works with OCI on capacity and demand planning, and Fangfei thanked Oracle for its flexibility in making resources available when they are needed. TikTok is obsessed with customer experience: users love video, watch video, and engage deeply, so Oracle plays a key role in keeping TikTok’s customers happy. All telemetry flows from the infrastructure, enabling proactive operations in which Oracle and ByteDance teams work closely together.

Clay concluded the segment by saying “OCI and Oracle would not be where we are today without all of the opportunity and the learnings that we’ve had from serving you and your customers.”

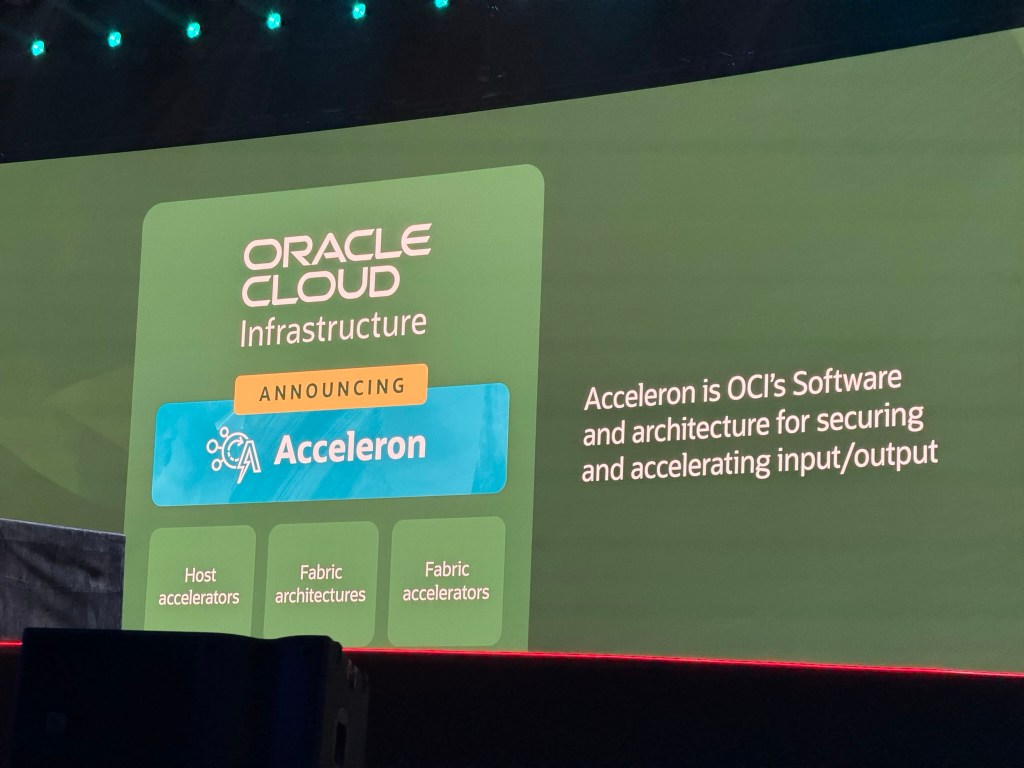

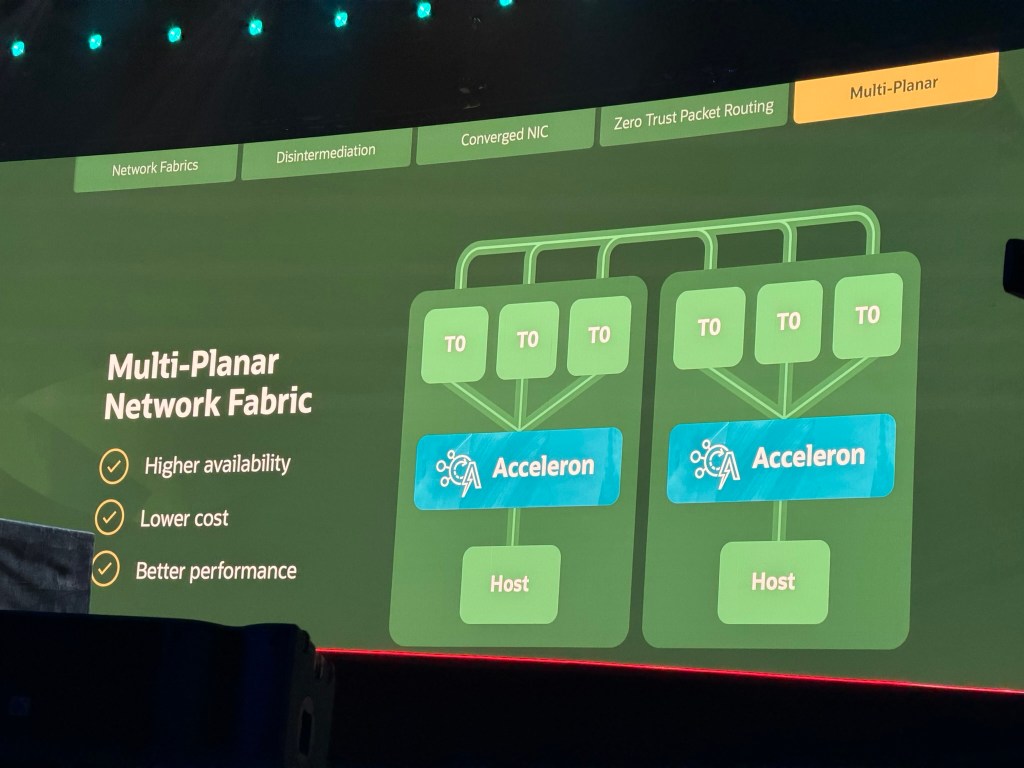

Announcing Acceleron

Acceleron is a multi-year project to upgrade OCI’s foundational software and architecture. It focuses on securing and accelerating input/output operations.

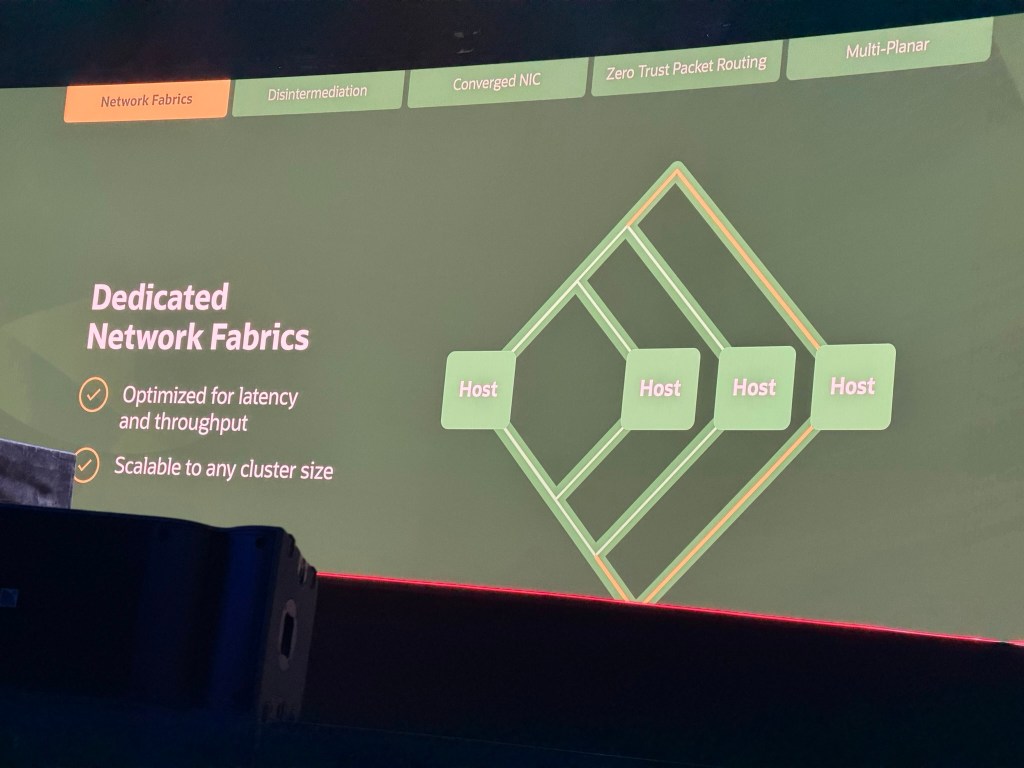

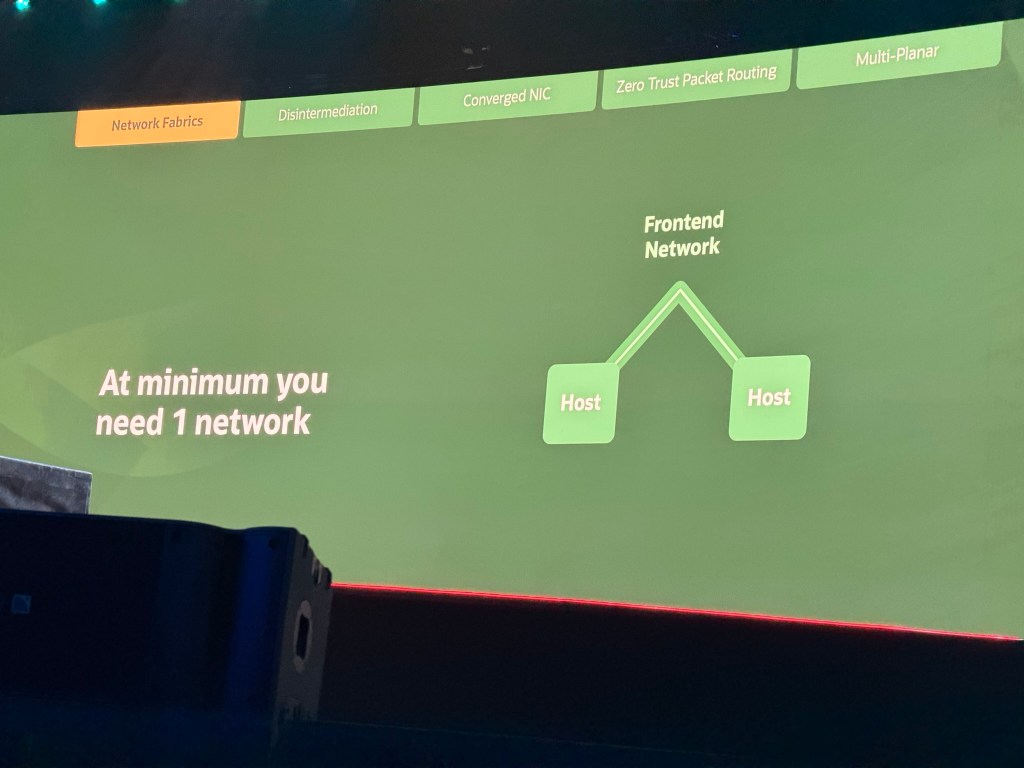

Dedicated Network Fabrics: OCI’s architecture provides a unified system for dedicated networks that can be optimised for either latency or throughput. These fabrics are securely hard-partitioned, delivering the performance of dedicated hardware with the security of a virtualised cloud, and they can scale to any cluster size.

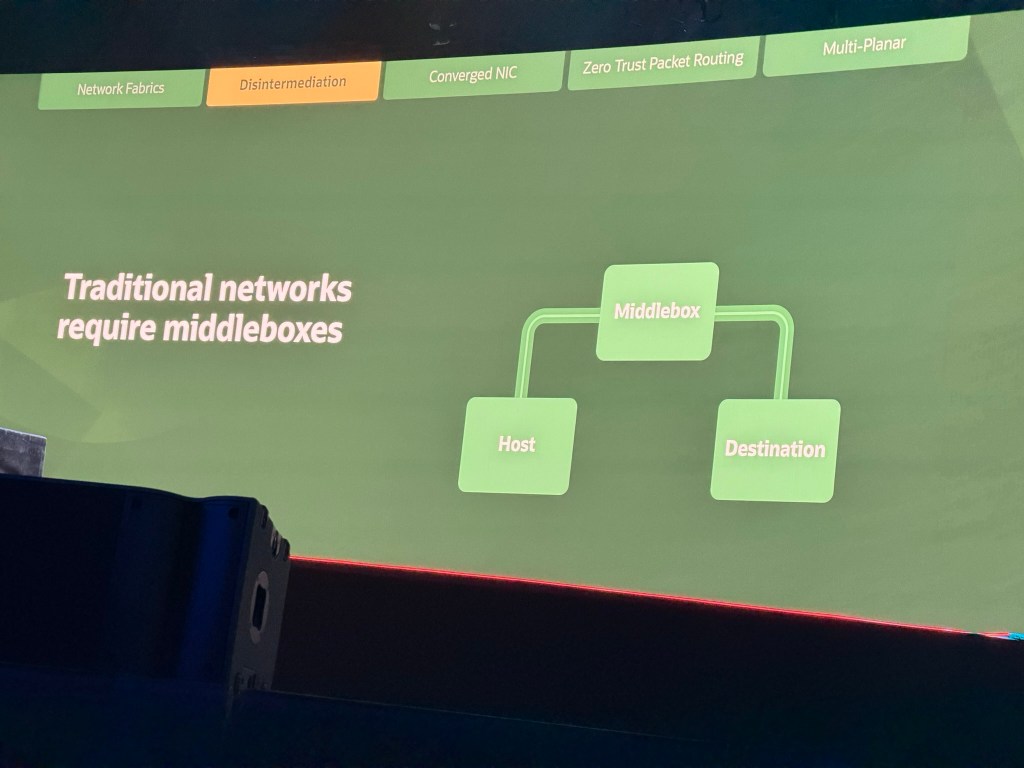

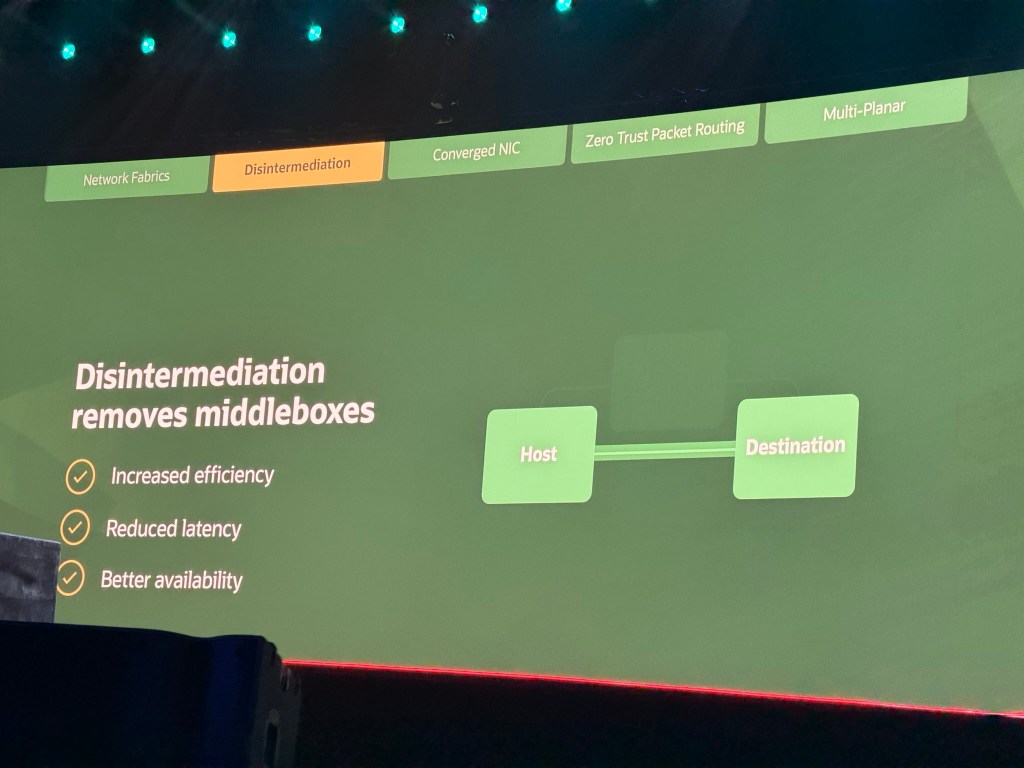

Disintermediation: This concept involves removing the “middle boxes” (physical or virtual) that traditionally handle advanced network functions. By integrating these functions into a flexible software architecture, OCI eliminates performance bottlenecks, reduces latency, and lowers costs, a key factor behind OCI’s low network pricing.

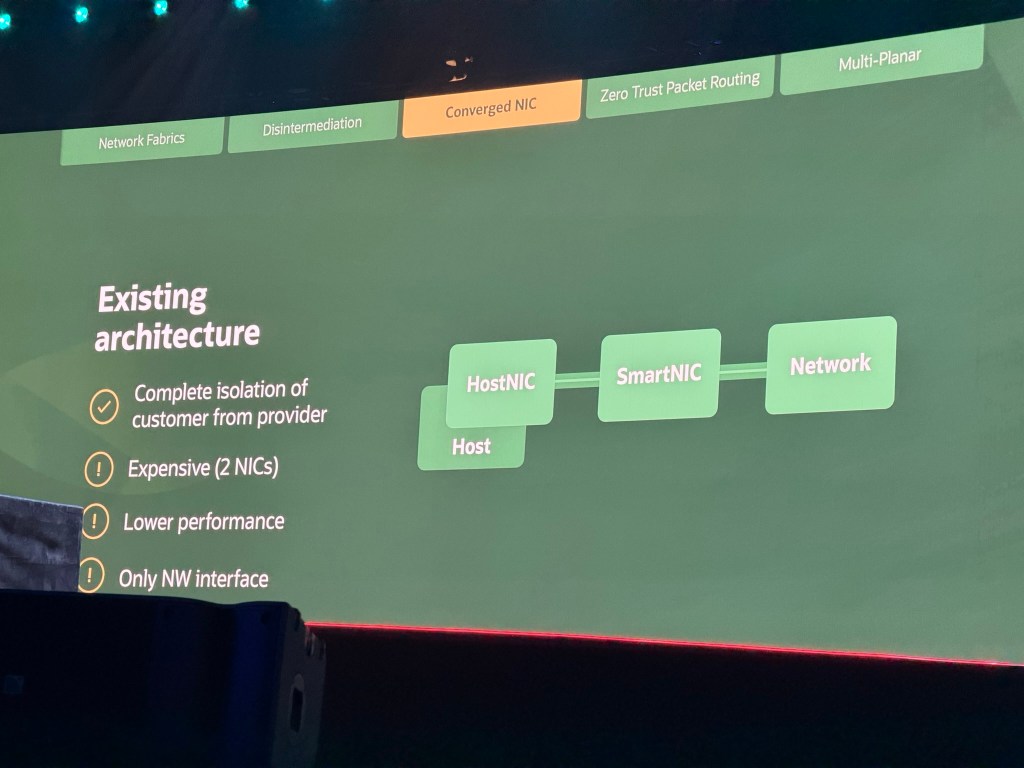

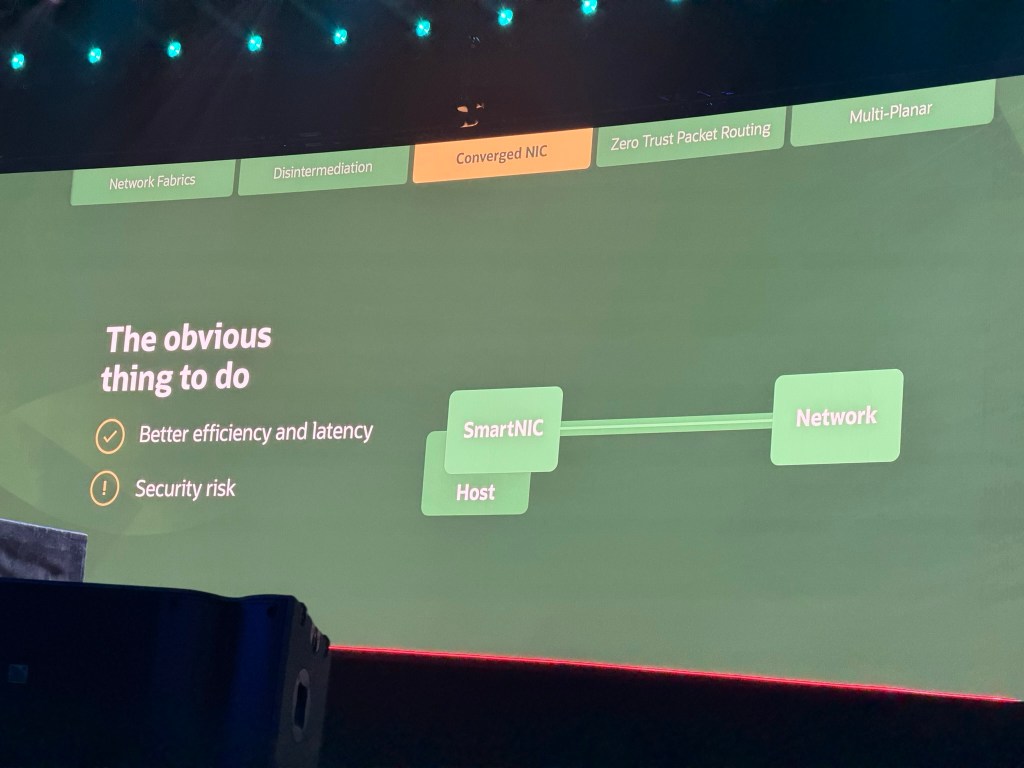

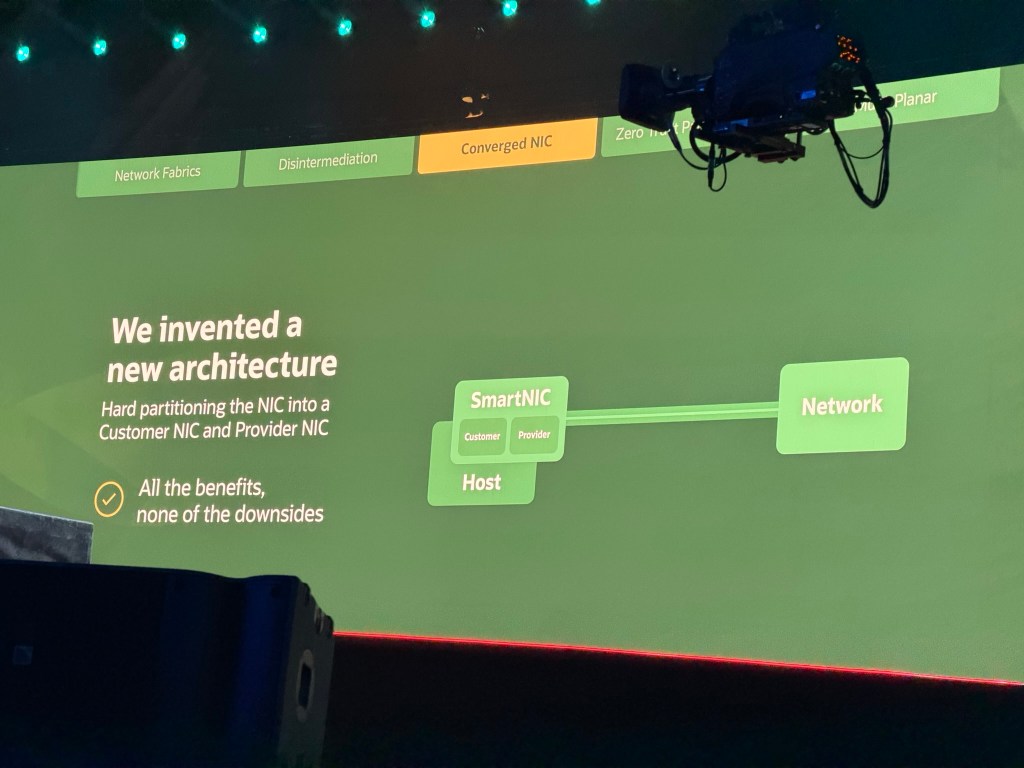

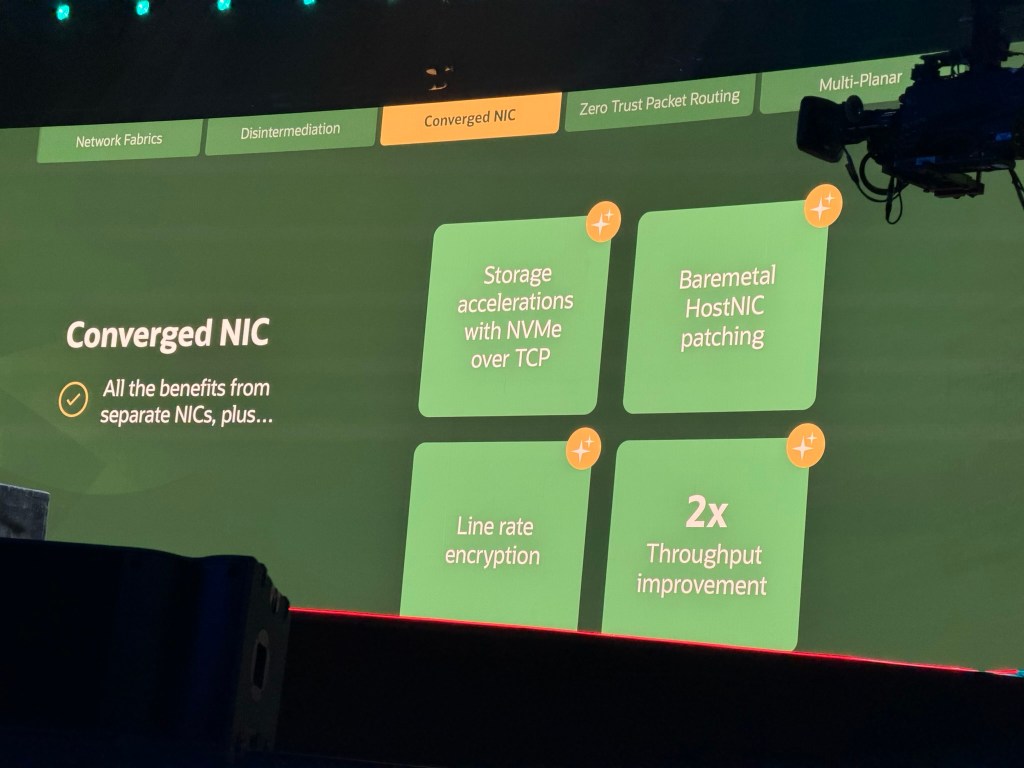

Converged NIC: In collaboration with AMD, OCI has designed a new architecture built on a single smart NIC. It achieves security through hard partitioning, with dedicated cores and memory for both the customer-controlled NIC and the provider-controlled NIC. The benefits this provides:

- Maintains the high security posture of a two-NIC system

- Enables a direct NVMe interface for block storage

- Provides line-rate encryption for all traffic

- Allows for seamless patching of the host NIC, even on bare metal instances

- Delivers up to twice the available throughput for compute instances

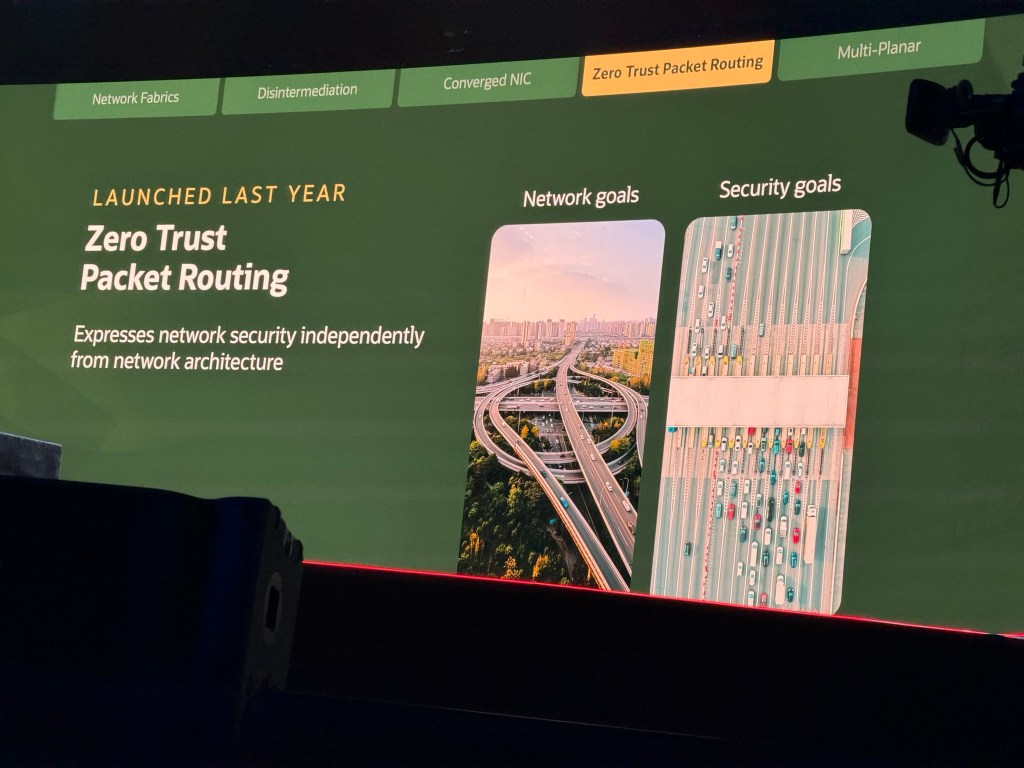

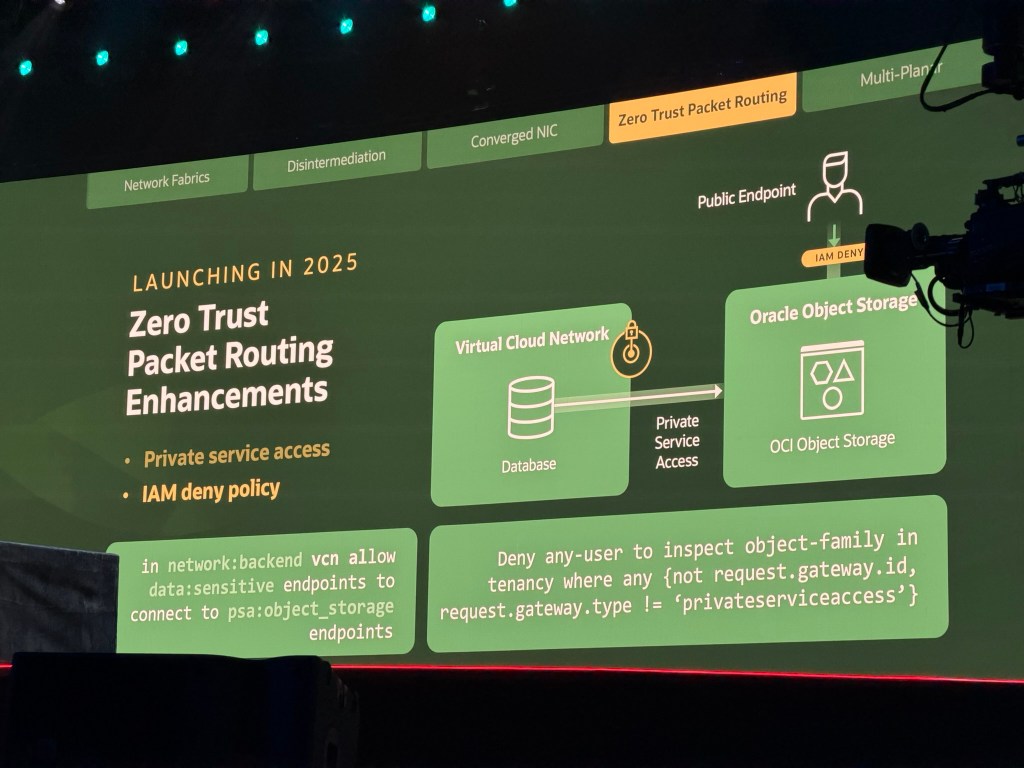

Zero Trust Packet Routing: This system decouples network security policy from the network architecture. Security rules are written in a dedicated policy language, which makes them easier to analyse and enforce. This prevents data exfiltration by enforcing explicit rules (for example, allowing a database to access object storage only via a private endpoint) that are independent of network topology.
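The idea of policy that is independent of topology can be sketched in a few lines of Python. This is a toy model, not ZPR’s actual policy language: it assumes each resource carries a security attribute, and that rules match on those attributes plus the network path, never on subnets or IP addresses. The `Resource` and `Rule` classes, the attribute names, and the path labels are all hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class Resource:
    name: str
    attribute: str  # hypothetical security attribute, e.g. "app:db"
    subnet: str     # network placement; the policy check deliberately ignores it


@dataclass(frozen=True)
class Rule:
    source_attr: str
    dest_attr: str
    via: str  # hypothetical path label, e.g. "private-endpoint"


# Hypothetical policy: the database may reach object storage only via a private endpoint.
POLICY: FrozenSet[Rule] = frozenset({Rule("app:db", "svc:object-storage", "private-endpoint")})


def is_allowed(src: Resource, dst: Resource, via: str, policy: FrozenSet[Rule] = POLICY) -> bool:
    """Default deny: allow traffic only if an explicit attribute-based rule matches."""
    return Rule(src.attribute, dst.attribute, via) in policy


if __name__ == "__main__":
    db = Resource("orders-db", "app:db", "10.0.1.0/24")
    bucket = Resource("reports", "svc:object-storage", "oracle-services")
    print(is_allowed(db, bucket, "private-endpoint"))  # True
    print(is_allowed(db, bucket, "internet-gateway"))  # False
    moved = Resource("orders-db", "app:db", "10.0.9.0/24")  # moved to another subnet
    print(is_allowed(moved, bucket, "private-endpoint"))  # True: same answer after the move
```

Because the decision depends only on attributes and the path, re-subnetting the database changes nothing, while any path not explicitly allowed is denied by default.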

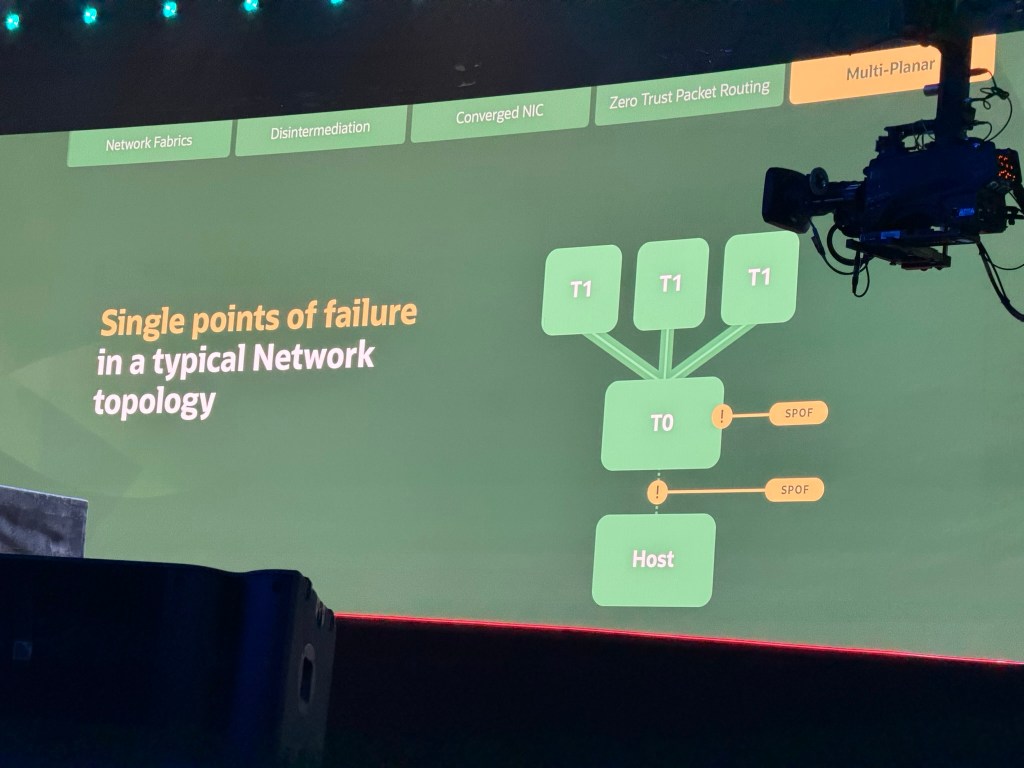

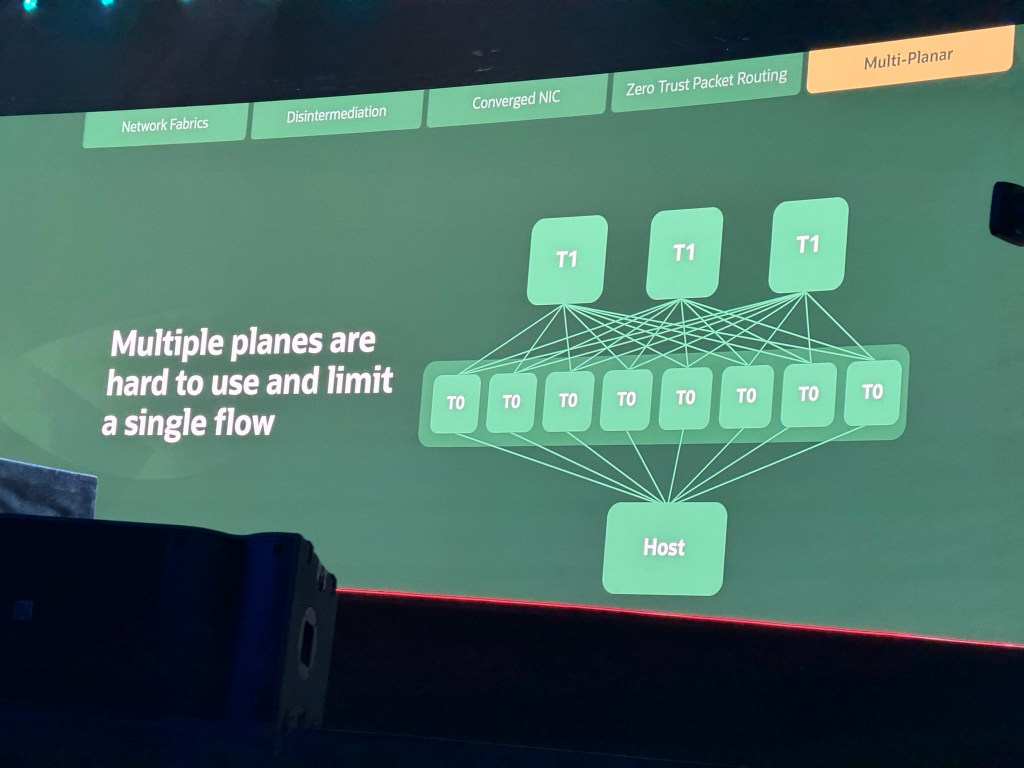

Multi-Planar Networks: OCI implements multiple redundant network planes behind the scenes while exposing a simple, single network plane to the host. The benefits this provides:

- Higher Availability: Eliminates single points of failure

- Lower Cost: Allows for the use of smaller switches

- Better Performance: Results in fewer hops across the network

- Ease of Use: Preserves simplicity for the user, who does not have to manage multiple interfaces
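Oracle did not say how traffic is spread across the planes, so the following Python sketch swaps in a well-known technique, rendezvous (highest-random-weight) hashing, purely to illustrate how one logical interface can sit on top of several planes. It assumes each flow is identified by a hypothetical 5-tuple and is pinned to one healthy plane; `pick_plane` and `Flow` are illustrative names, not OCI internals.

```python
import hashlib
from typing import Iterable, Tuple

# Hypothetical flow key: (src_ip, dst_ip, src_port, dst_port, protocol)
Flow = Tuple[str, str, int, int, str]


def _score(flow: Flow, plane: int) -> int:
    """Stable pseudo-random weight for a (flow, plane) pair.

    hashlib is used instead of hash() because Python randomises str hashes per process.
    """
    digest = hashlib.sha256(f"{flow}|{plane}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def pick_plane(flow: Flow, healthy_planes: Iterable[int]) -> int:
    """Rendezvous hashing: a flow stays on its highest-scoring healthy plane."""
    planes = list(healthy_planes)
    if not planes:
        raise RuntimeError("no healthy network plane available")
    return max(planes, key=lambda plane: _score(flow, plane))


if __name__ == "__main__":
    # The host sees one interface; the fabric spreads its flows over four planes.
    flows = [("10.0.0.1", f"10.0.1.{i}", 40000 + i, 443, "tcp") for i in range(8)]
    before = {f: pick_plane(f, [0, 1, 2, 3]) for f in flows}
    after = {f: pick_plane(f, [0, 1, 3]) for f in flows}  # plane 2 has failed
    for f in flows:
        print(f[1], before[f], "->", after[f])
```

With this scheme only the flows that were on the failed plane move; every other flow keeps its plane. Pinning each flow to a single plane also avoids reordering packets within a flow.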

OpenAI: Industrialising Compute for the AI Frontier

Clay then introduced Peter Hoeschele, Vice President of Infrastructure and Industrial Compute Engineering at OpenAI, to the stage.

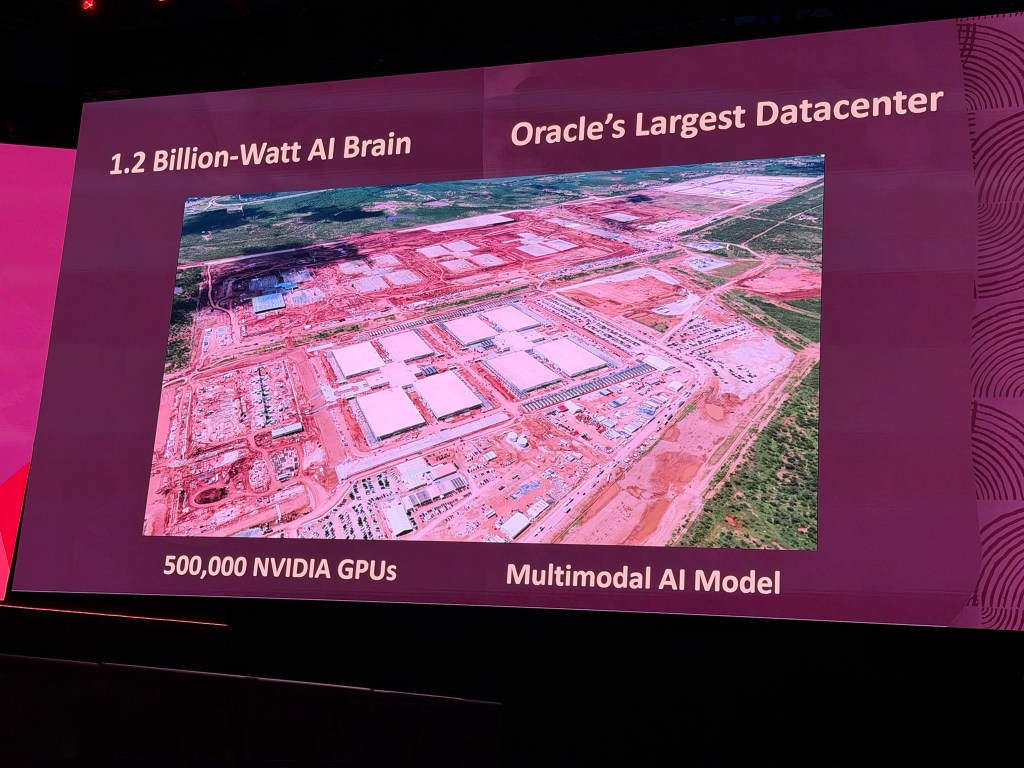

When OpenAI needed additional capacity, Oracle stepped in, understood the requirements, and delivered 200 megawatts of data centre capacity. Typically, a facility of this scale takes four years to build, but Oracle brought it online in just 11 months.

Peter described the collaboration as truly inspiring. OpenAI is targeting 10x growth over the next two years, with the additional capacity dedicated to training models and serving customers. Their guiding principle is simple: more compute leads to better models. To act on it, they sought the best GPUs and the largest clusters possible. When OpenAI wanted to develop a new model, it lacked the ability to scale internally, but with OCI it could. As OpenAI expands globally, Oracle ensures compliance with security and policy requirements, enabling seamless deployment whenever OpenAI enters a new region.

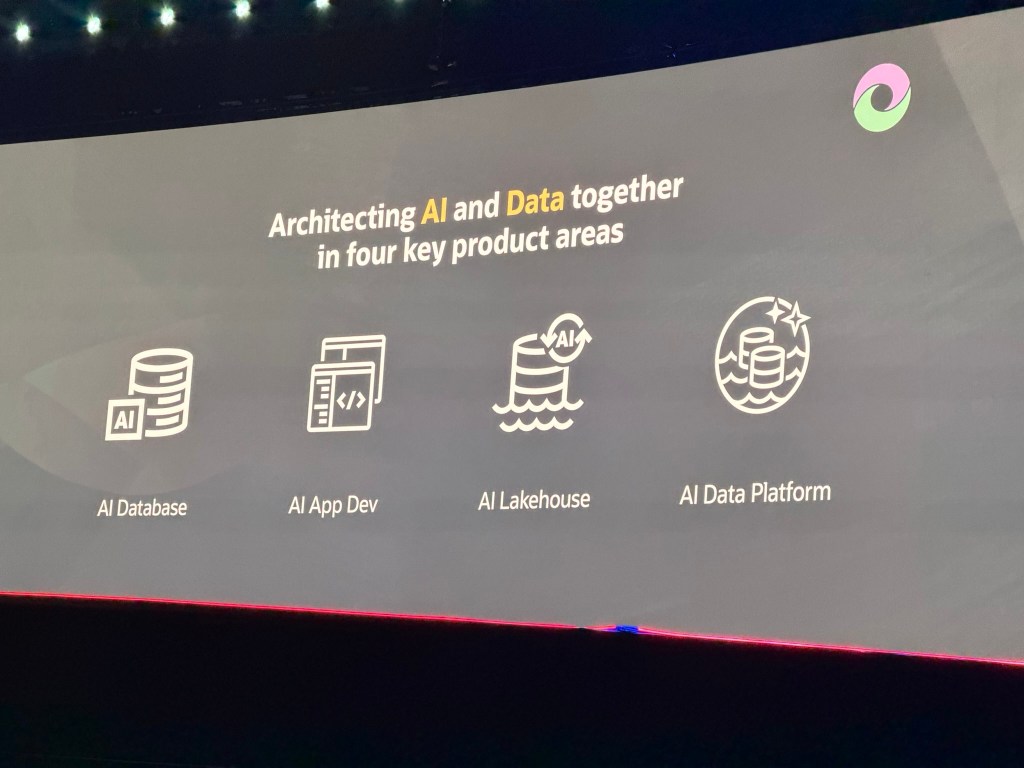

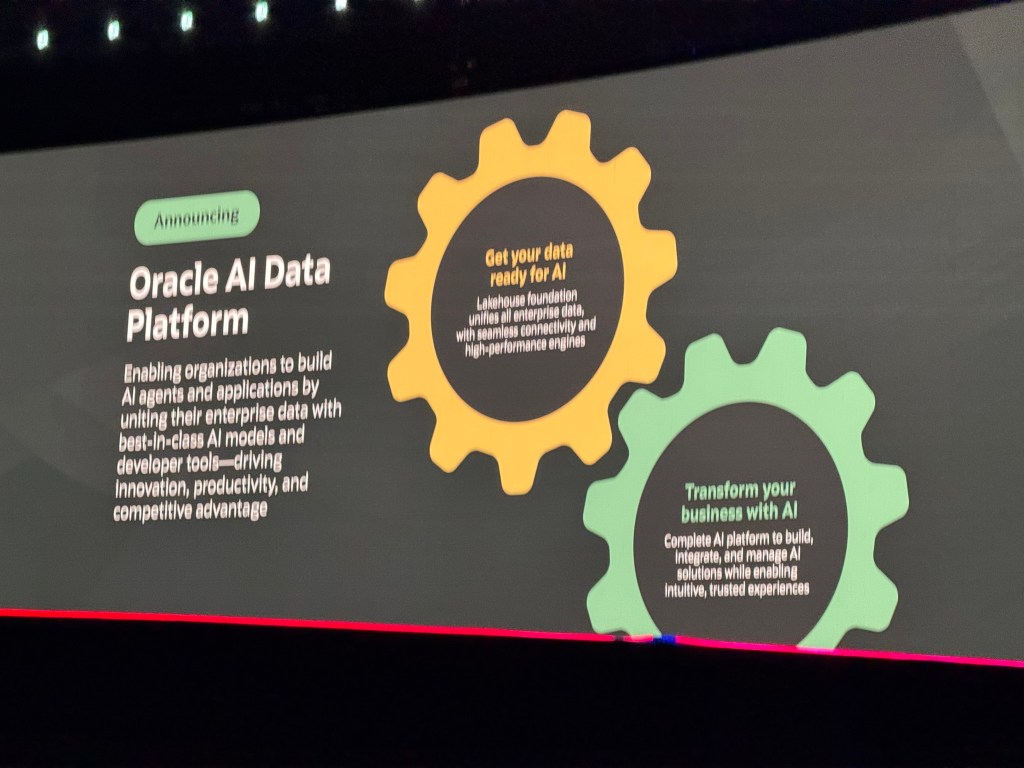

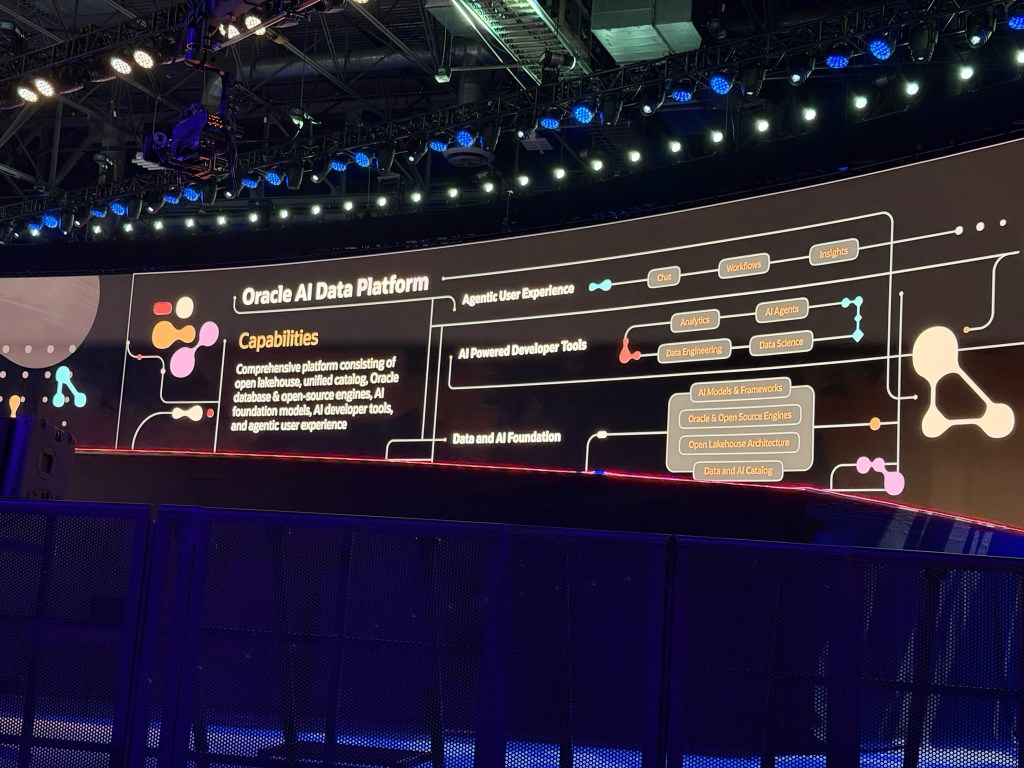

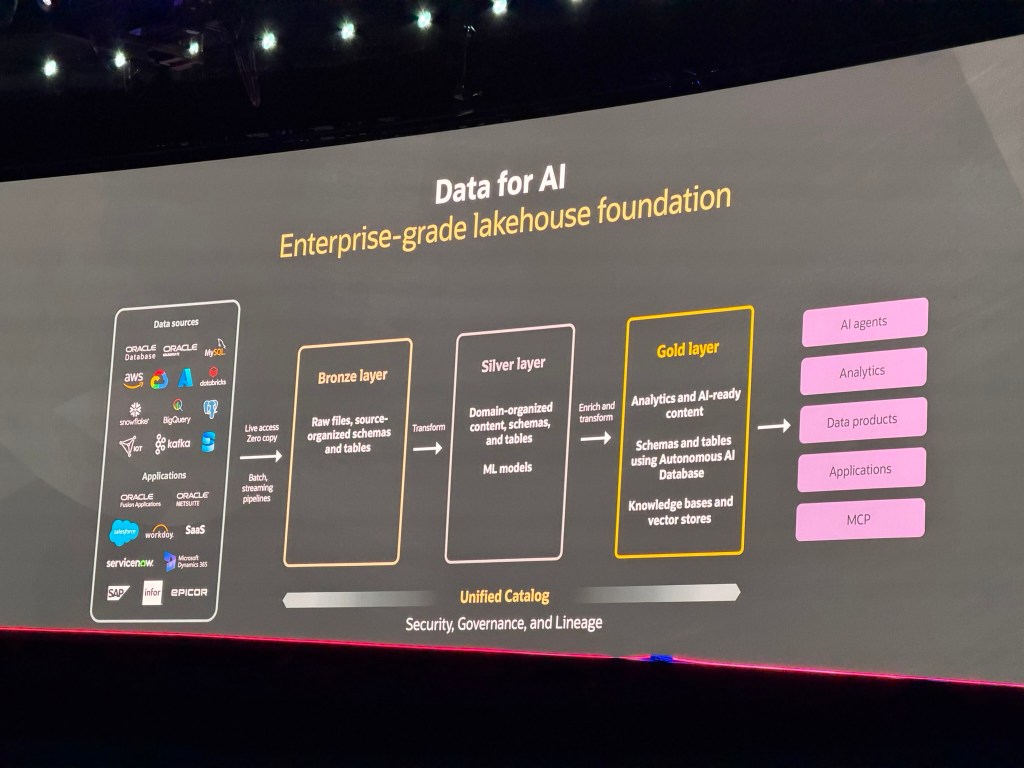

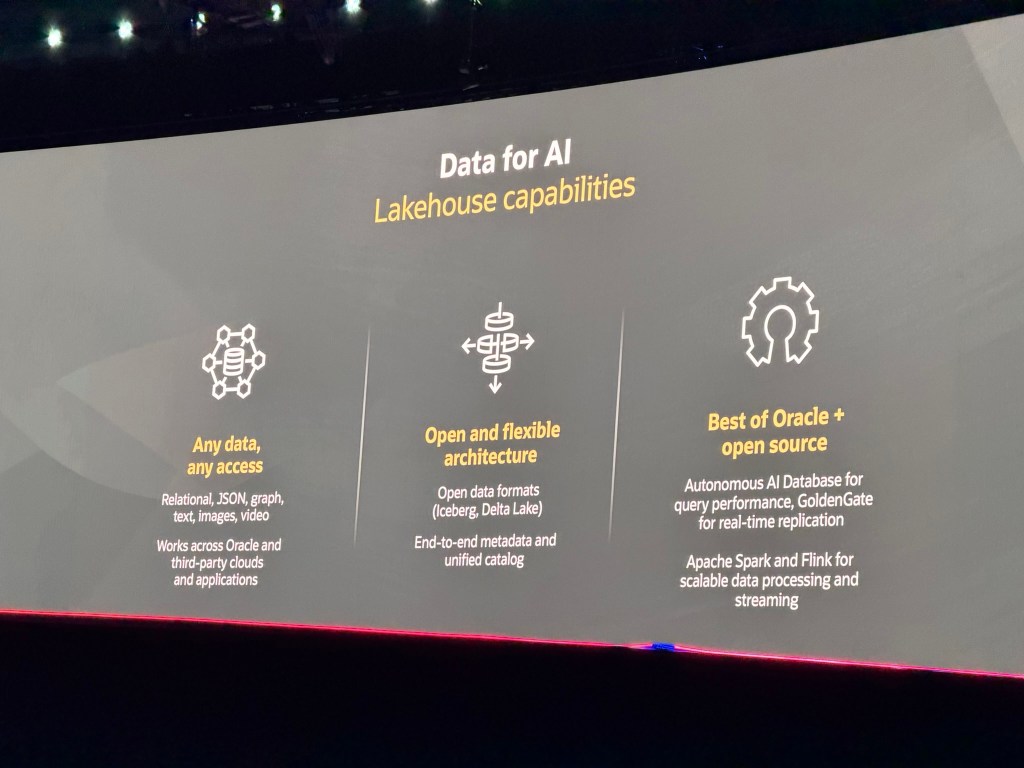

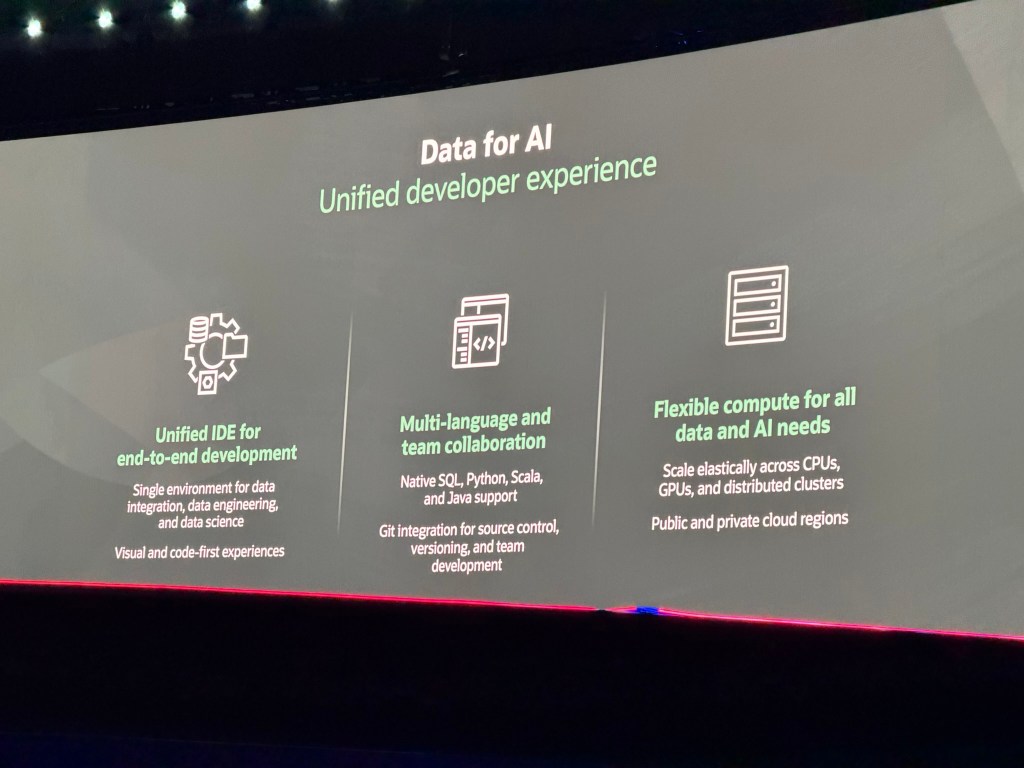

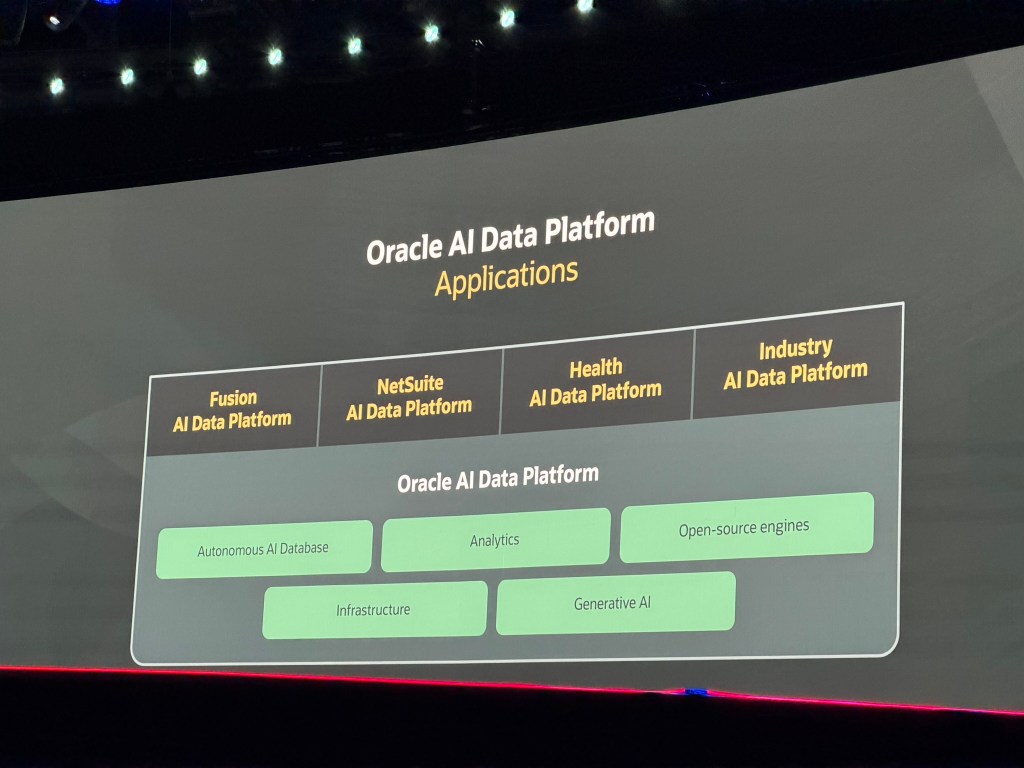

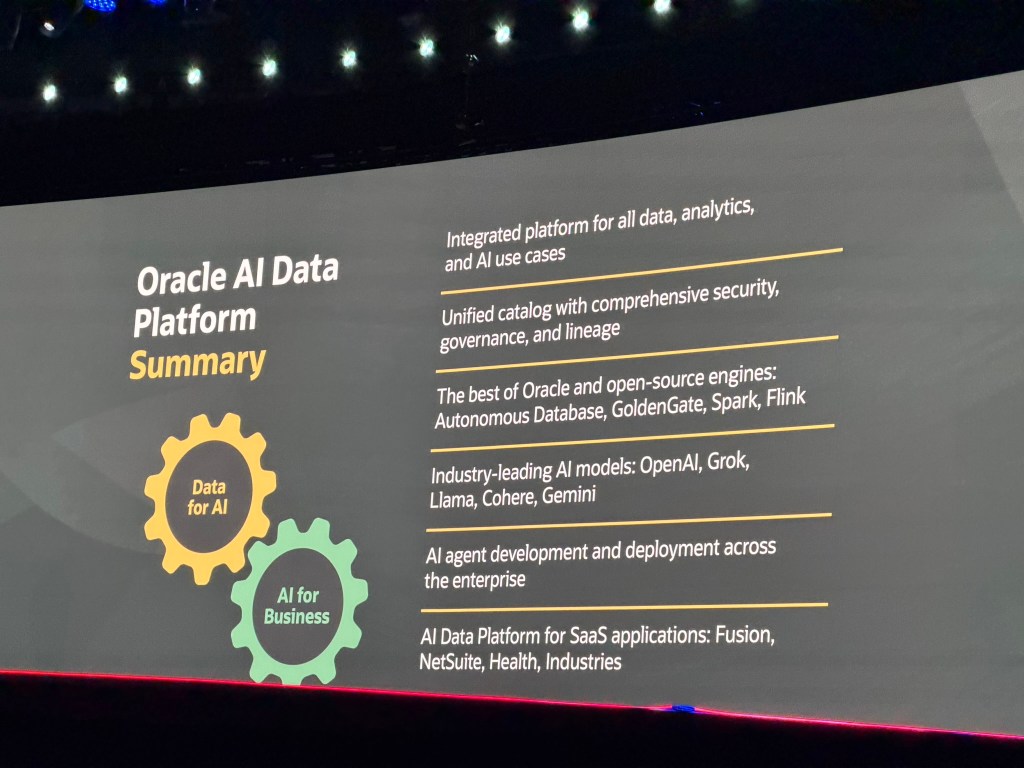

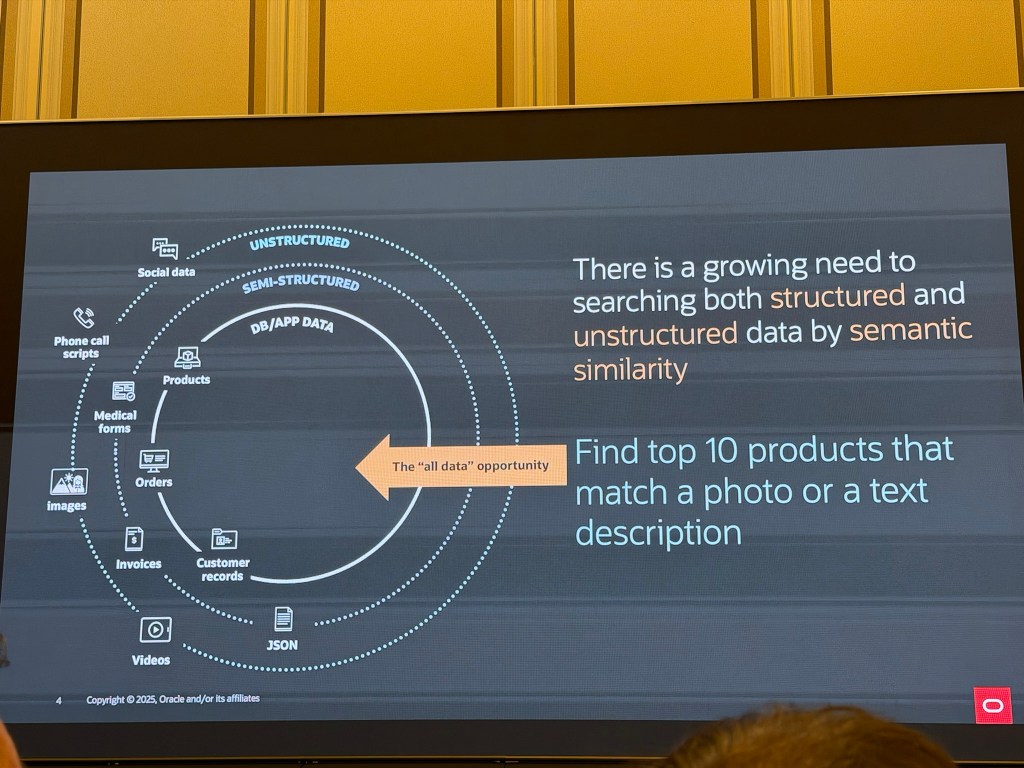

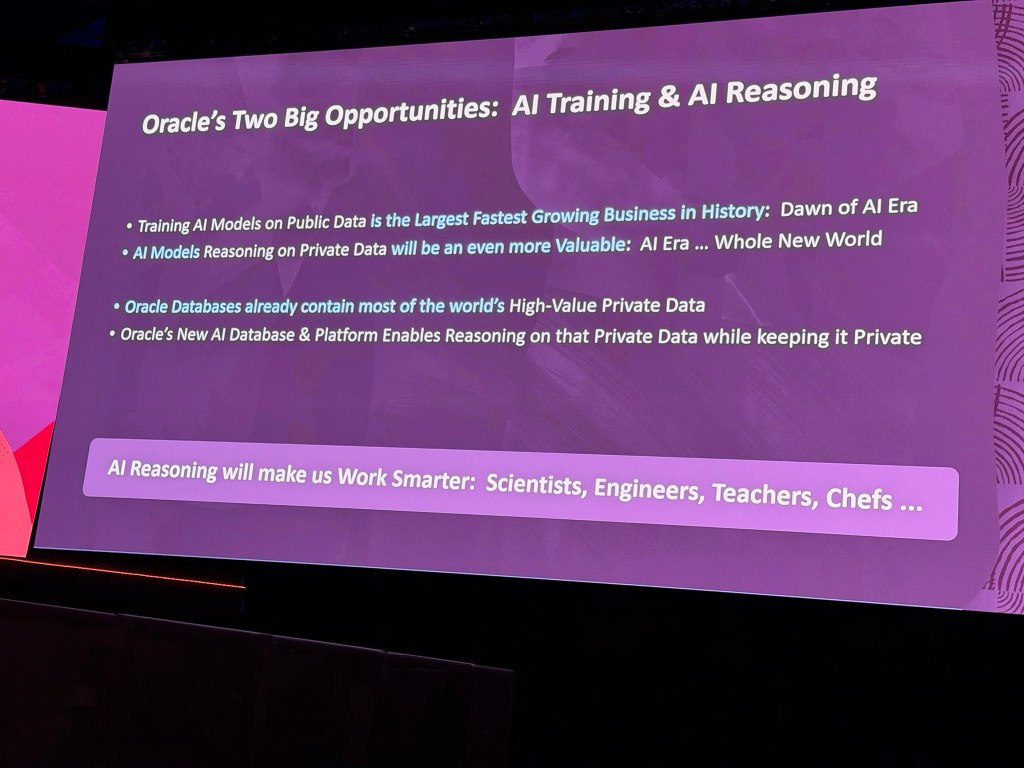

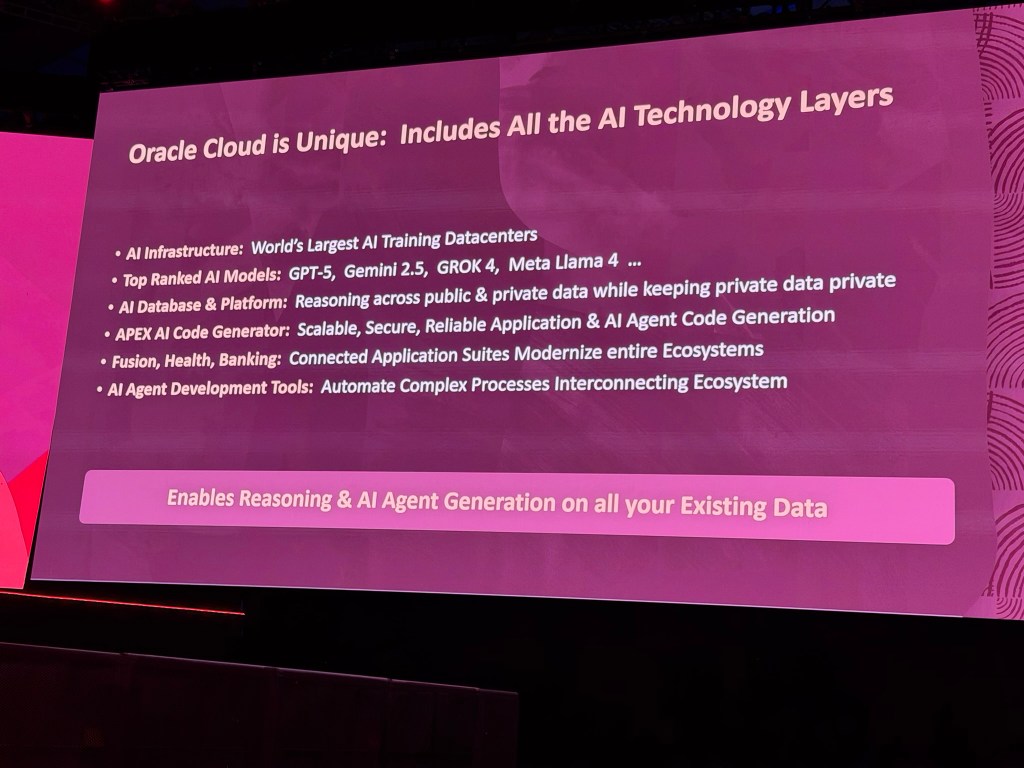

AI Data Platform

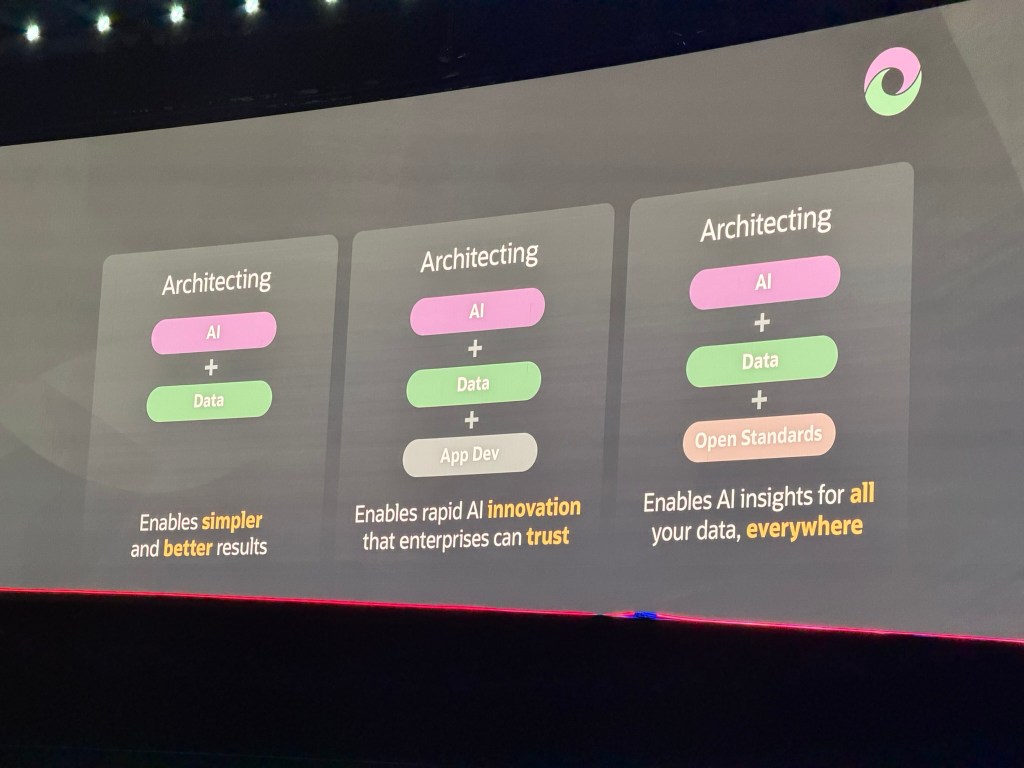

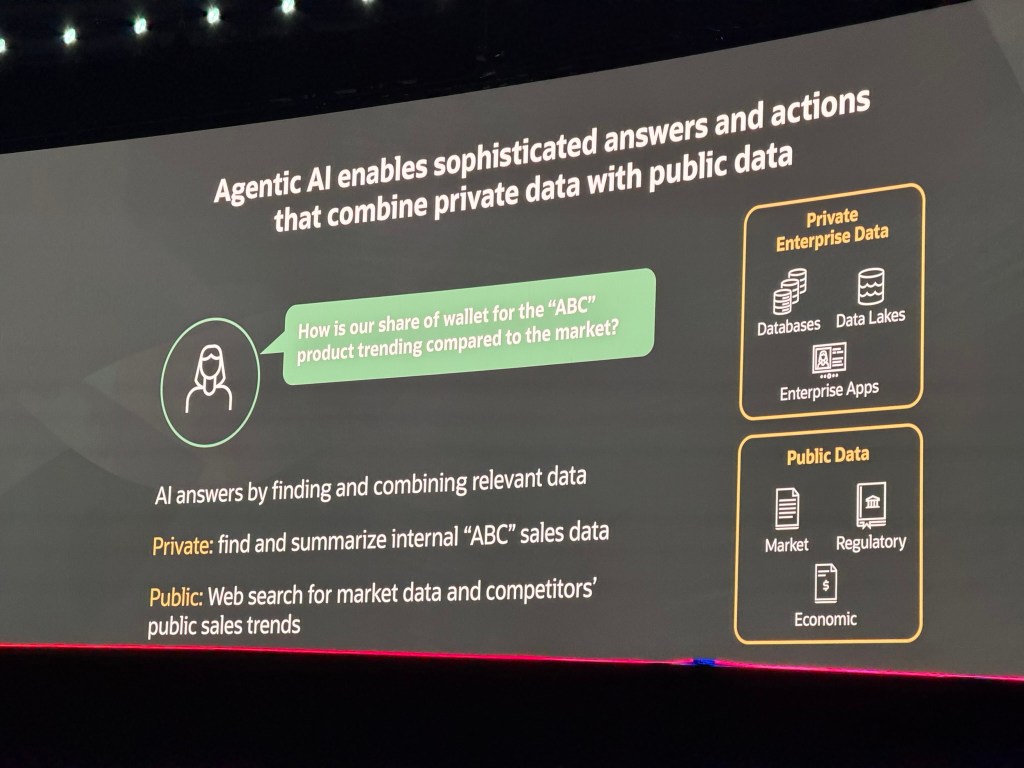

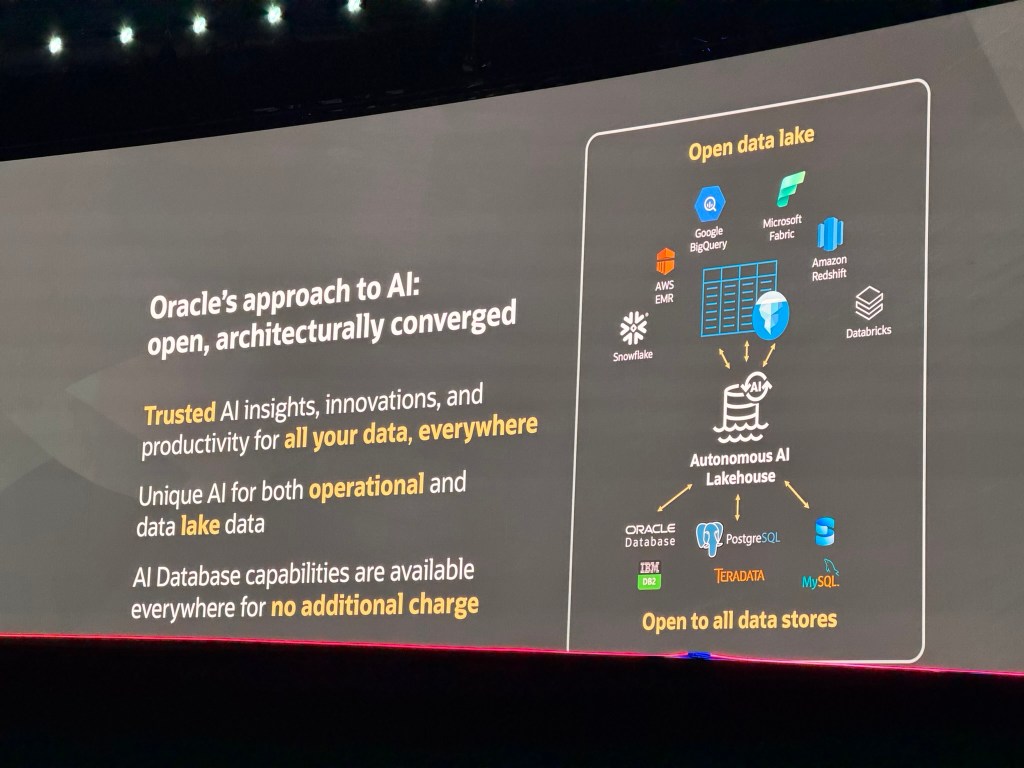

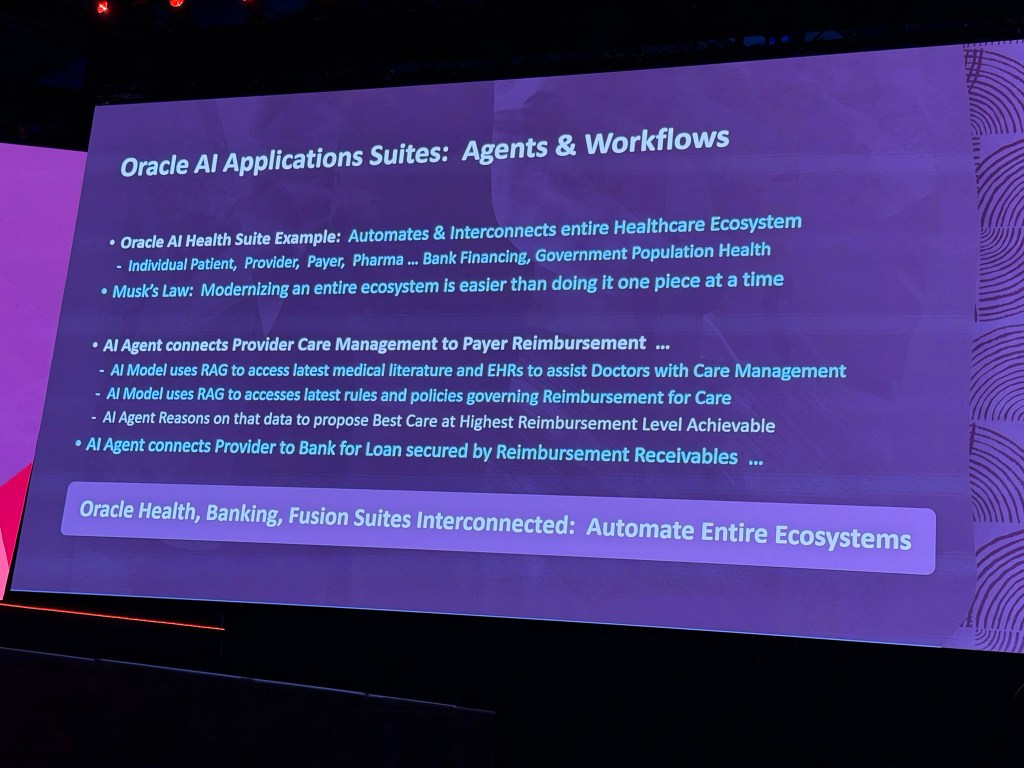

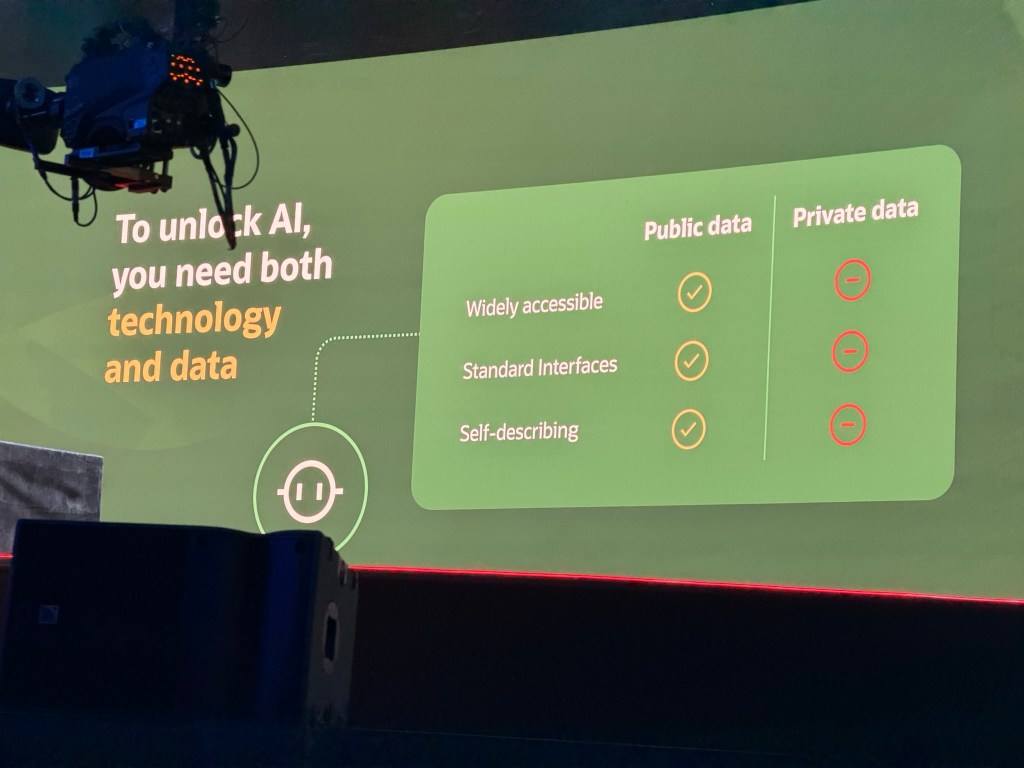

Clay concluded the keynote by announcing the AI Data Platform. He explained that unlocking AI requires both cutting-edge technology and access to data, public and private, and that Oracle believes there is an efficient way to integrate the two.

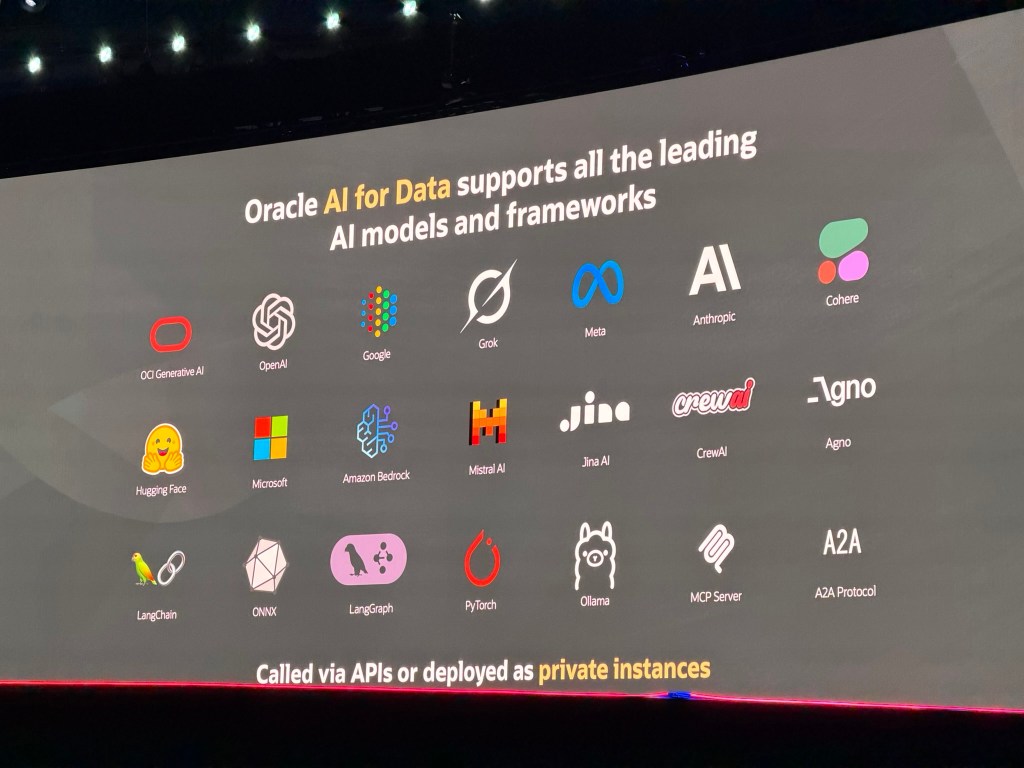

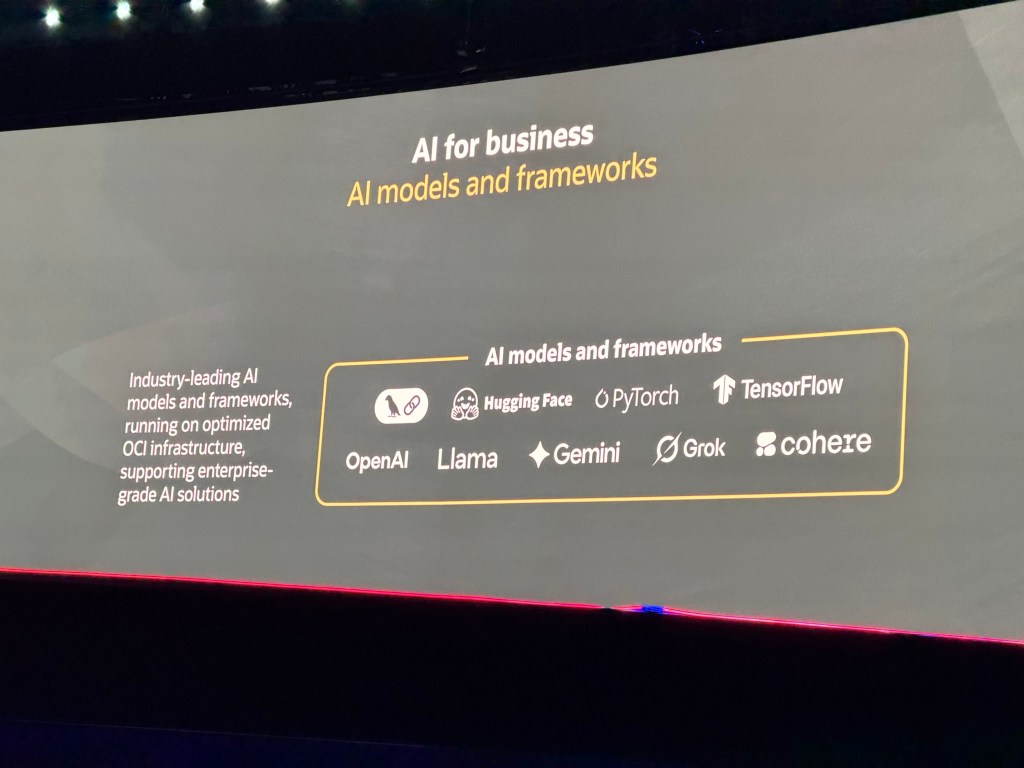

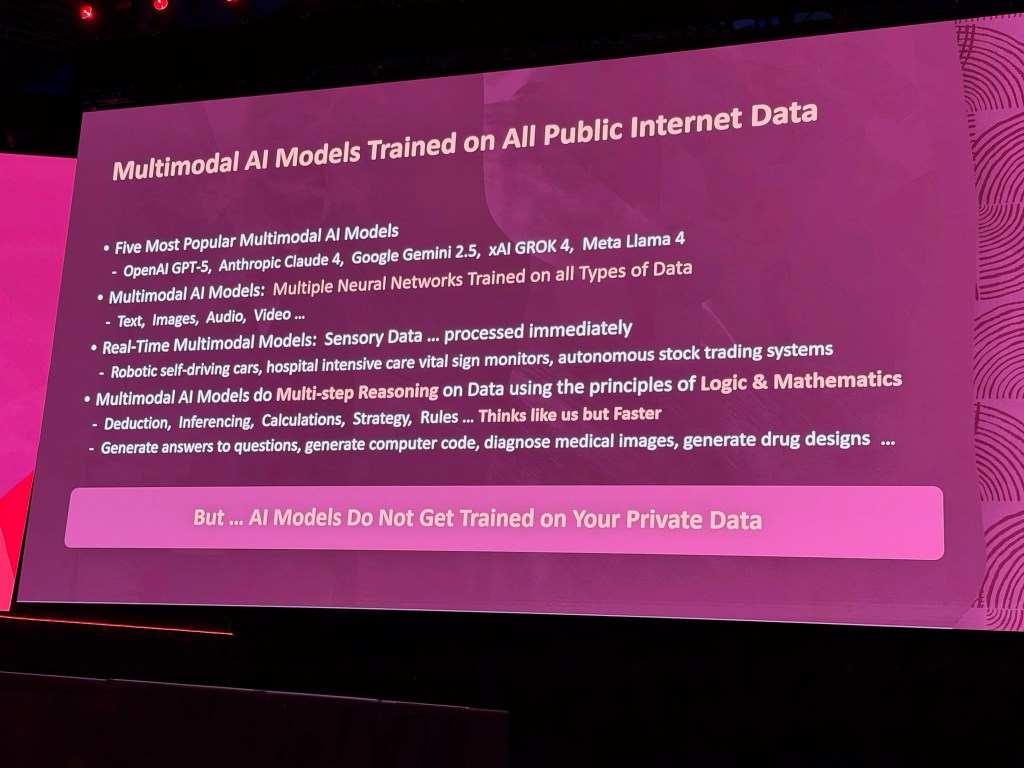

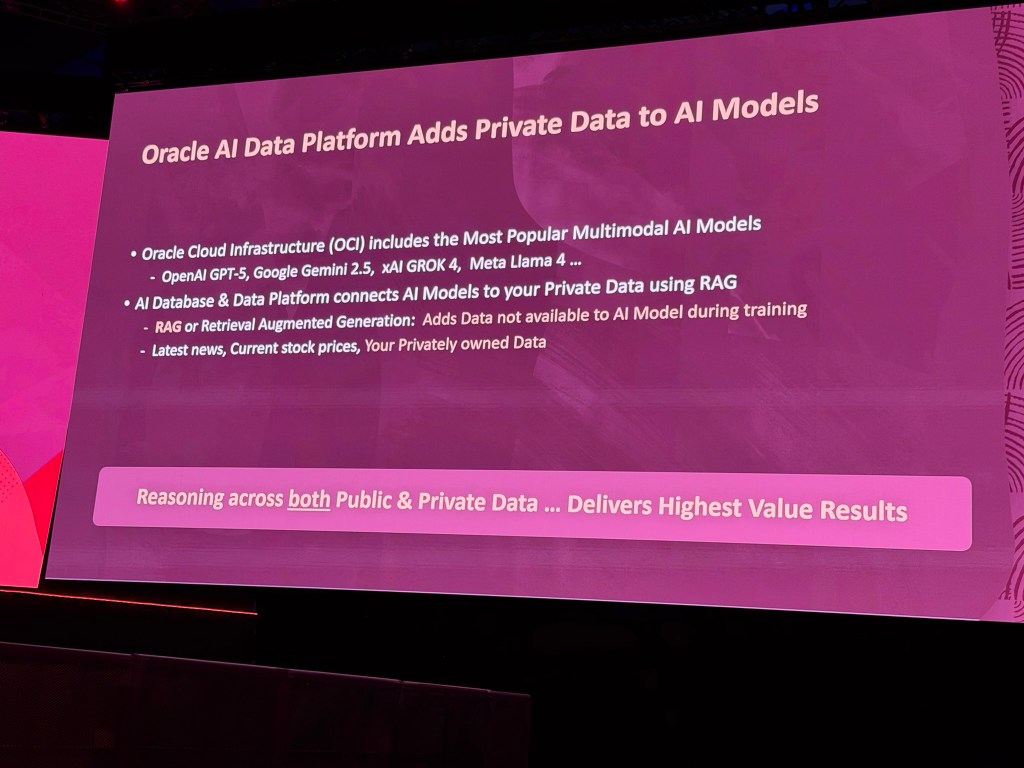

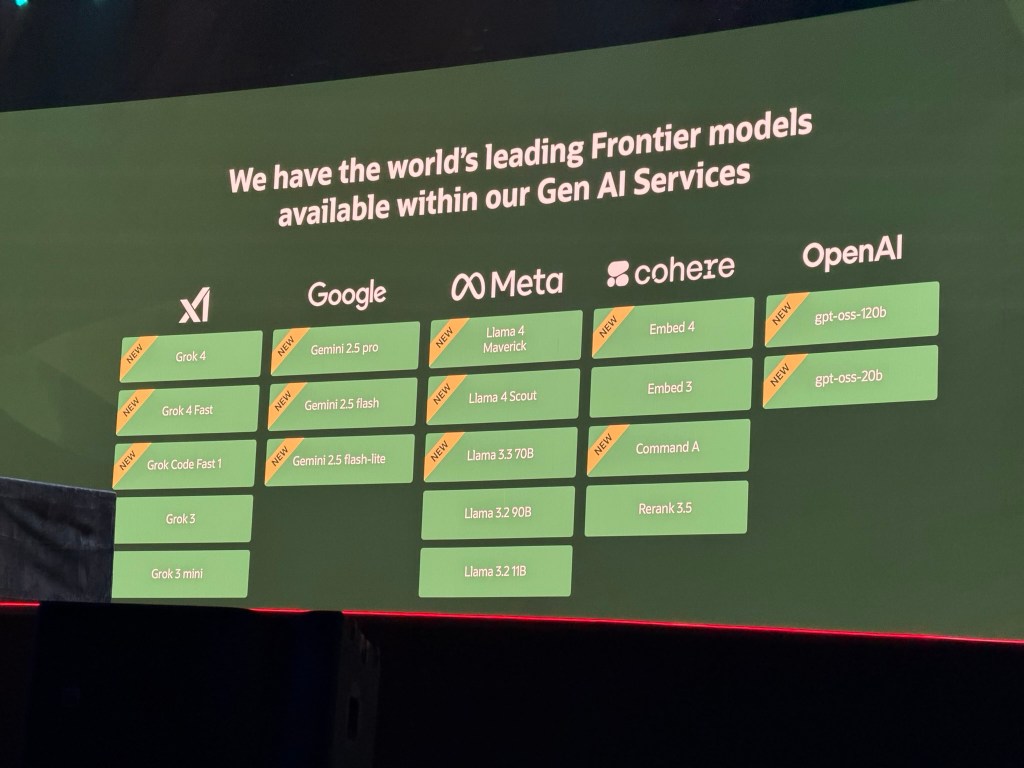

He began by explaining that the world’s leading frontier models are available on OCI through OCI Gen AI Services.

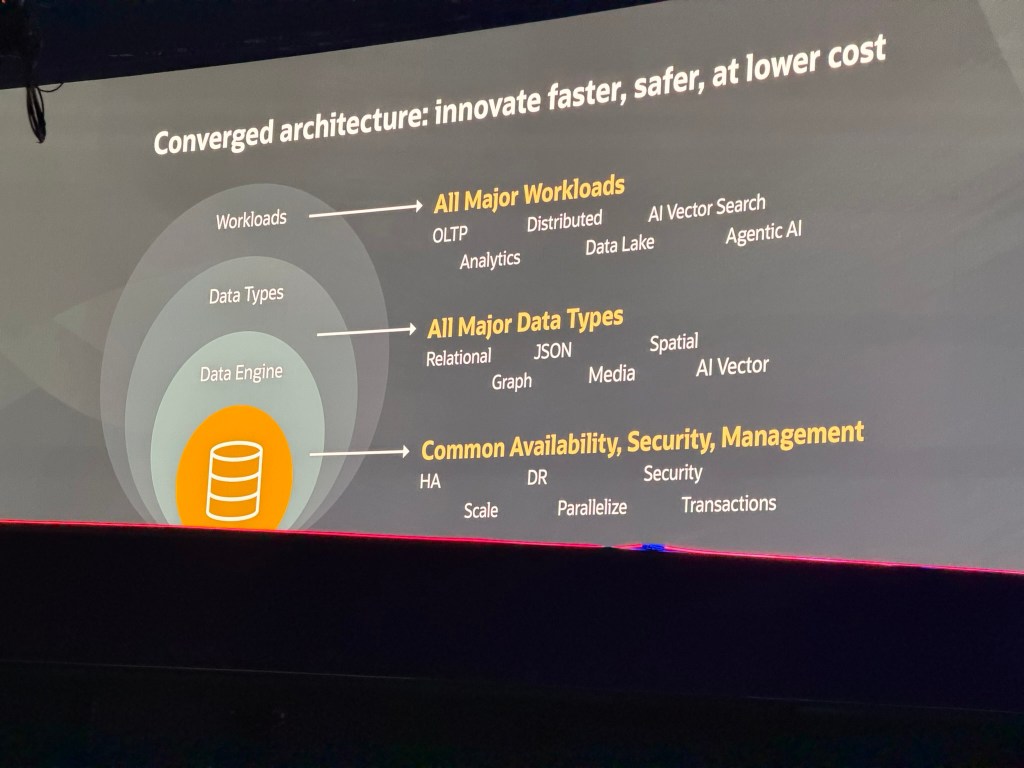

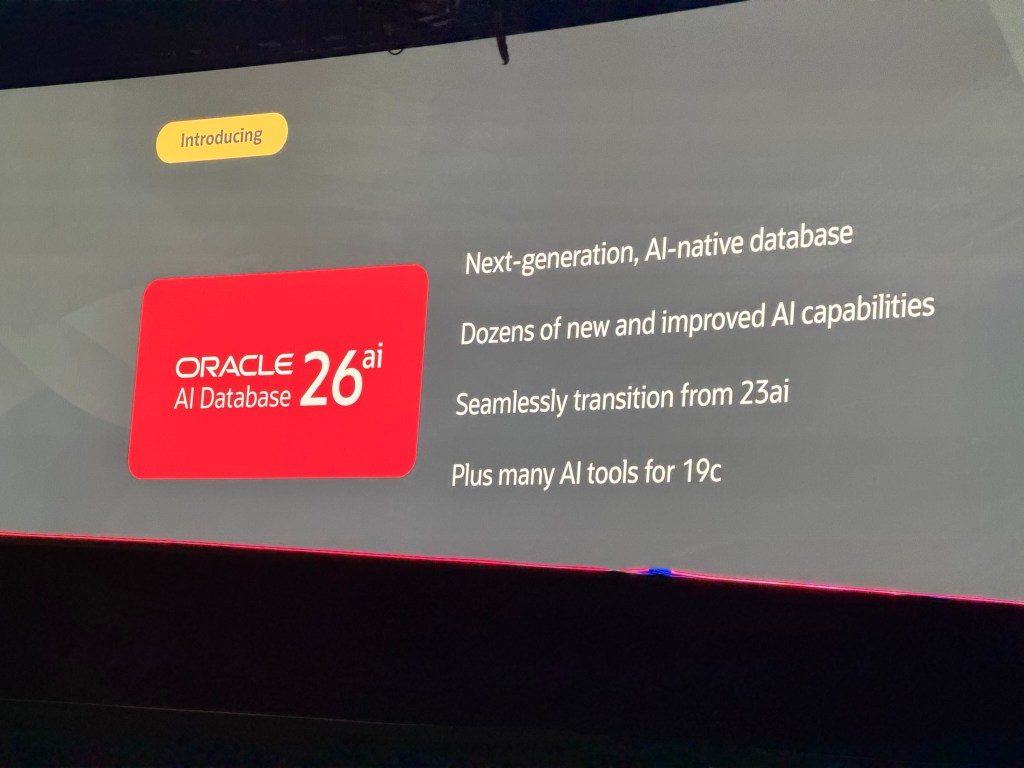

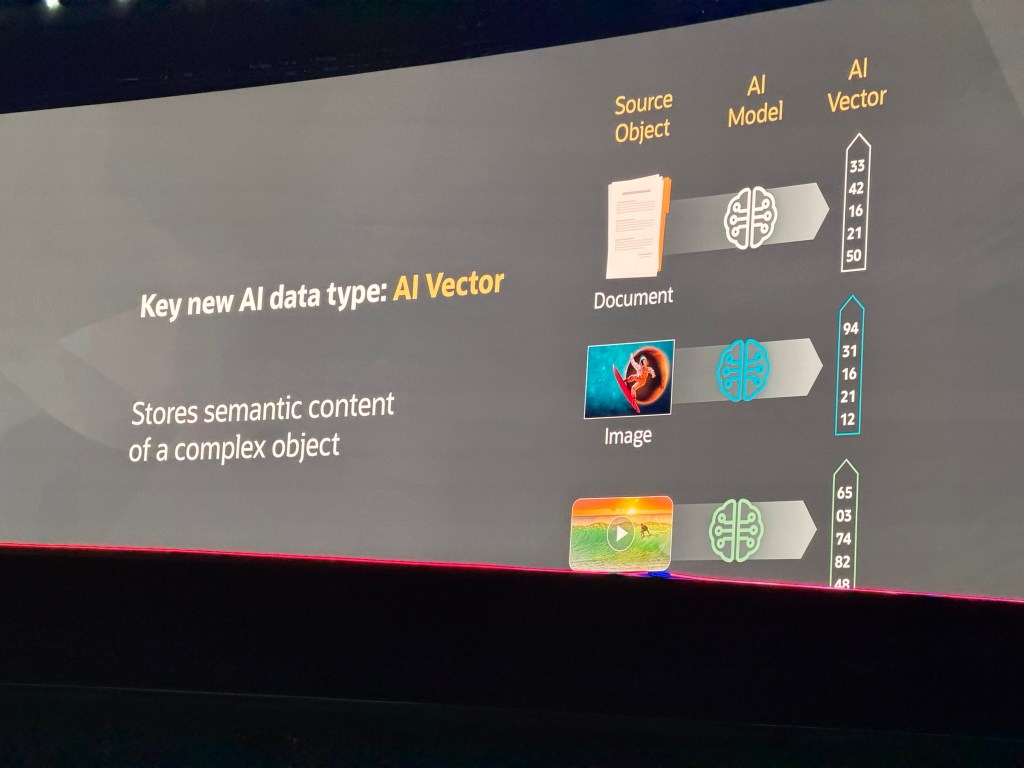

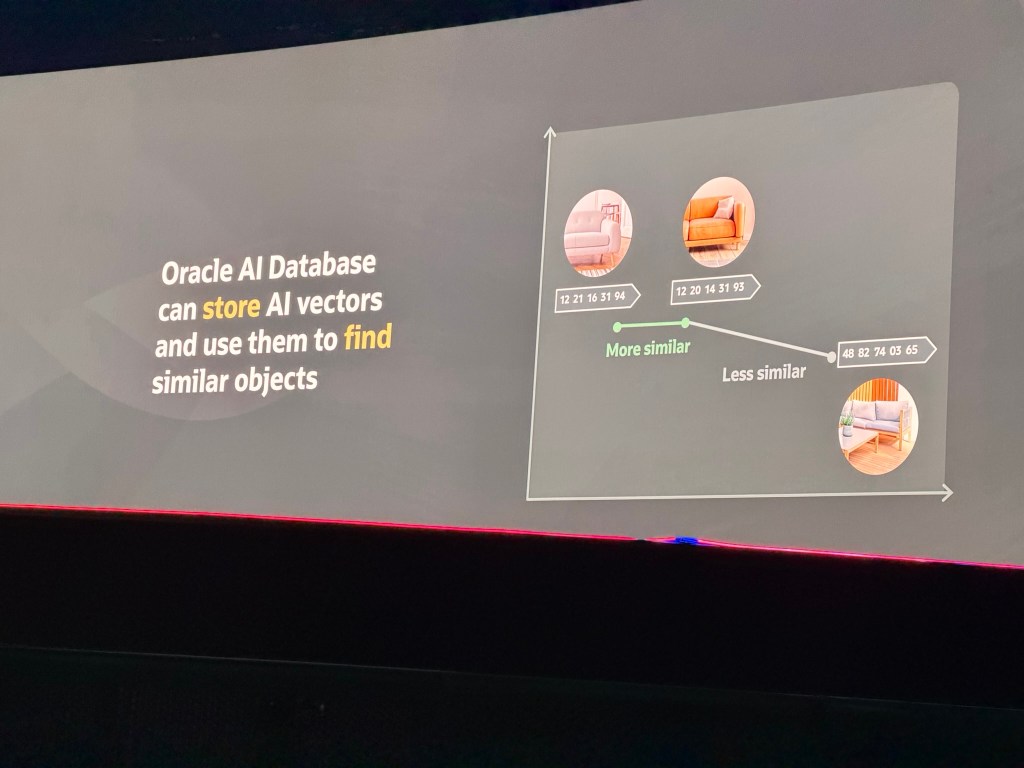

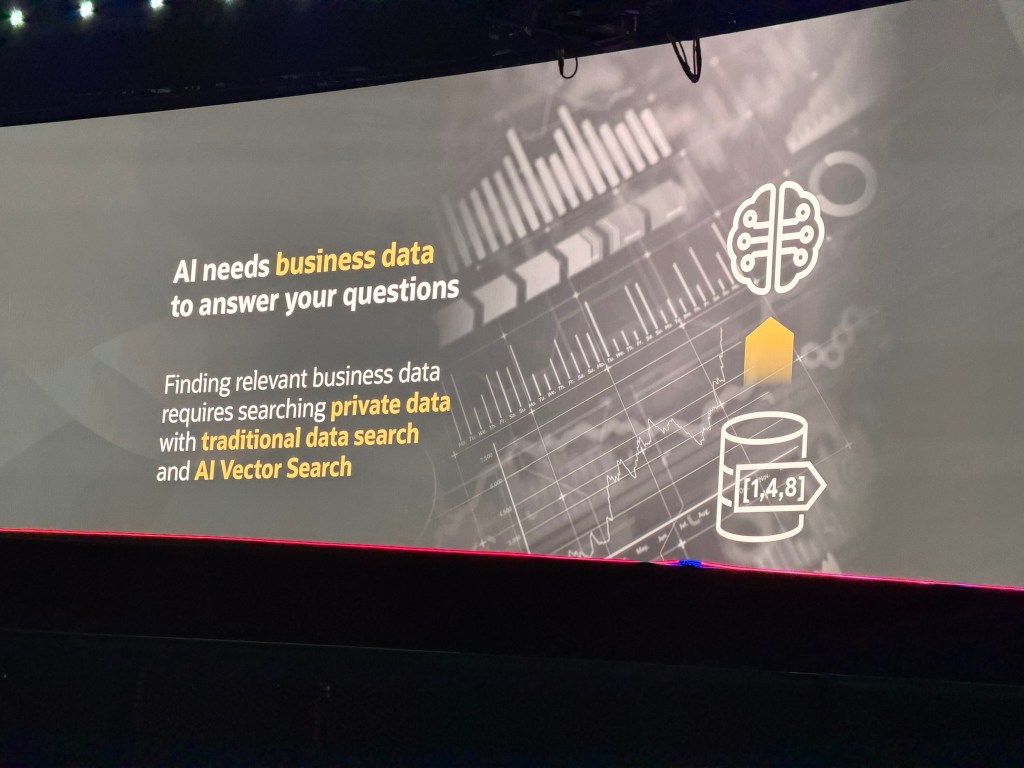

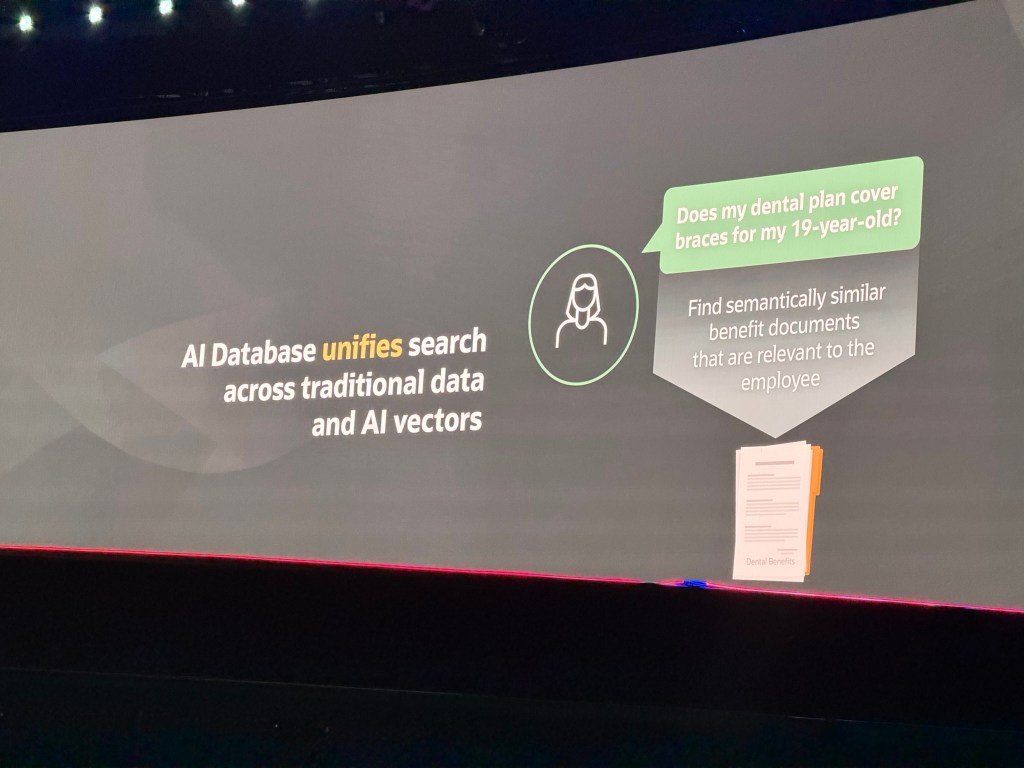

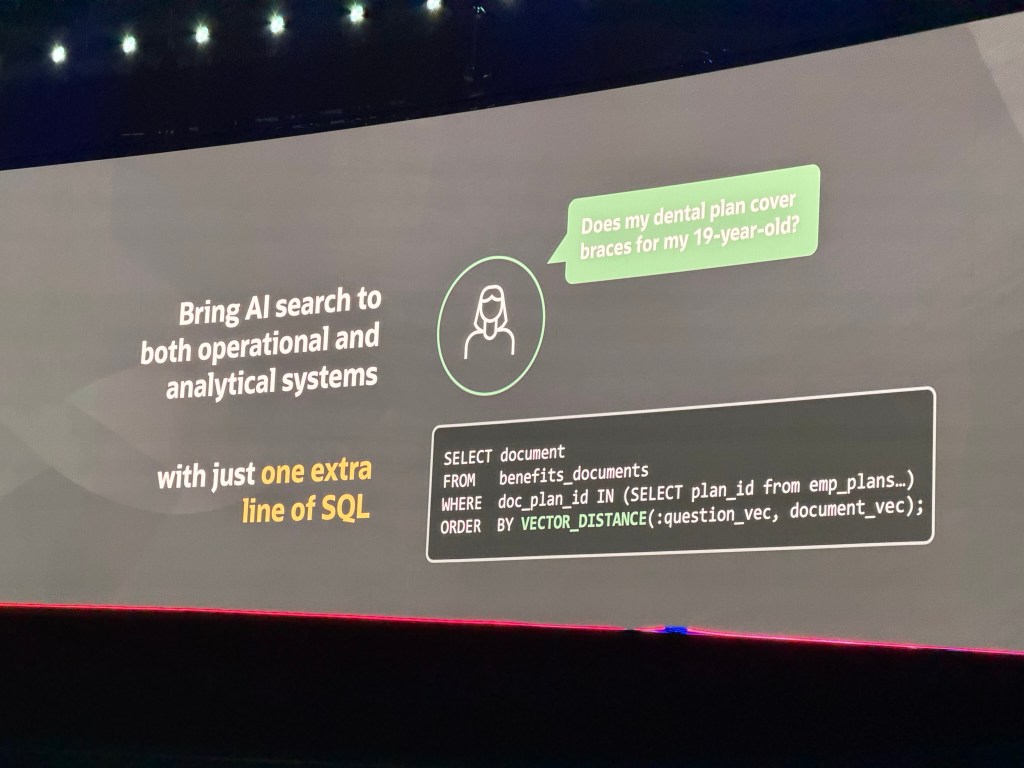

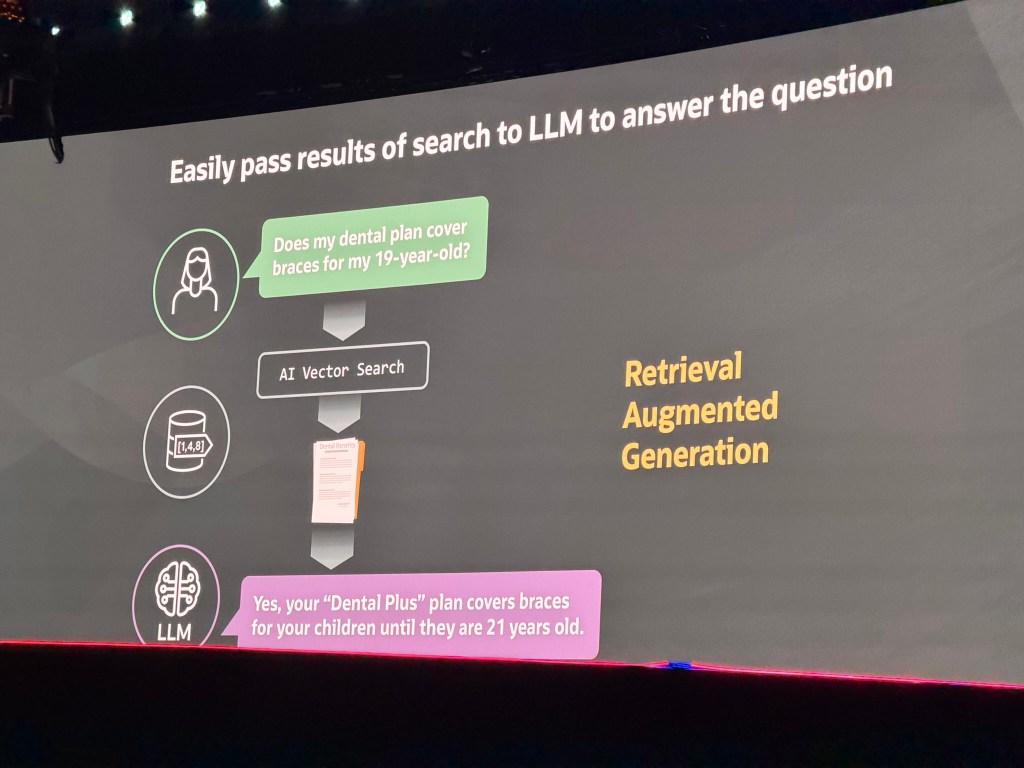

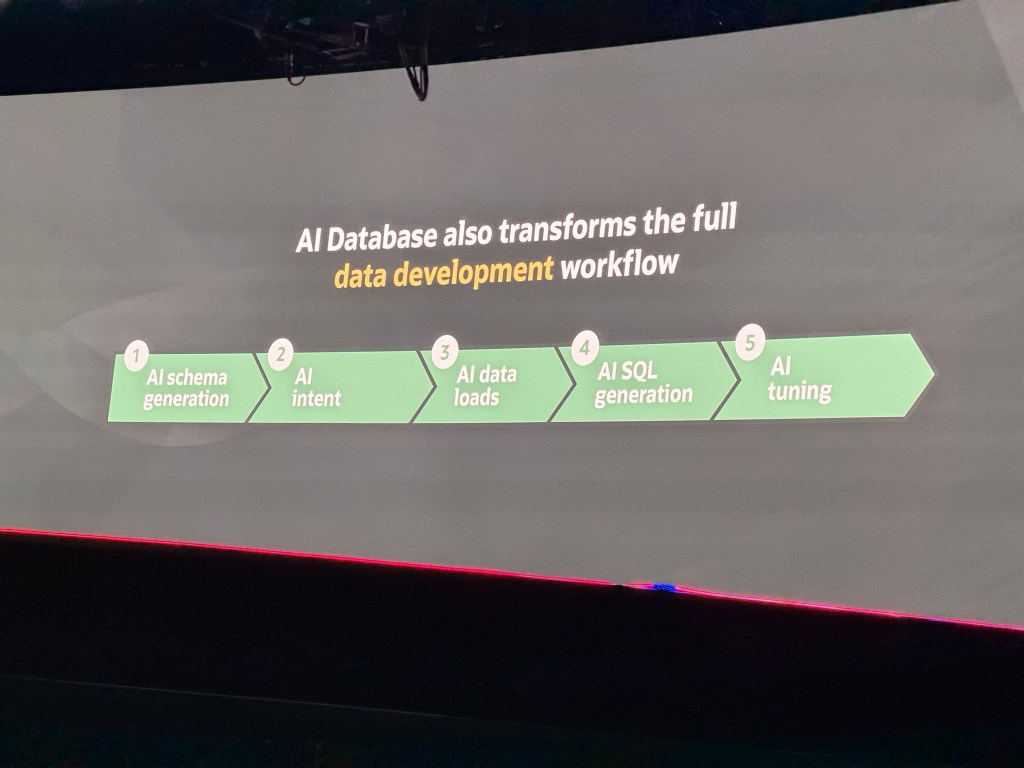

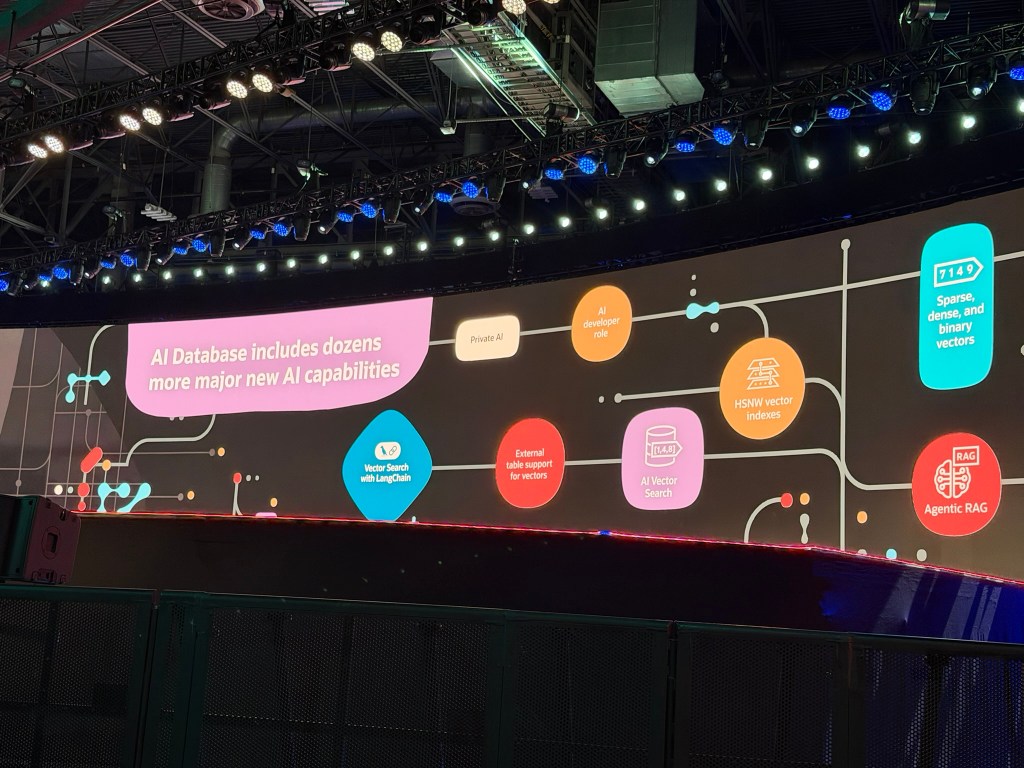

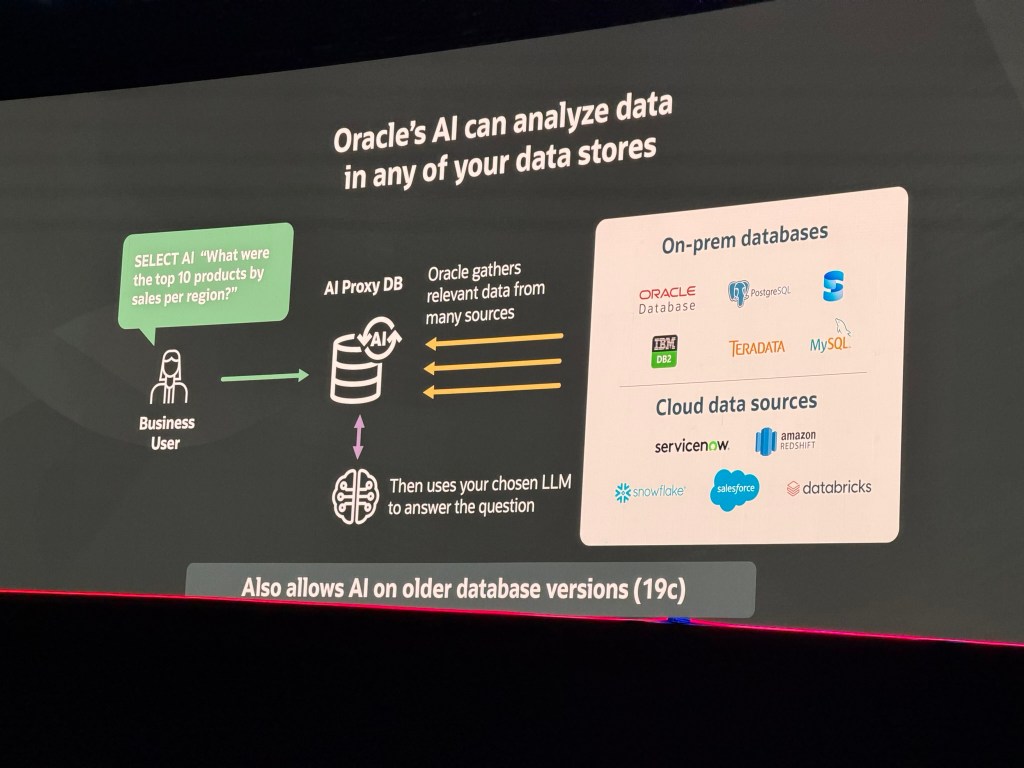

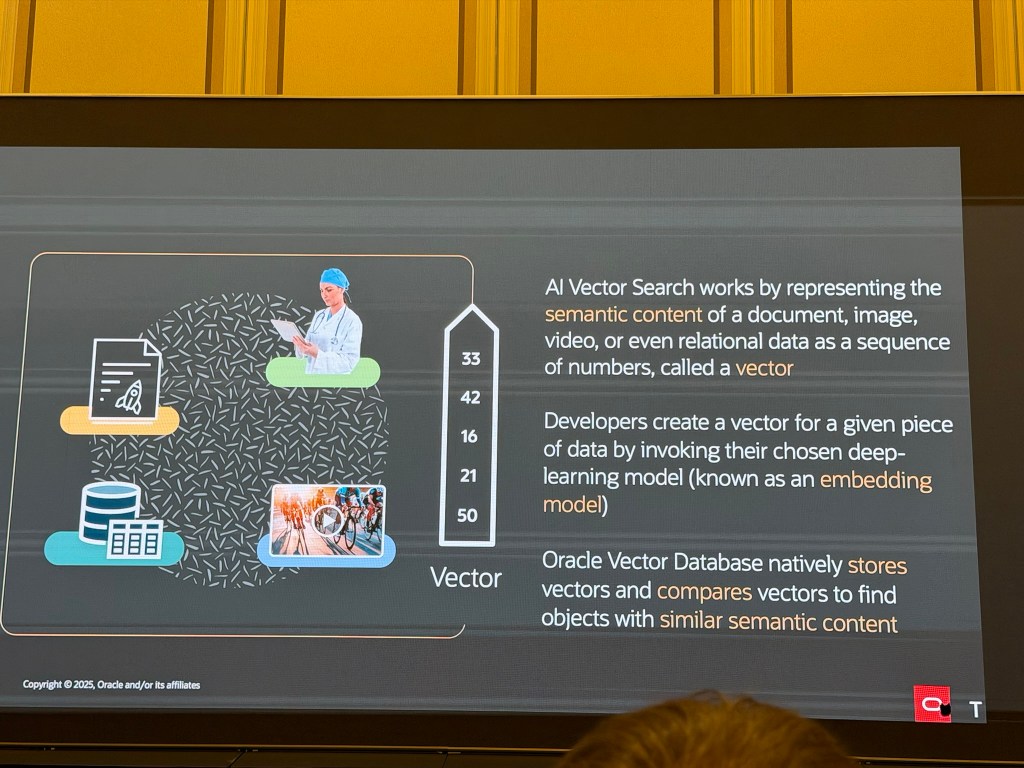

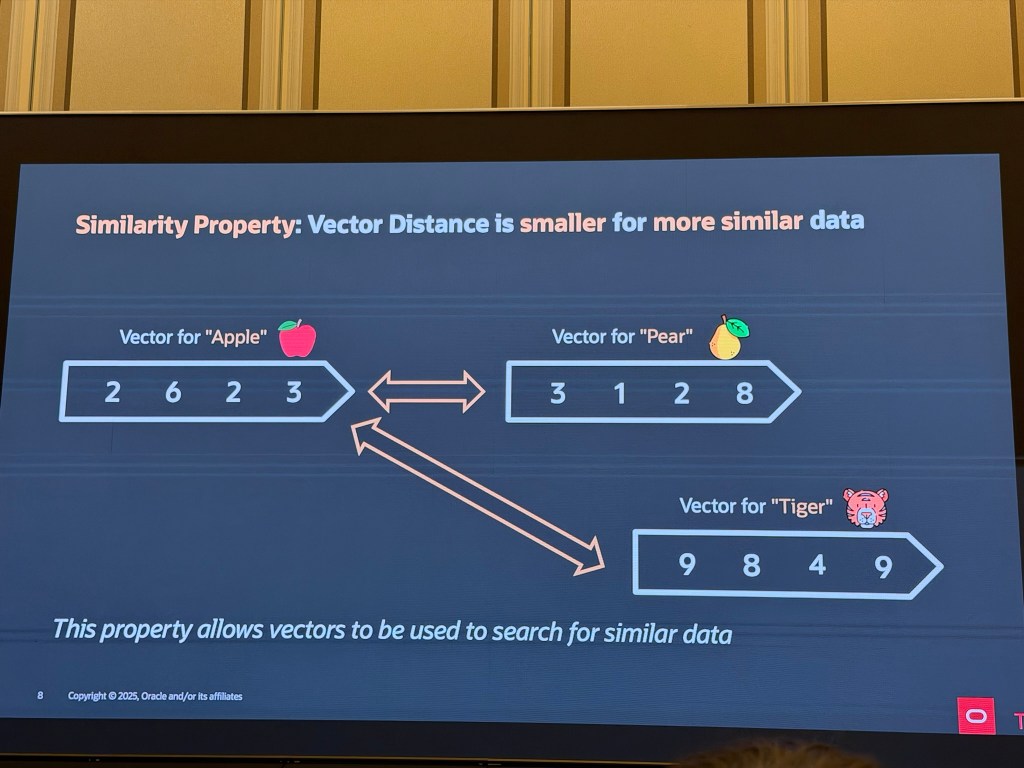

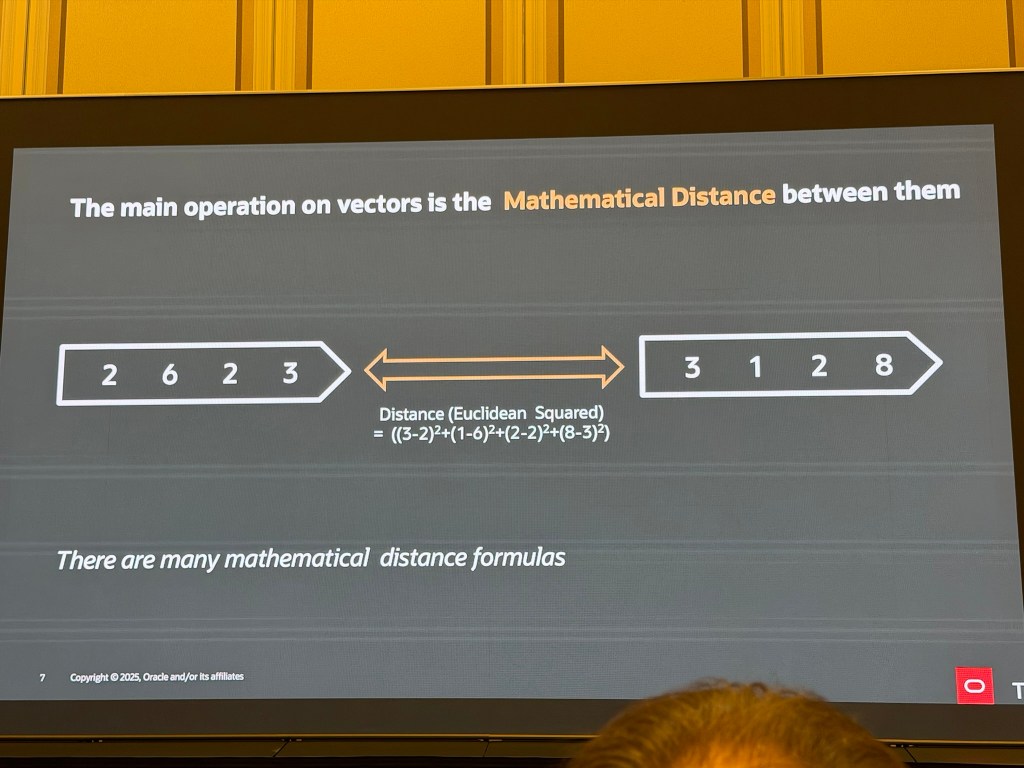

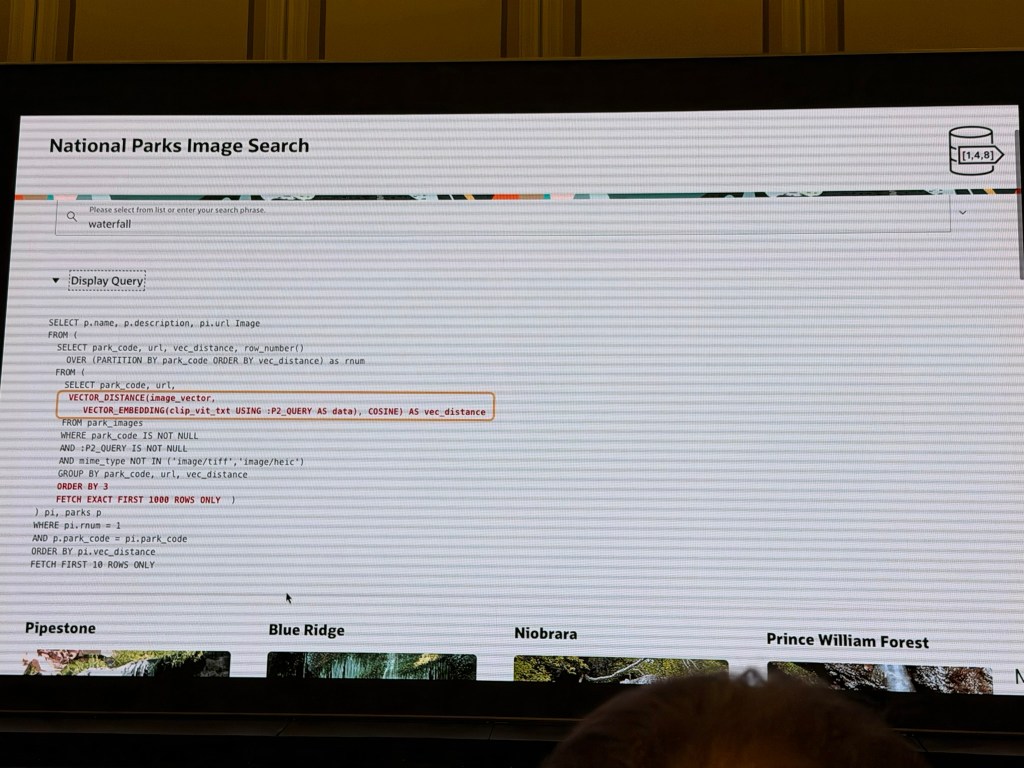

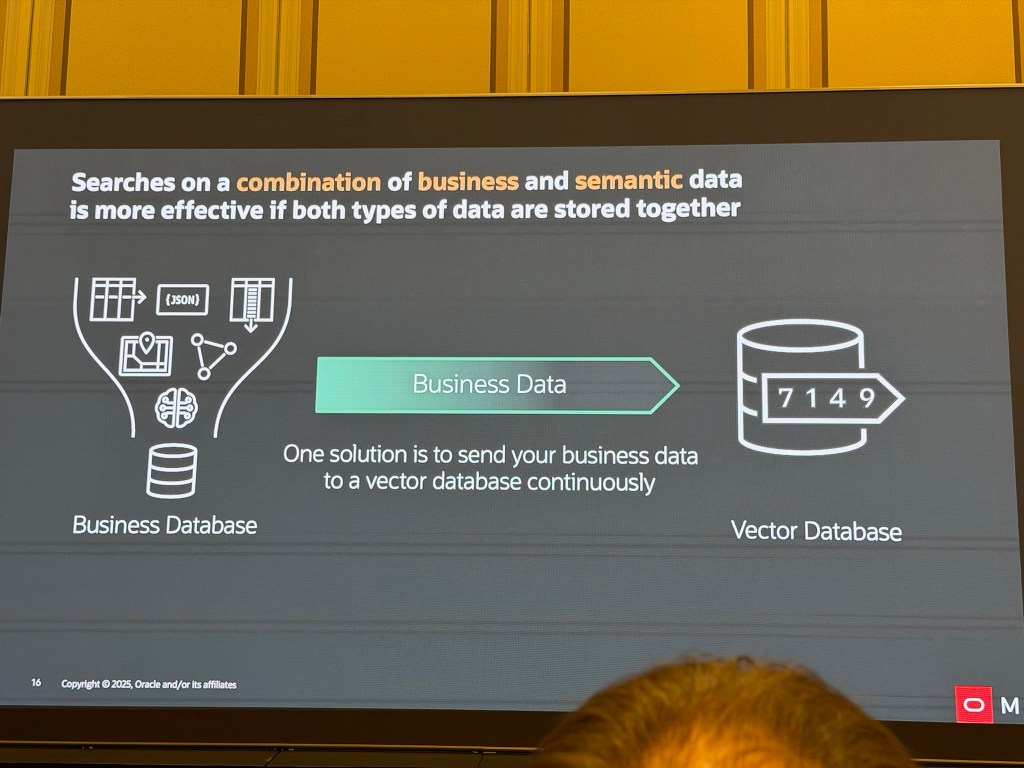

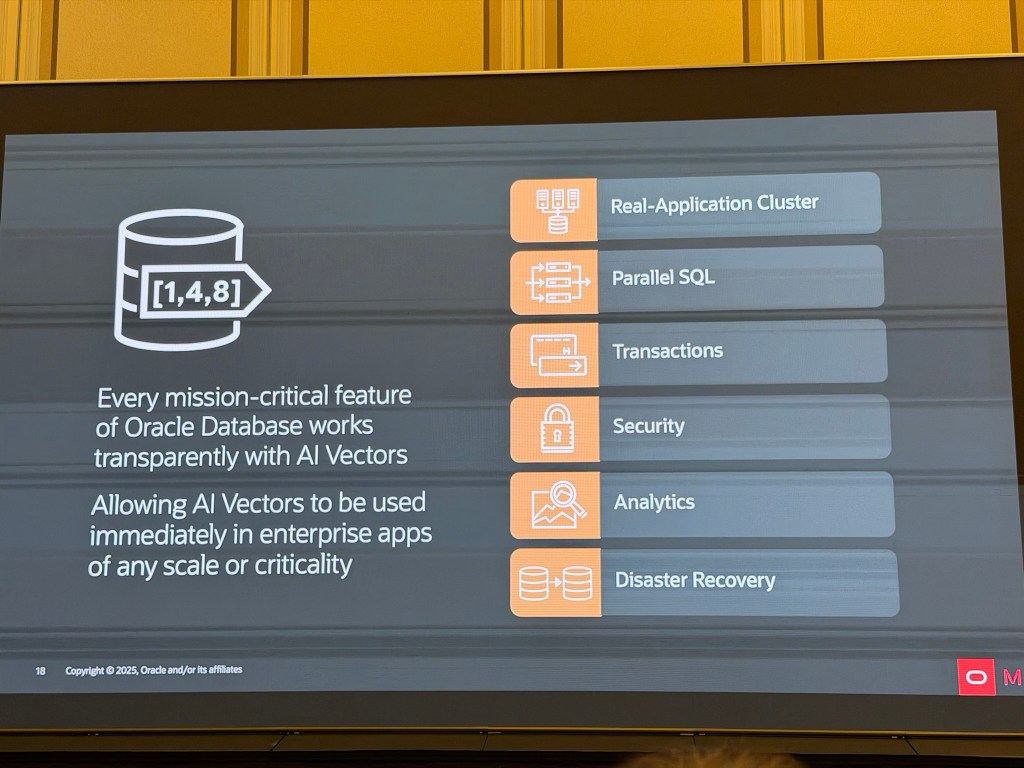

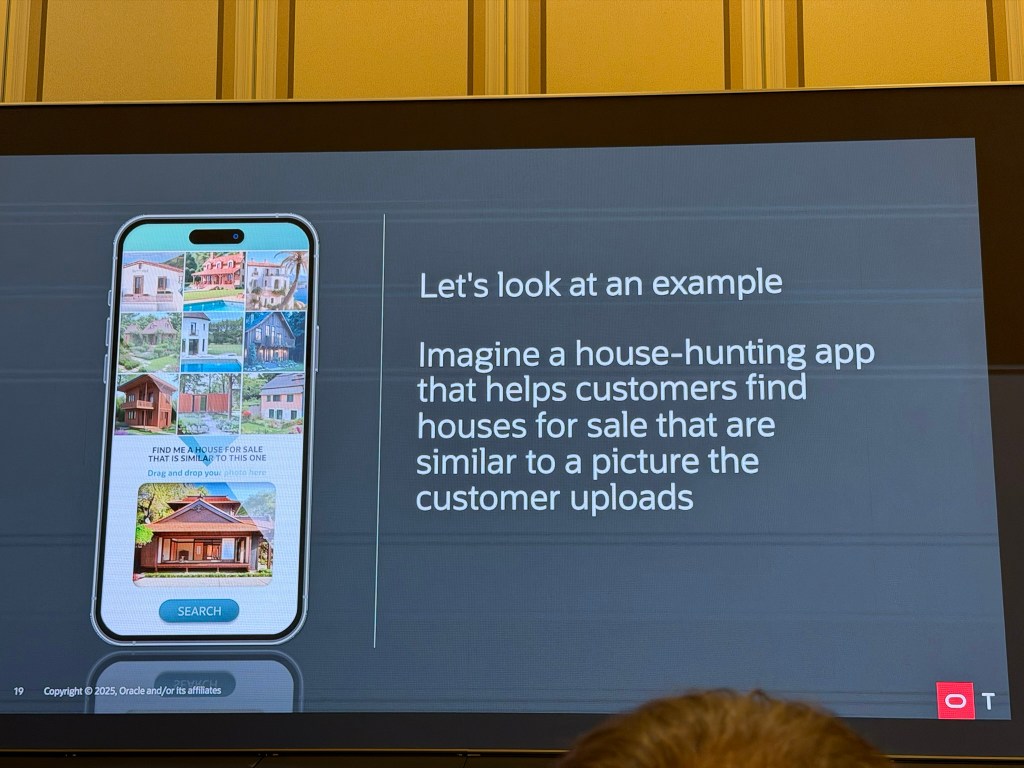

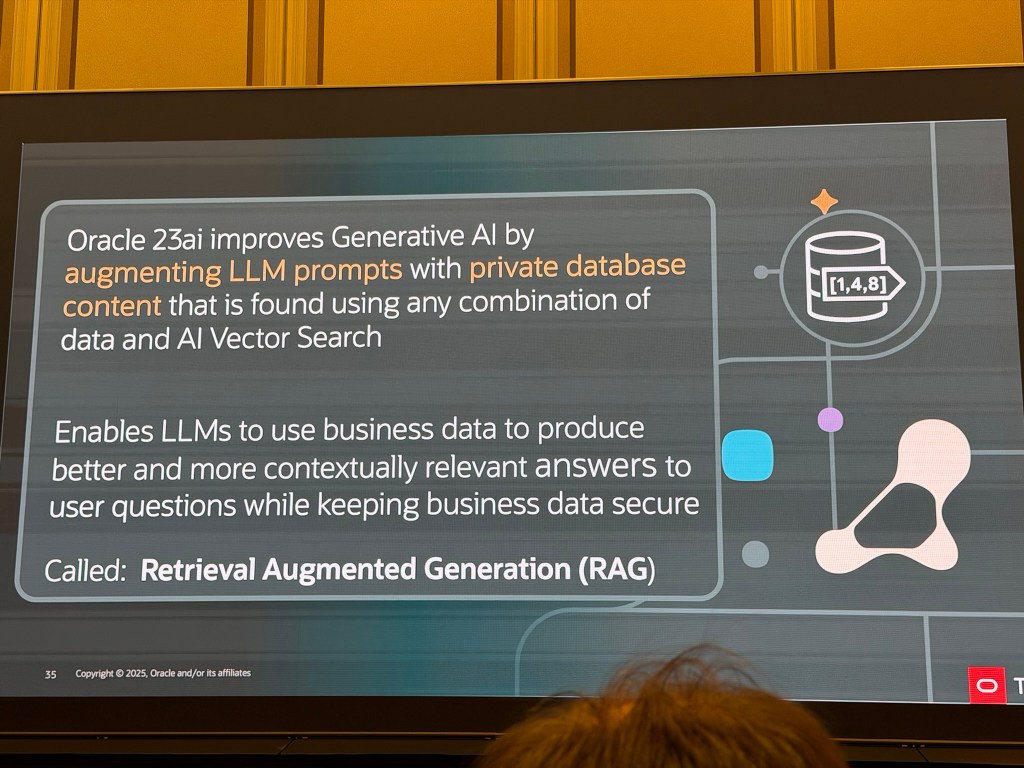

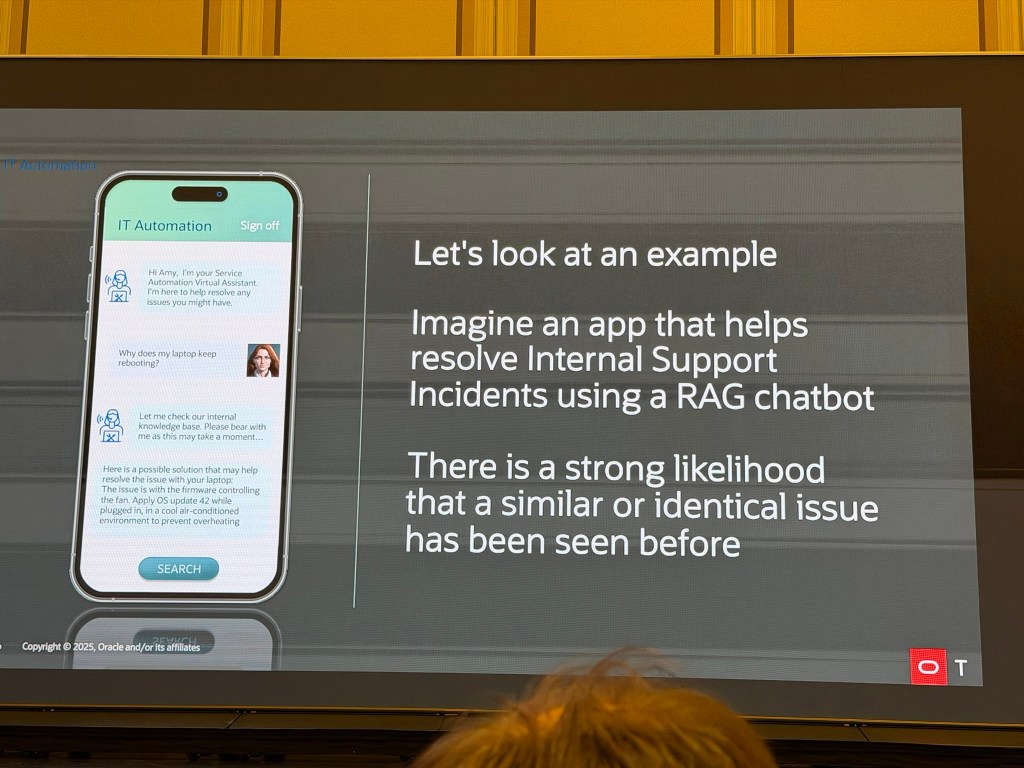

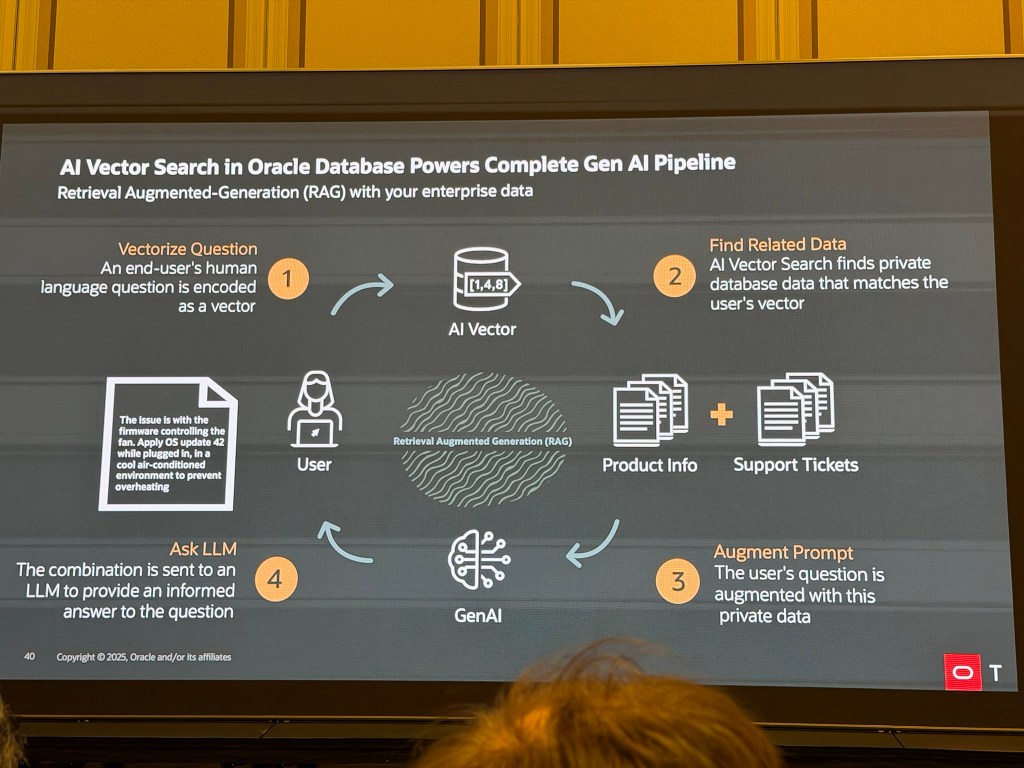

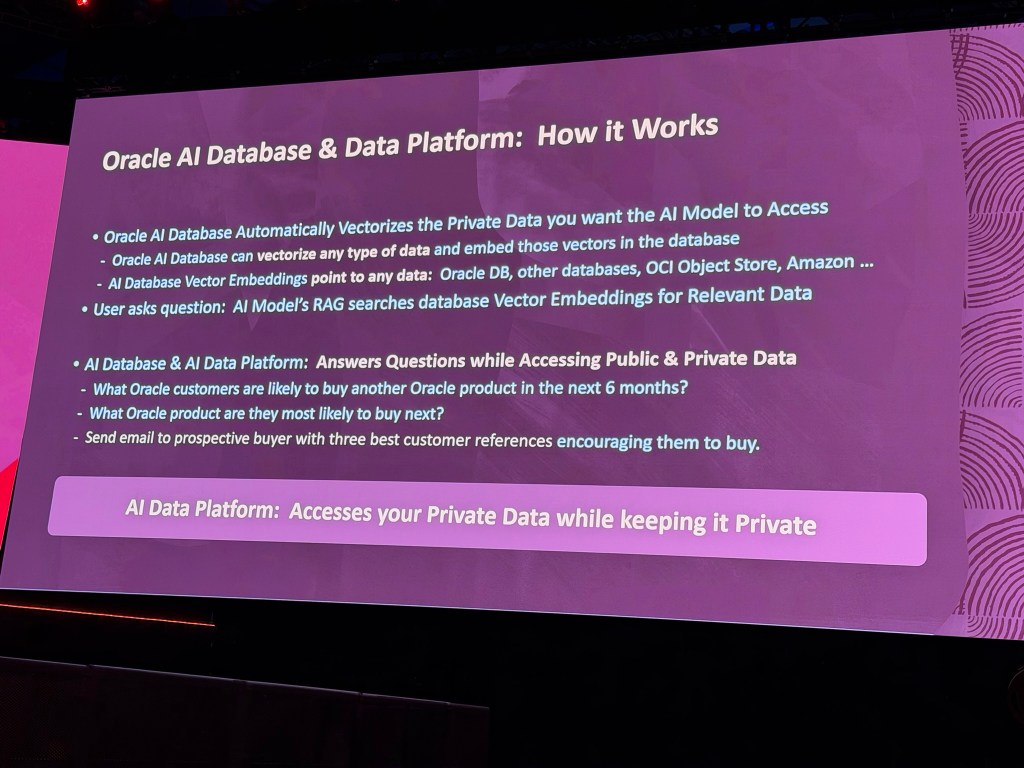

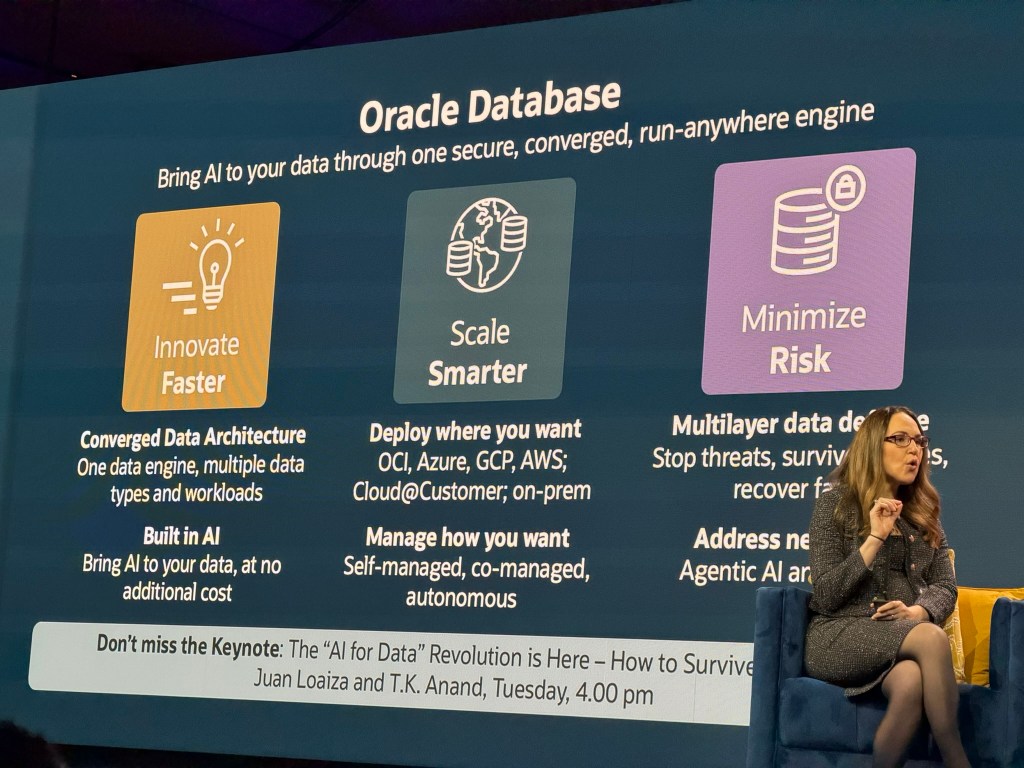

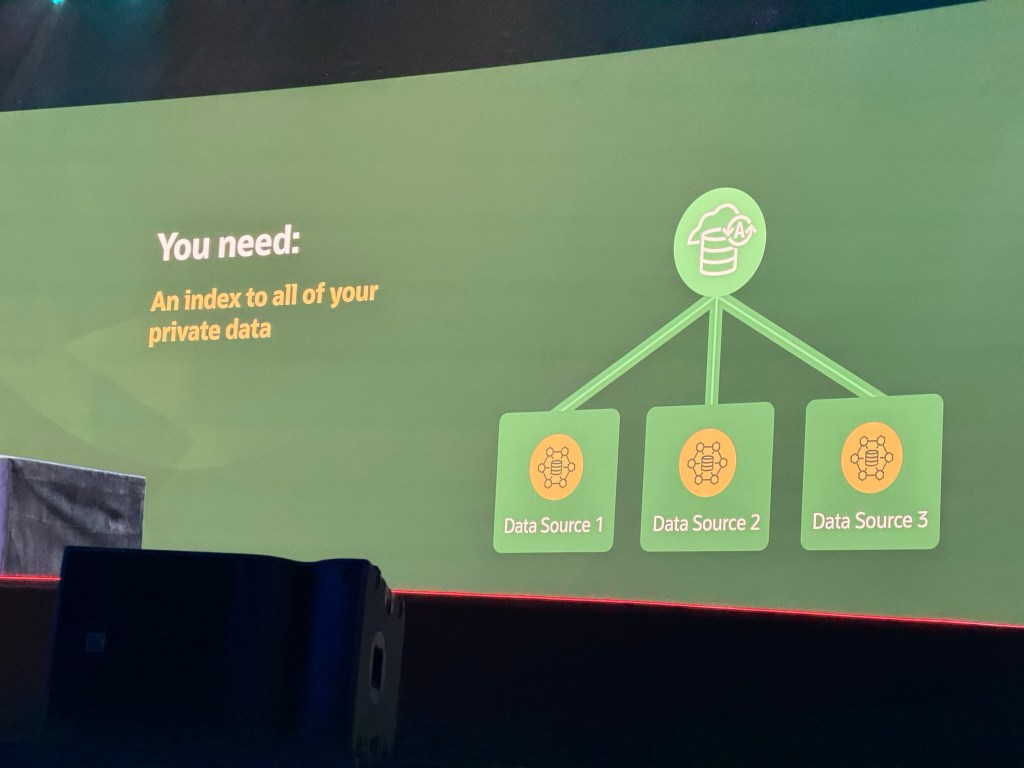

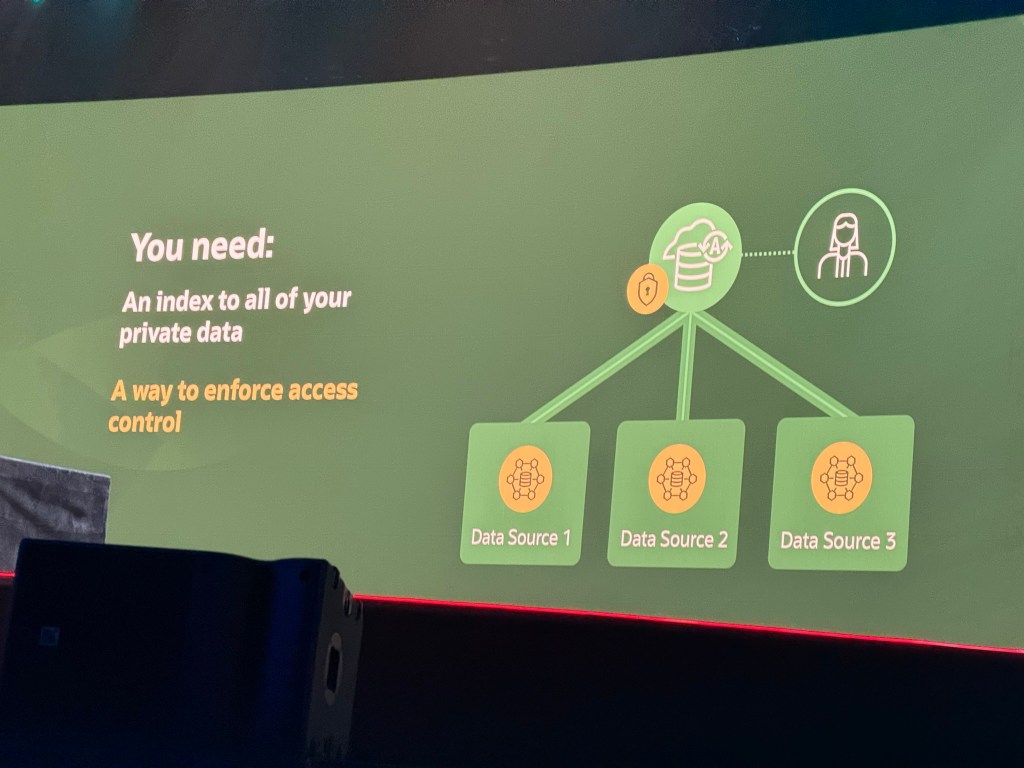

Your private data must be indexed, secured with enforced access controls, and integrated with Generative AI services, so that queries can be answered by combining public data with your private data, all powered by Oracle AI Database 26ai.
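The pattern described above (index private data, enforce access control, then hand relevant context to a model) can be sketched generically in plain Python. This does not use any Oracle API: the `Doc` class, the role names, the toy 2-D embeddings, and `build_prompt` are hypothetical, and cosine similarity stands in for a real vector index.

```python
import math
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List, Sequence


@dataclass(frozen=True)
class Doc:
    text: str
    embedding: Sequence[float]                             # toy vector; a real system uses a model
    allowed_roles: FrozenSet[str] = frozenset({"public"})  # "public" = visible to everyone


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query: Sequence[float], docs: Iterable[Doc], user_roles: Iterable[str], k: int = 3) -> List[Doc]:
    """Enforce access control first, then rank only the documents the user may see."""
    roles = set(user_roles) | {"public"}
    visible = [d for d in docs if d.allowed_roles & roles]
    visible.sort(key=lambda d: cosine(query, d.embedding), reverse=True)
    return visible[:k]


def build_prompt(question: str, context: List[Doc]) -> str:
    """Overlay public and private context the user is entitled to see on the question."""
    sources = "\n".join(f"- {d.text}" for d in context)
    return f"Answer using only these sources:\n{sources}\n\nQuestion: {question}"


if __name__ == "__main__":
    docs = [
        Doc("Public: Q3 market trends", (1.0, 0.0)),
        Doc("Private: our Q3 sales by region", (0.9, 0.1), frozenset({"sales"})),
        Doc("Private: HR salary bands", (0.95, 0.05), frozenset({"hr"})),
    ]
    context = retrieve((1.0, 0.0), docs, user_roles={"sales"})
    print(build_prompt("How did our Q3 sales compare with the market?", context))
```

Filtering before ranking is the important design choice here: documents a user is not entitled to see never reach the ranking step, so they can never leak into the prompt sent to the model.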

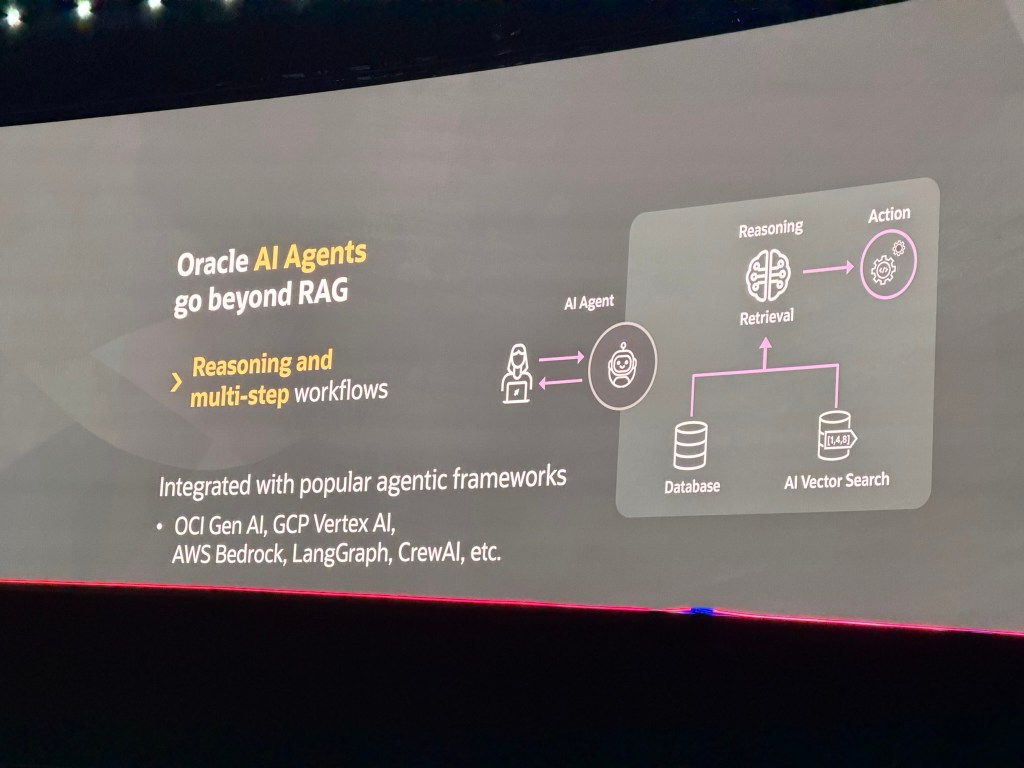

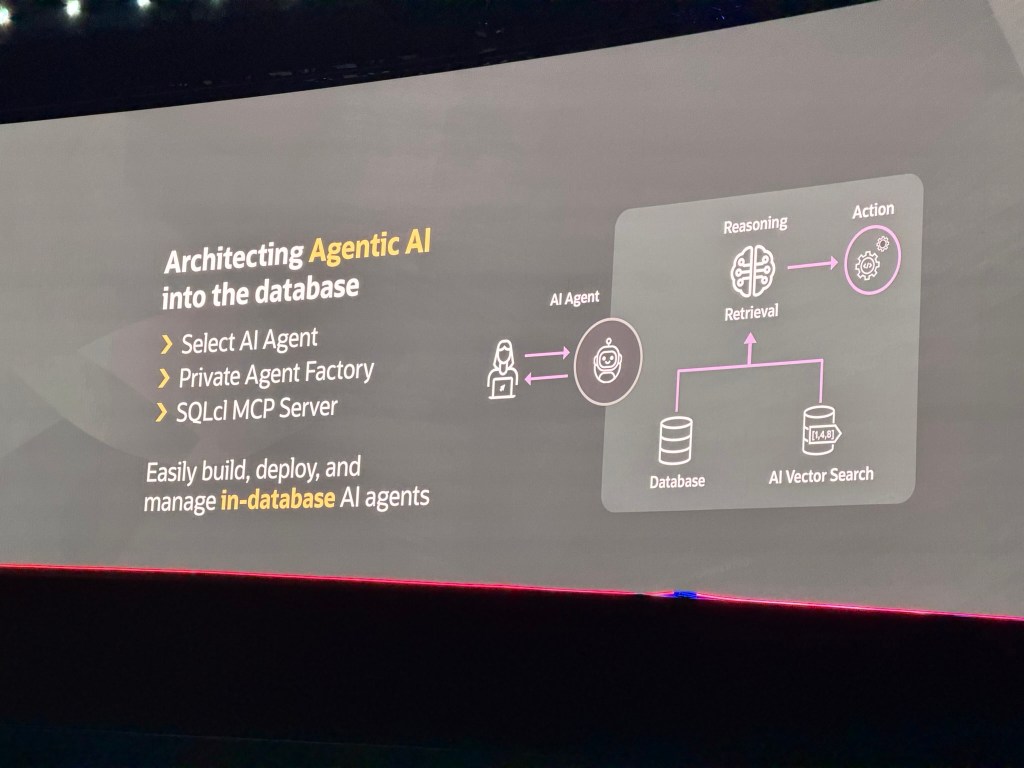

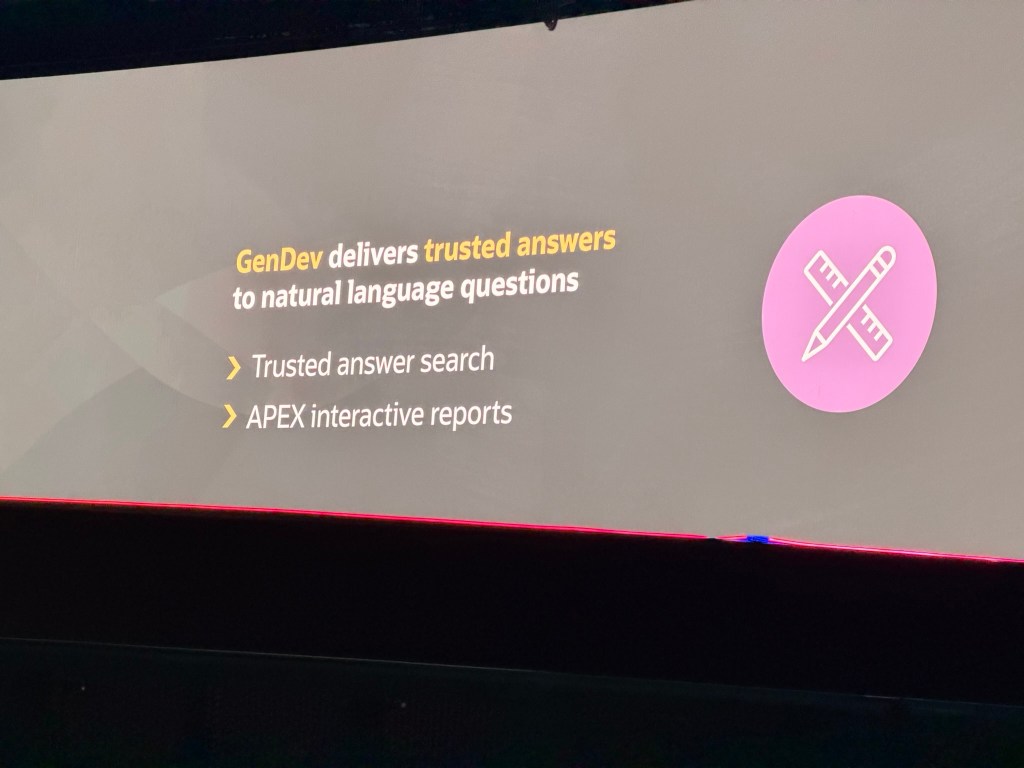

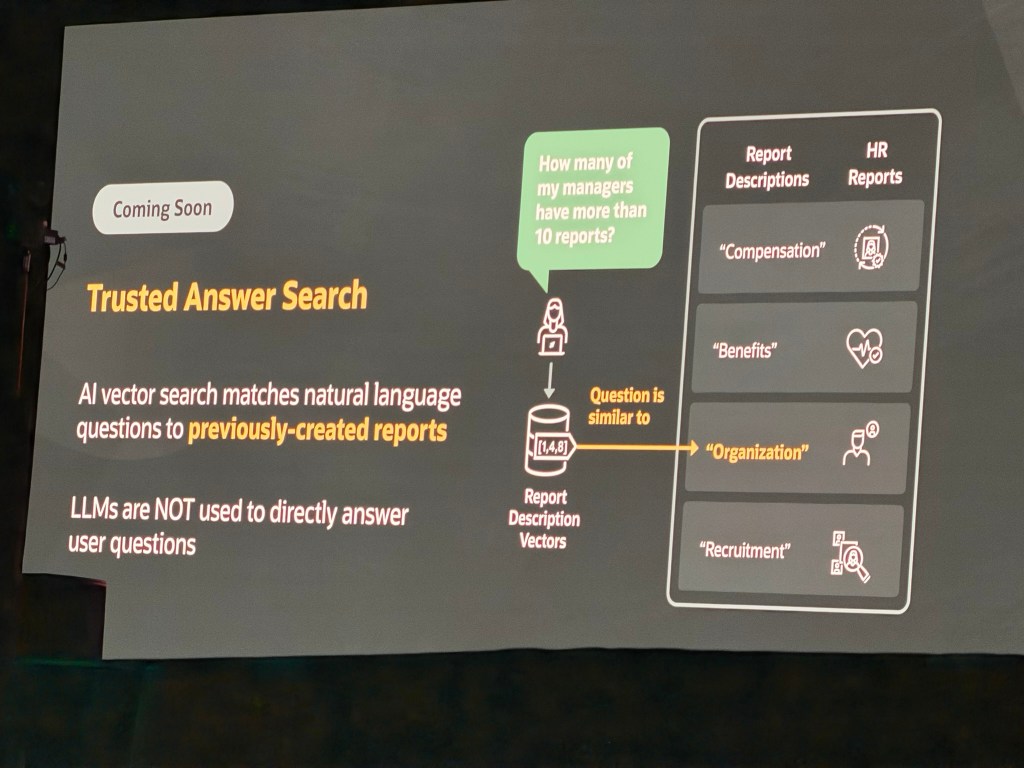

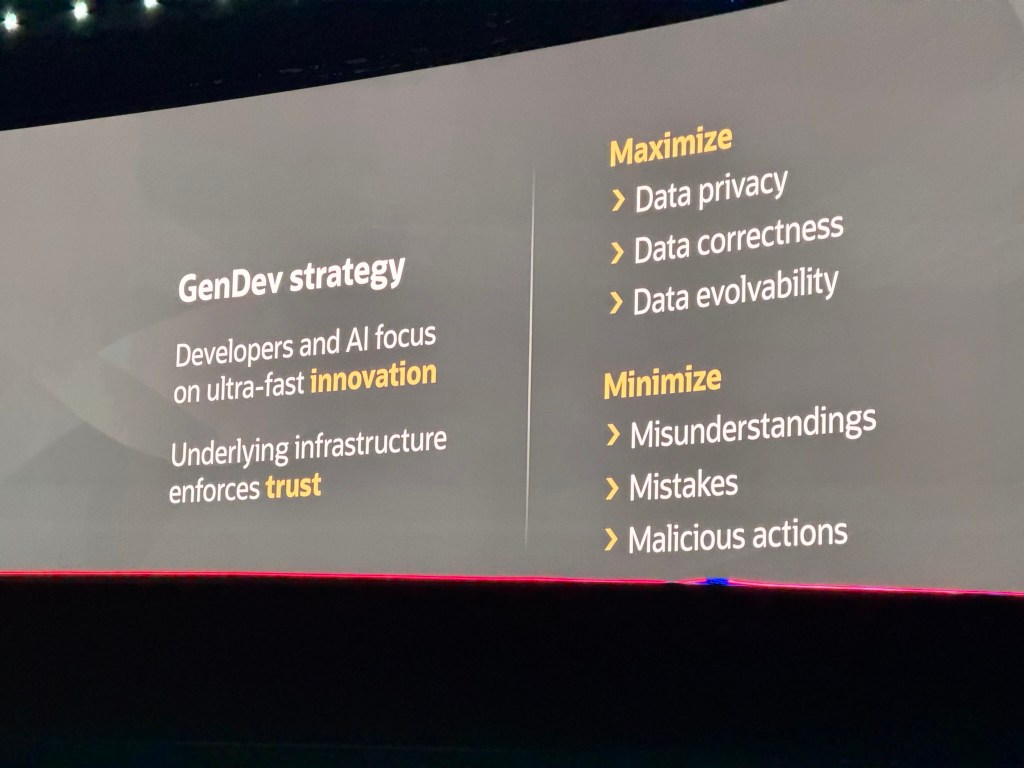

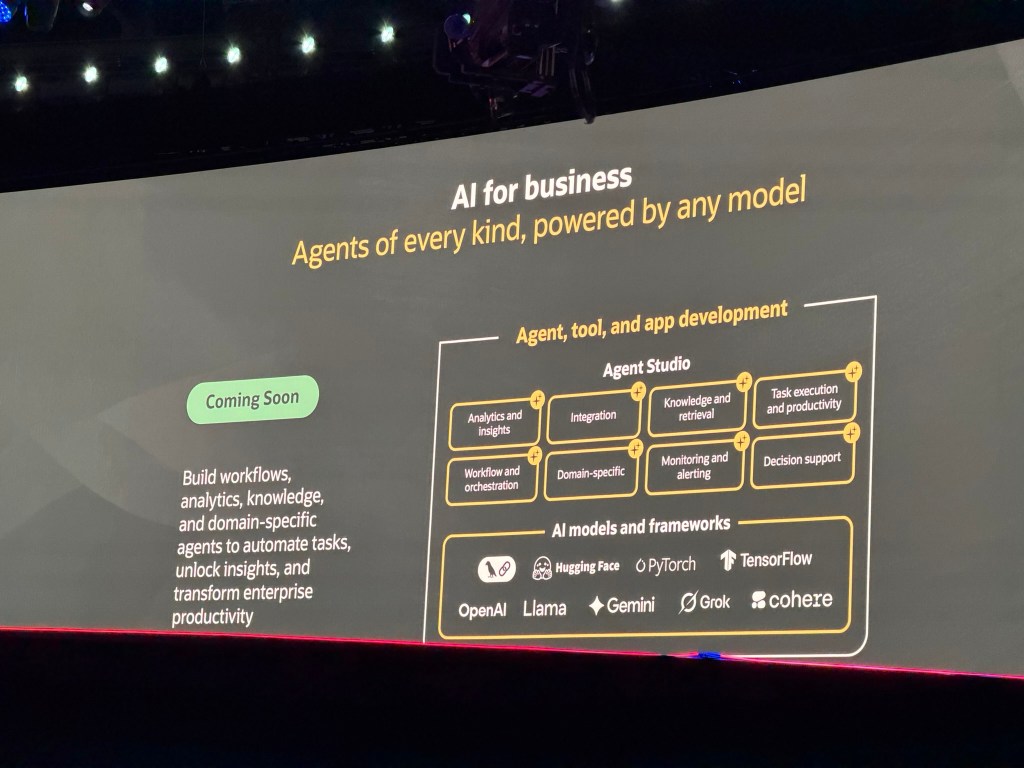

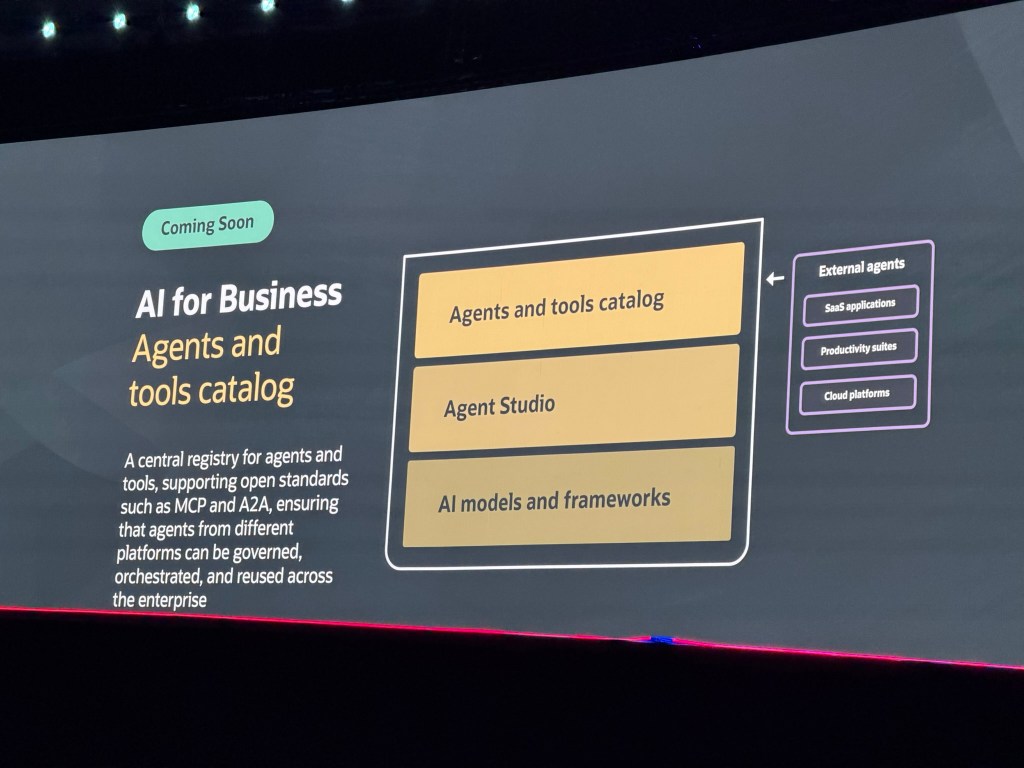

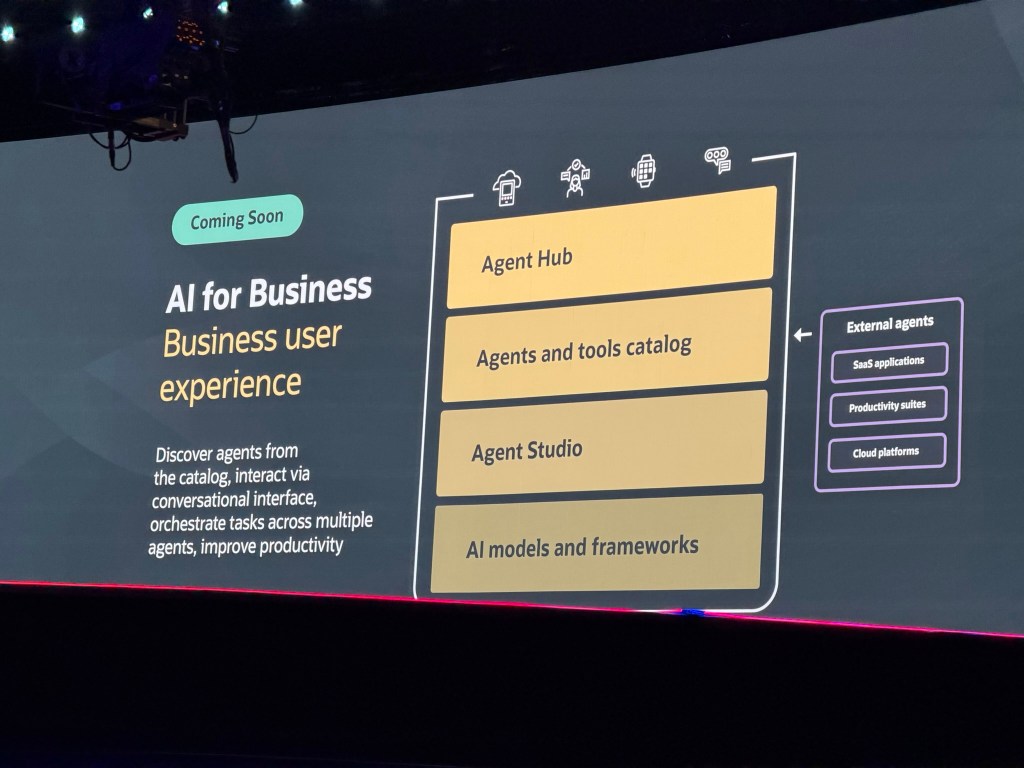

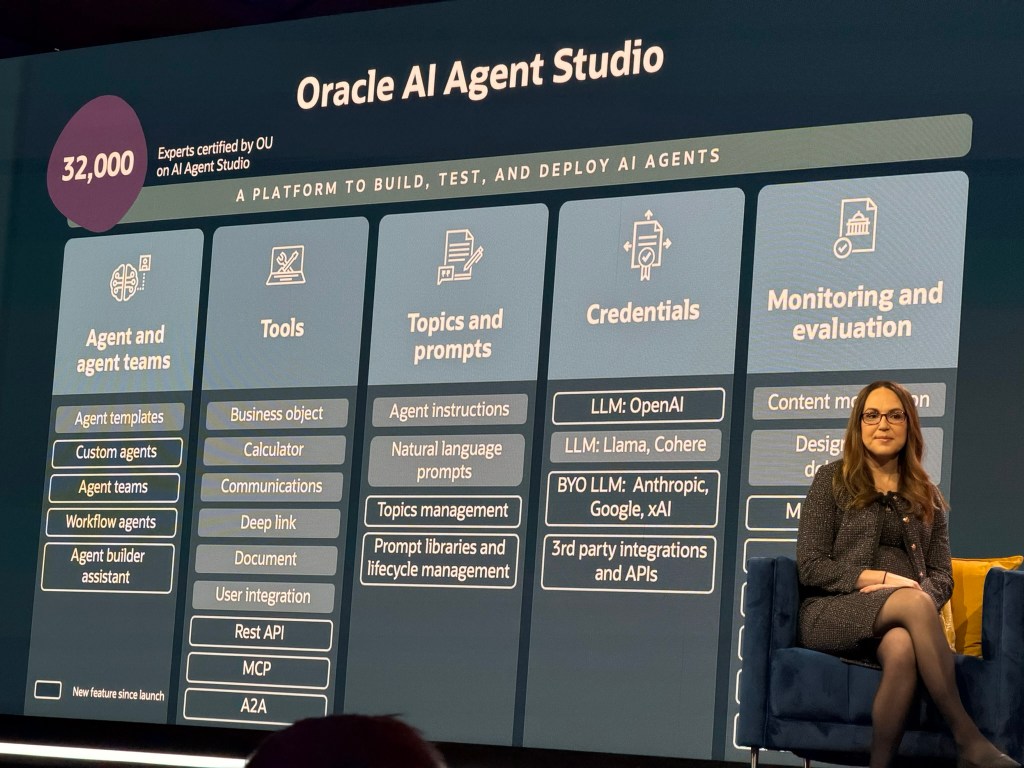

Clay also introduced the GenAI Agent Platform, which offers managed, pre-built agents for Retrieval-Augmented Generation (RAG), SQL, and code generation. It supports open-source frameworks such as LangChain, LangGraph, Semantic Kernel, AutoGen, and CrewAI, and provides full-stack integration with Oracle Fusion, Oracle Analytics Cloud (OAC), NetSuite, and Identity Provider (IDP) services.

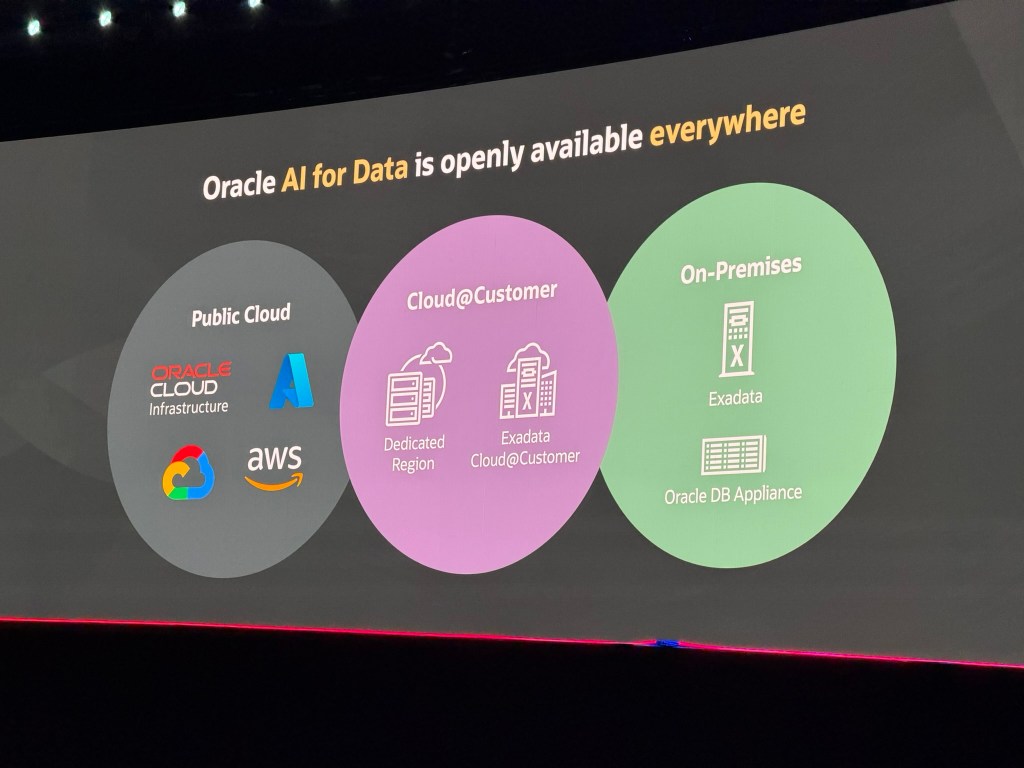

All of this is available wherever you need it, whether in any cloud (via Oracle Database @ Multicloud) or in your own data centre.

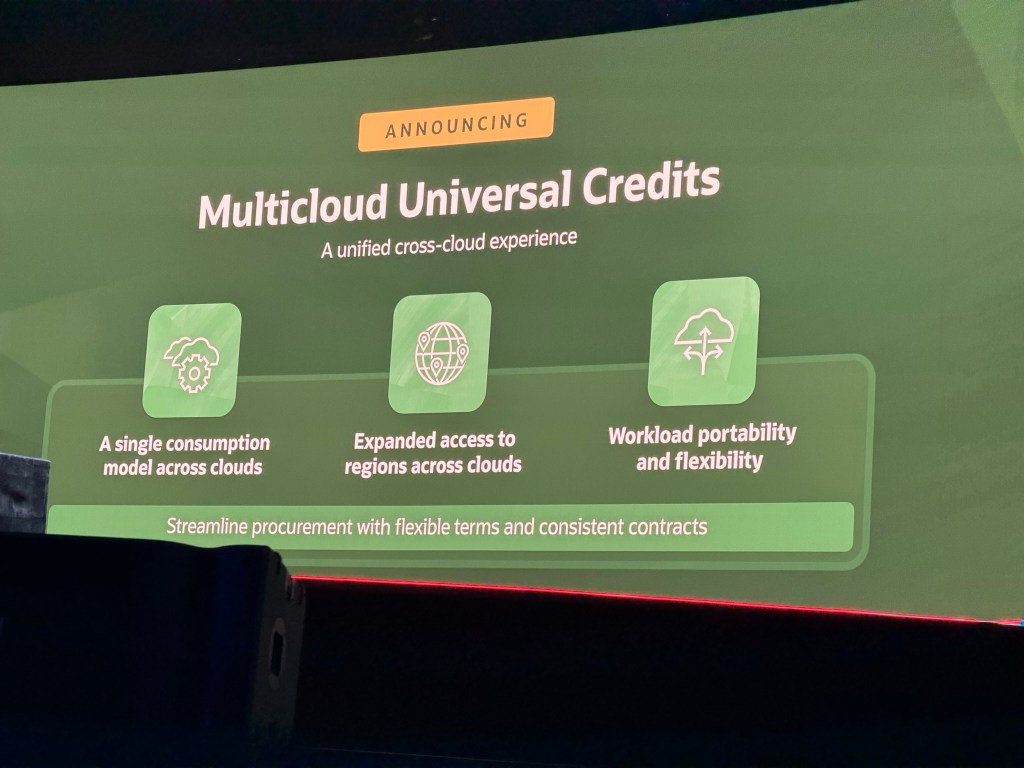

With this, he announced Oracle’s Multicloud Universal Credits, which enable a single consumption model across all clouds: pay Oracle once and use Oracle anywhere. This is especially appealing for multicloud customers seeking workload portability and the flexibility to apply credits wherever they need them. More info here.

Clay’s final announcement was the General Availability (GA) of Dedicated Region 25, originally announced last year, as highlighted in my blog here.

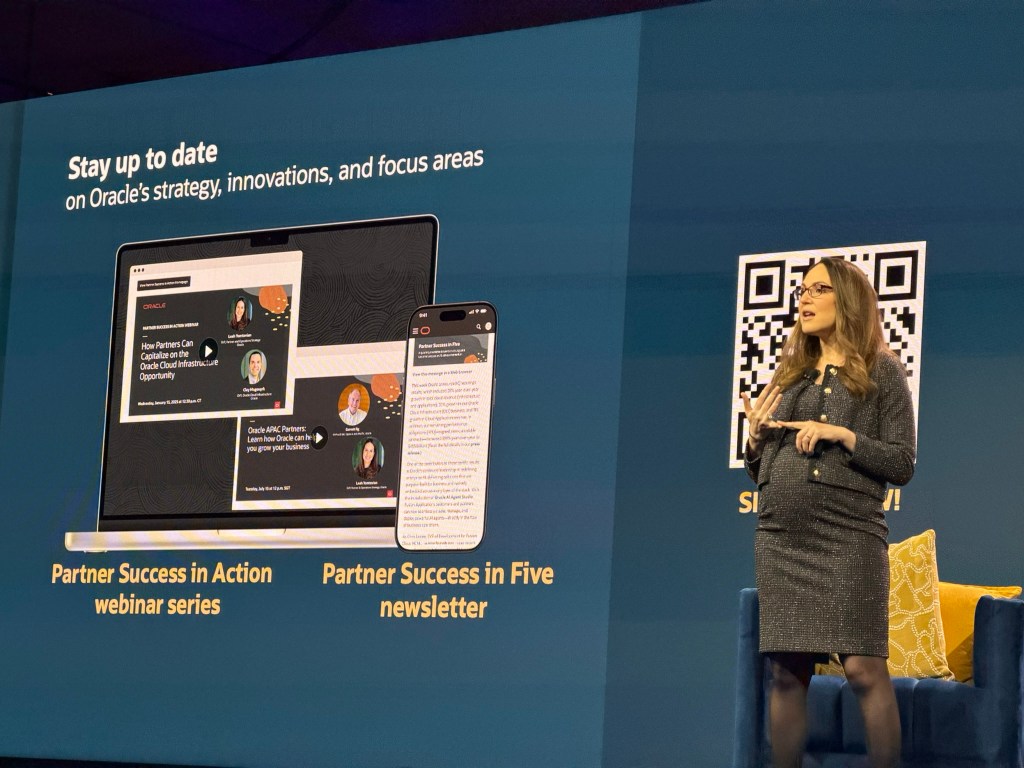

If you found this blog post useful, please like as well as follow me through my various Social Media avenues available on the sidebar and/or subscribe to this Oracle blog via WordPress/e-mail.

Thanks

Zed DBA (Zahid Anwar)