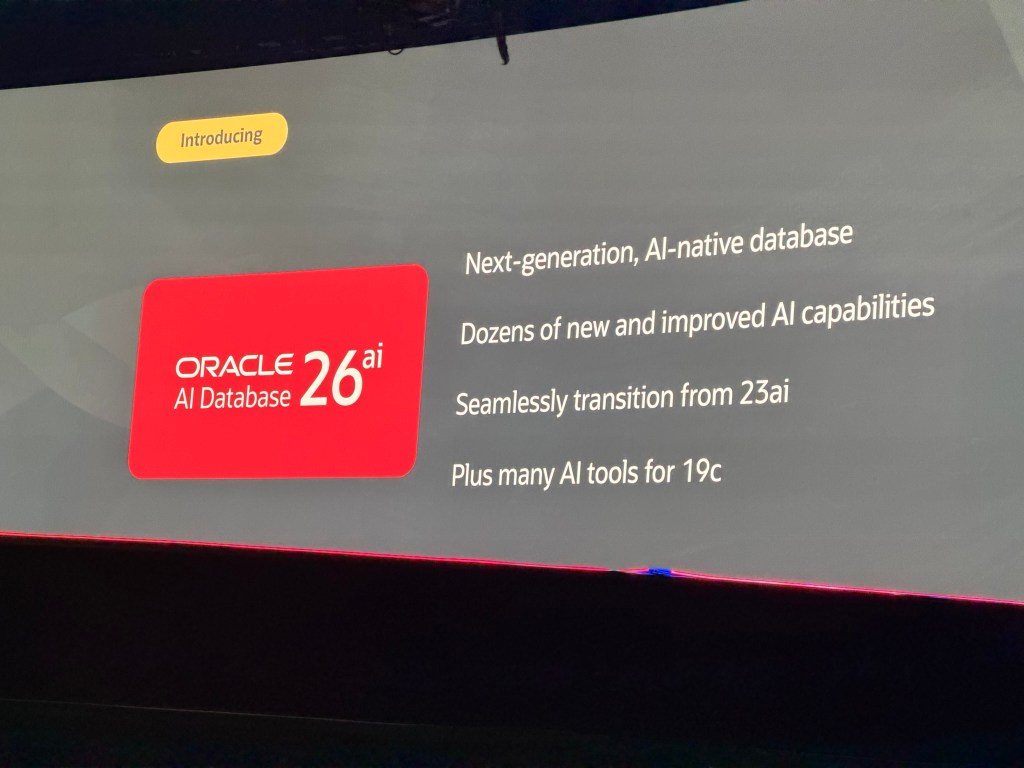

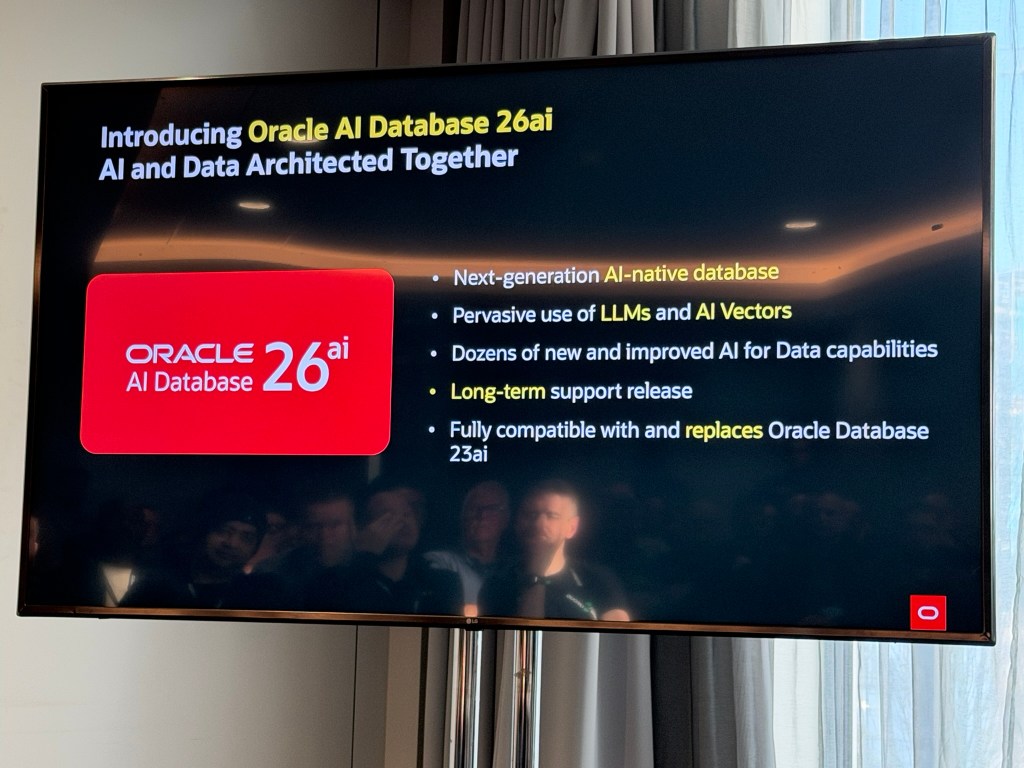

Announcement of Oracle AI Database 26ai

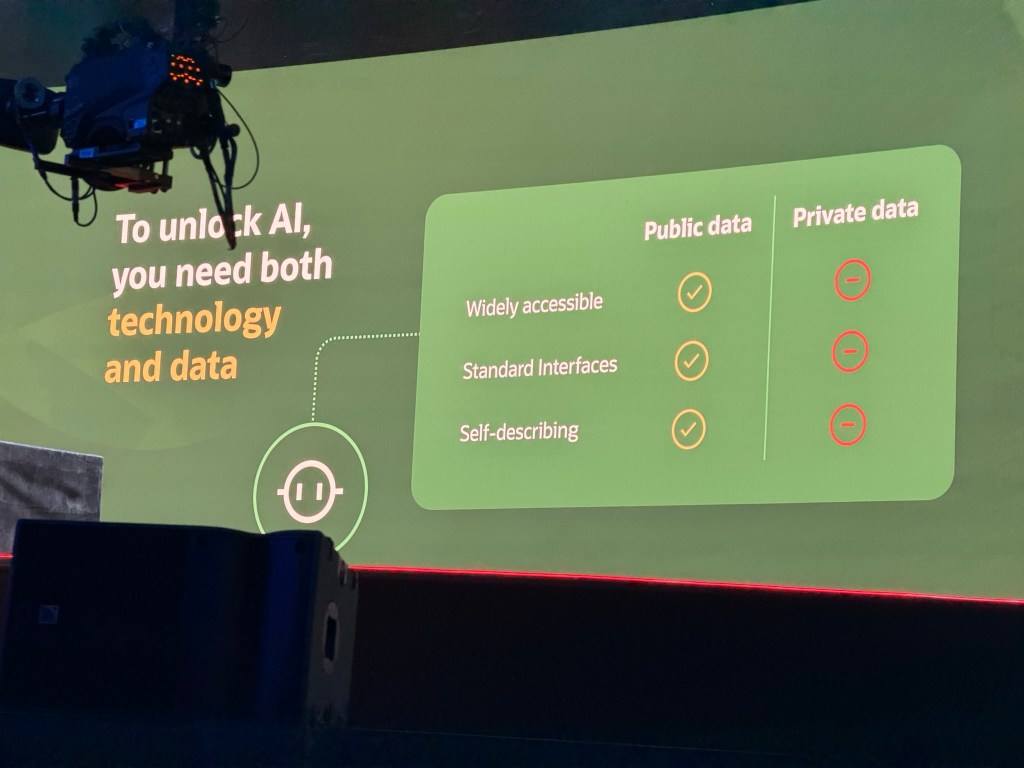

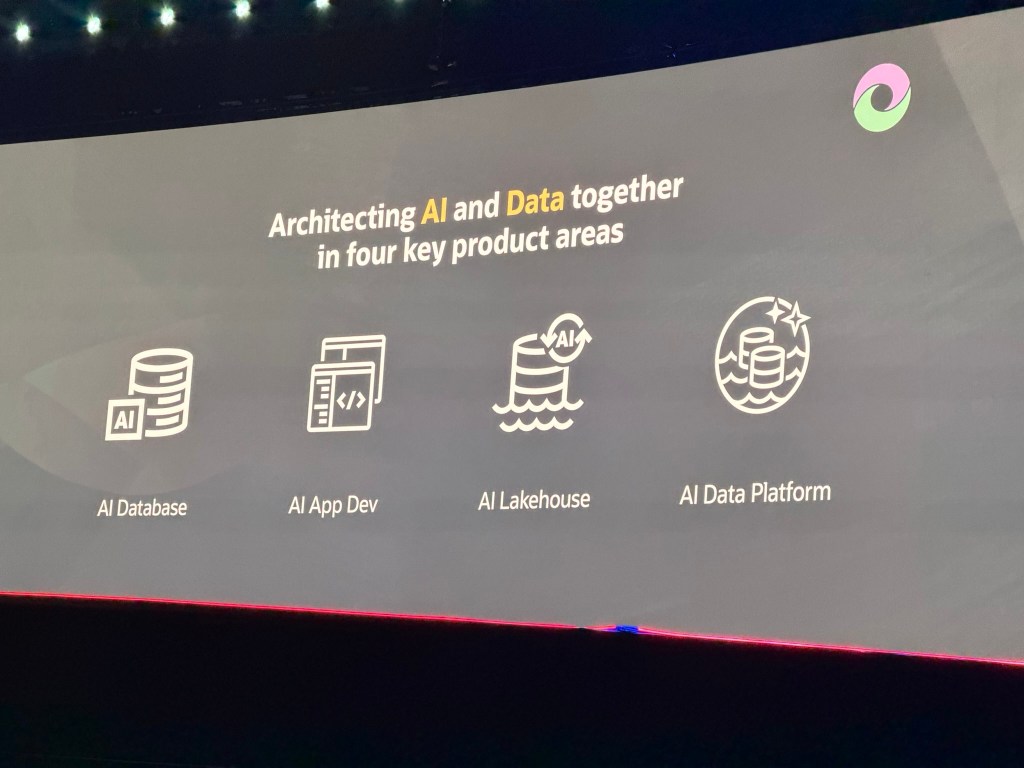

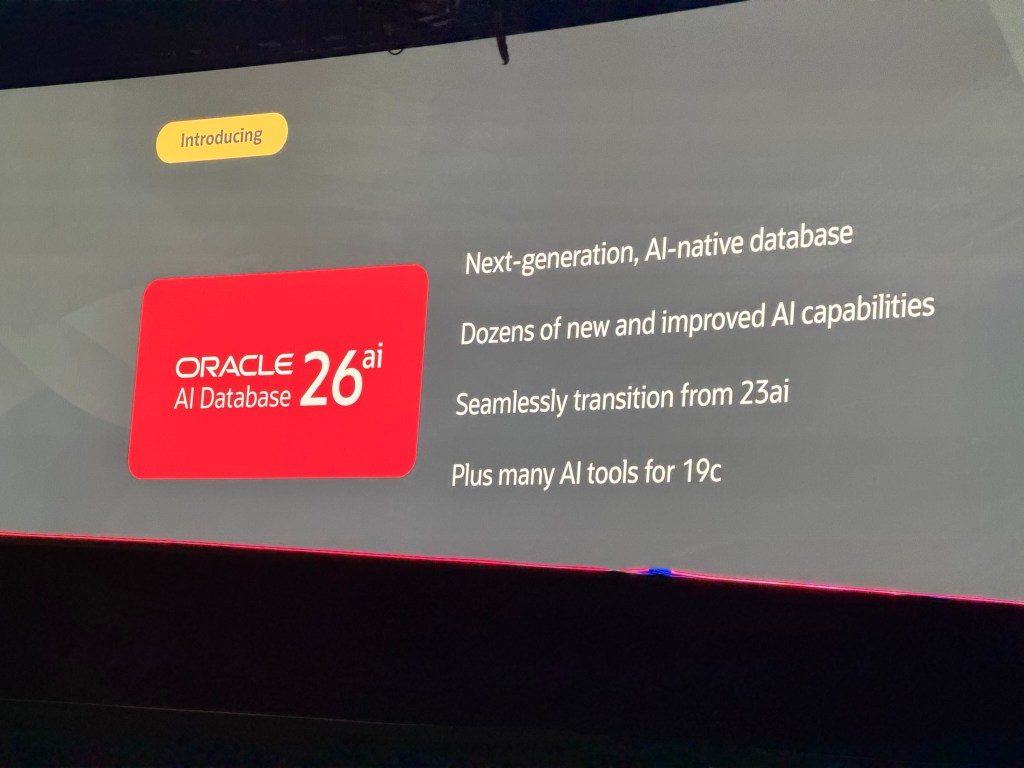

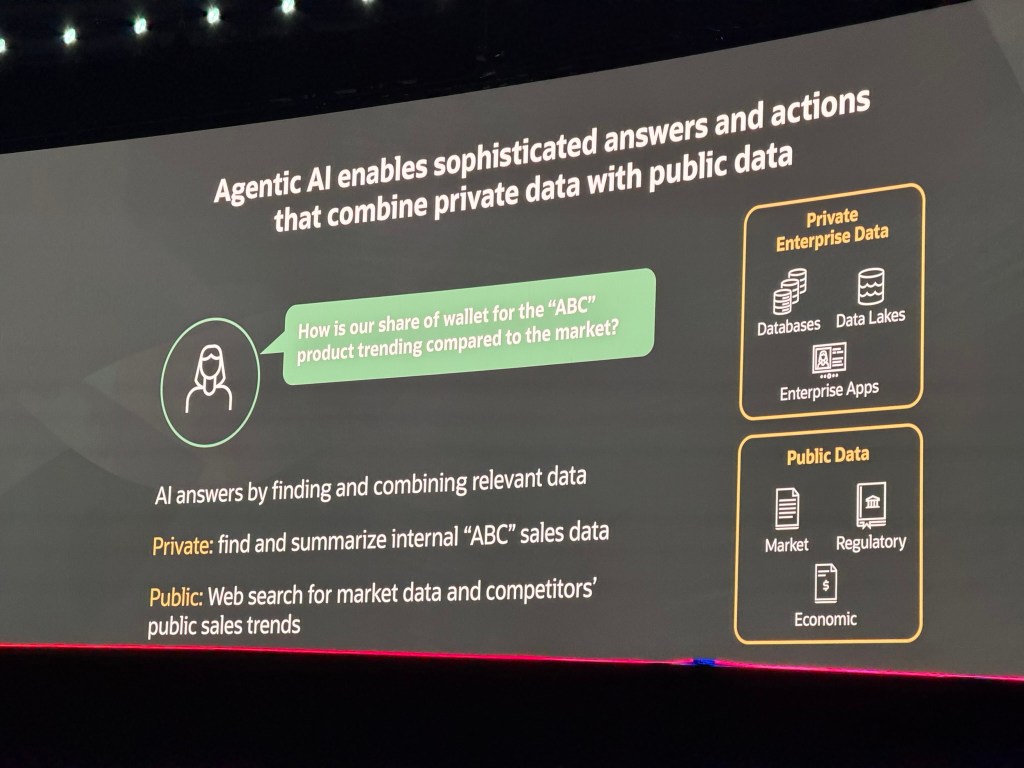

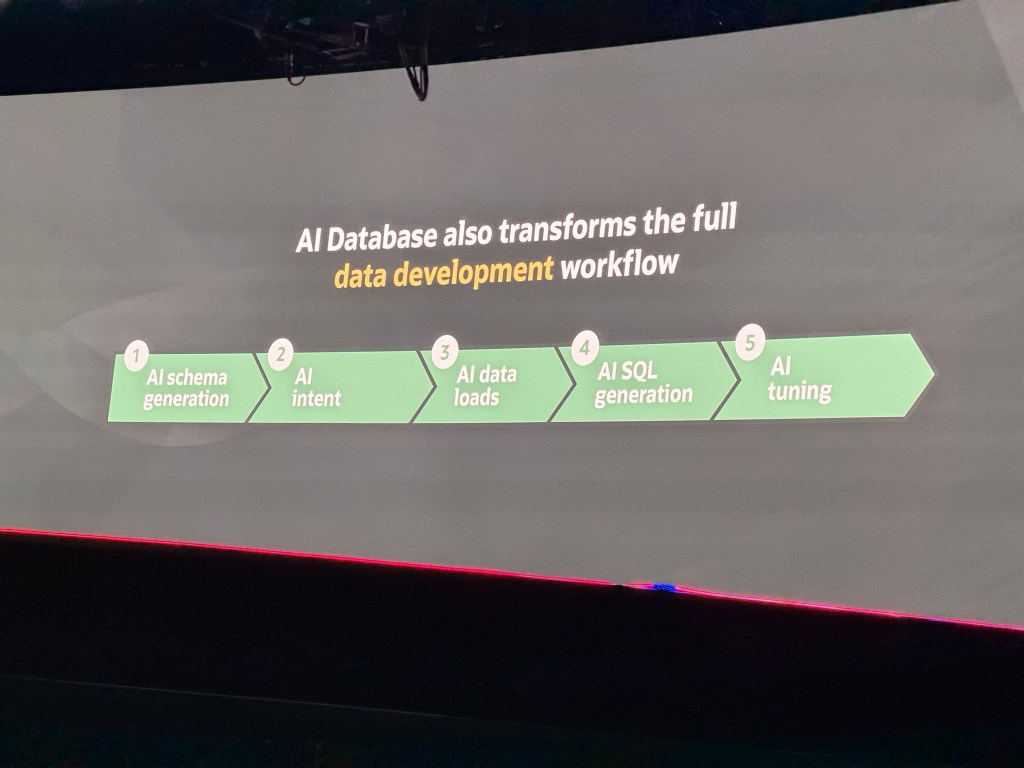

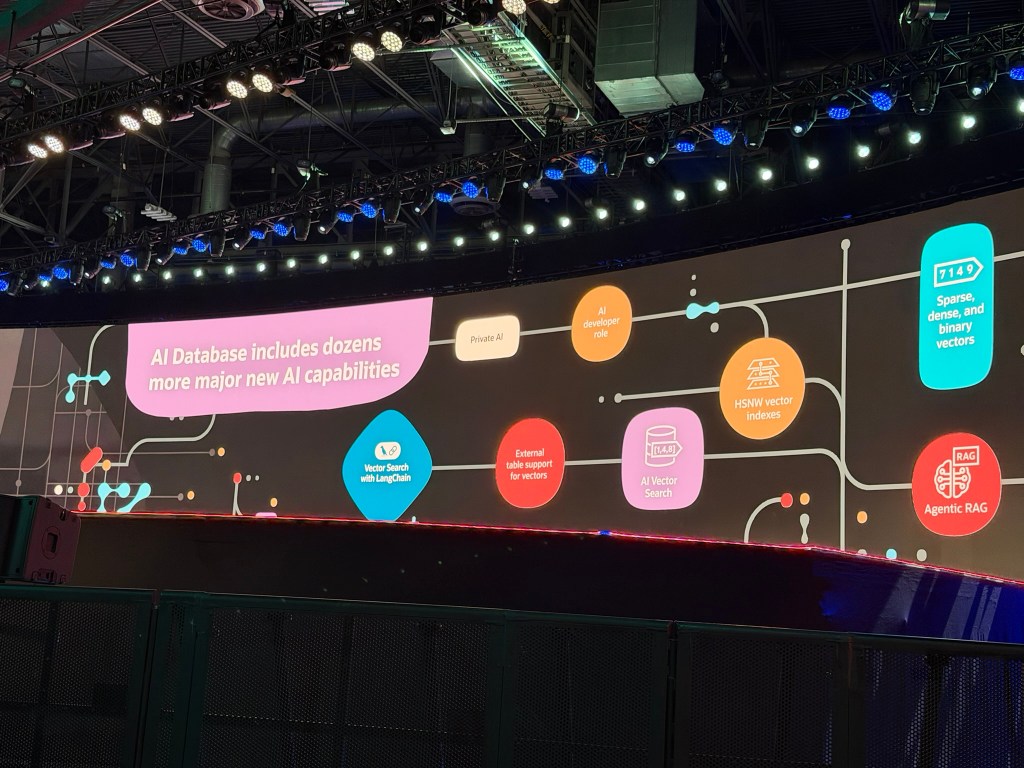

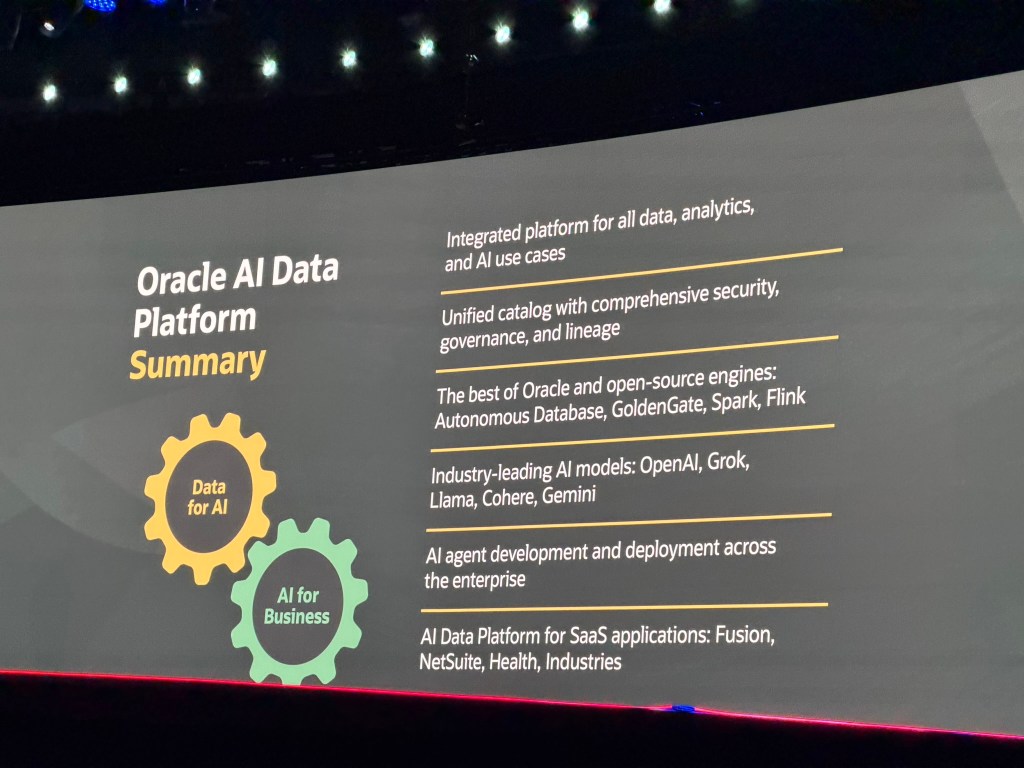

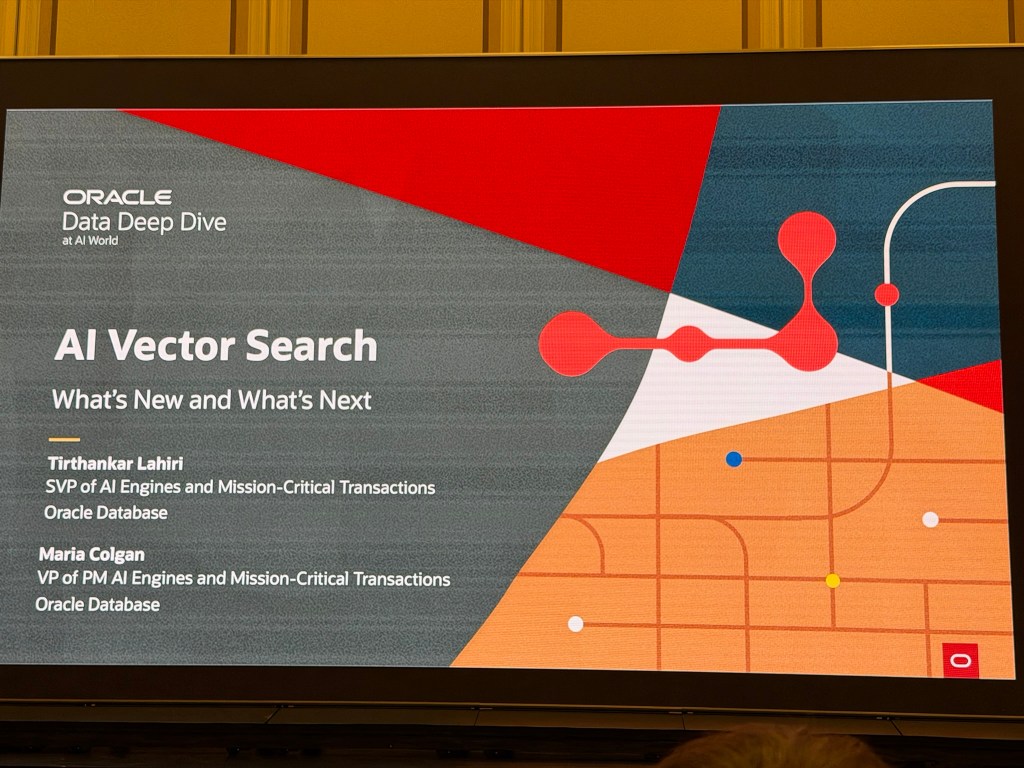

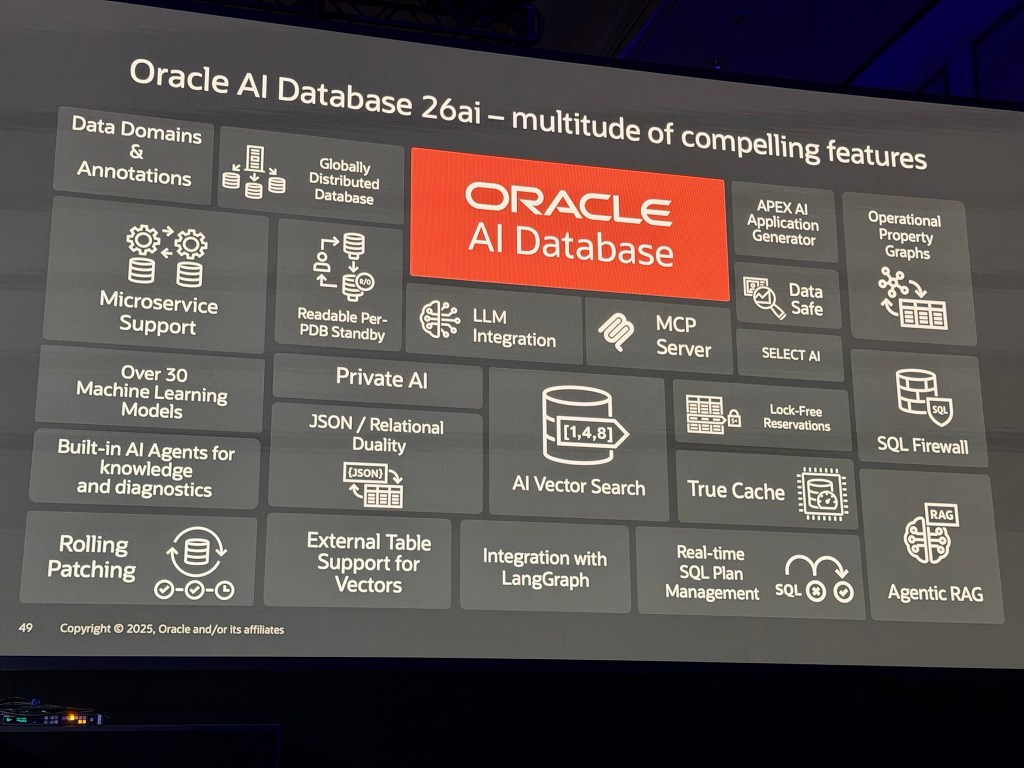

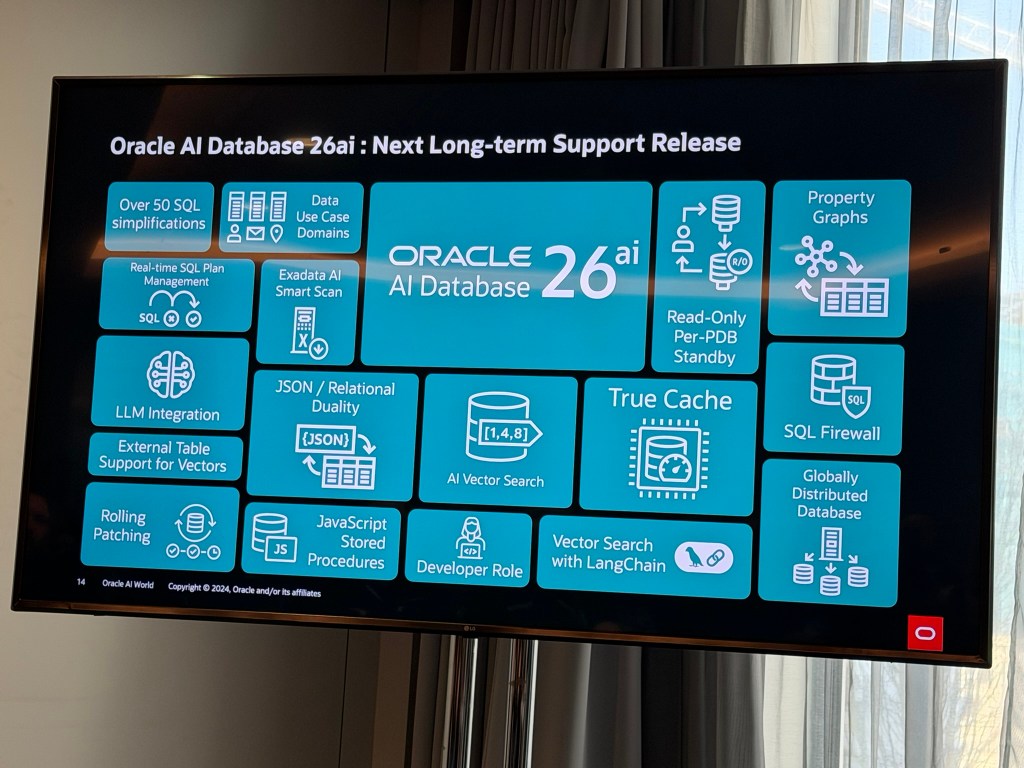

At Oracle AI World 2025, one of the biggest announcements from my perspective was the launch of Oracle AI Database 26ai, which ties into Oracle’s vision for AI-driven data management. The official press release provides full details here, but the highlight came during the keynote by Juan Loaiza (Executive Vice President, Oracle Database Technologies), titled “The ‘AI for Data’ Revolution is Here – How to Survive and Thrive”. In his session, Juan outlined how this new release integrates advanced AI capabilities directly into the database, is architected for mission-critical workloads, and includes dozens more major new AI capabilities. I’ve shared my thoughts and key takeaways from his keynote in a dedicated blog post, which you can read here.

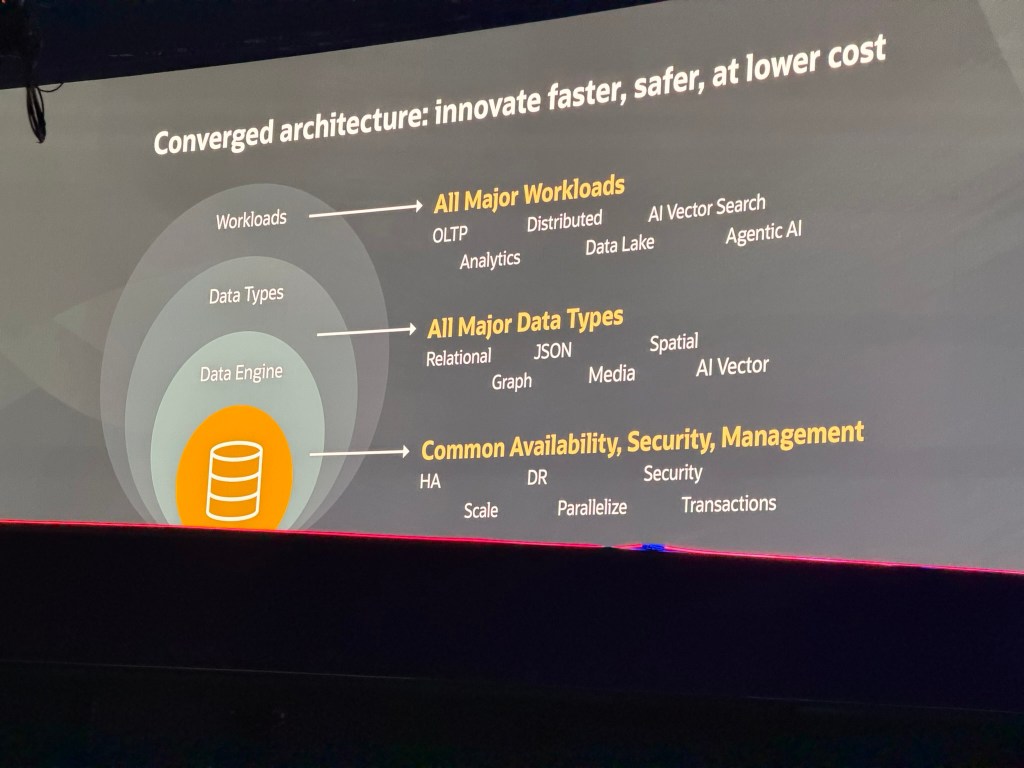

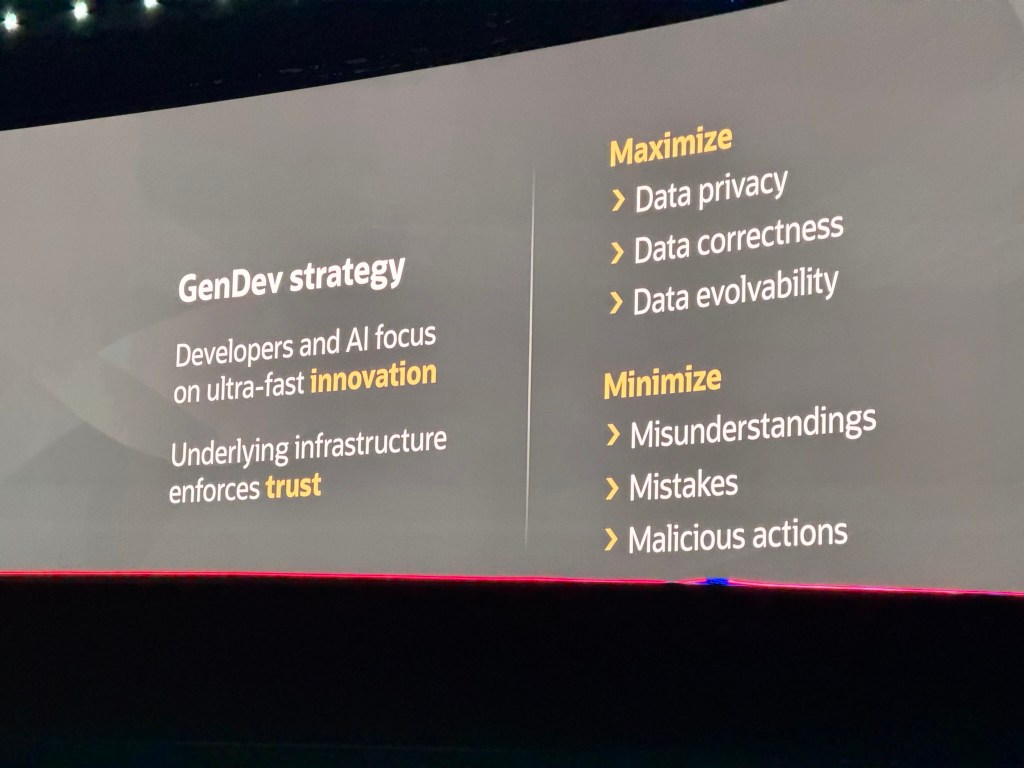

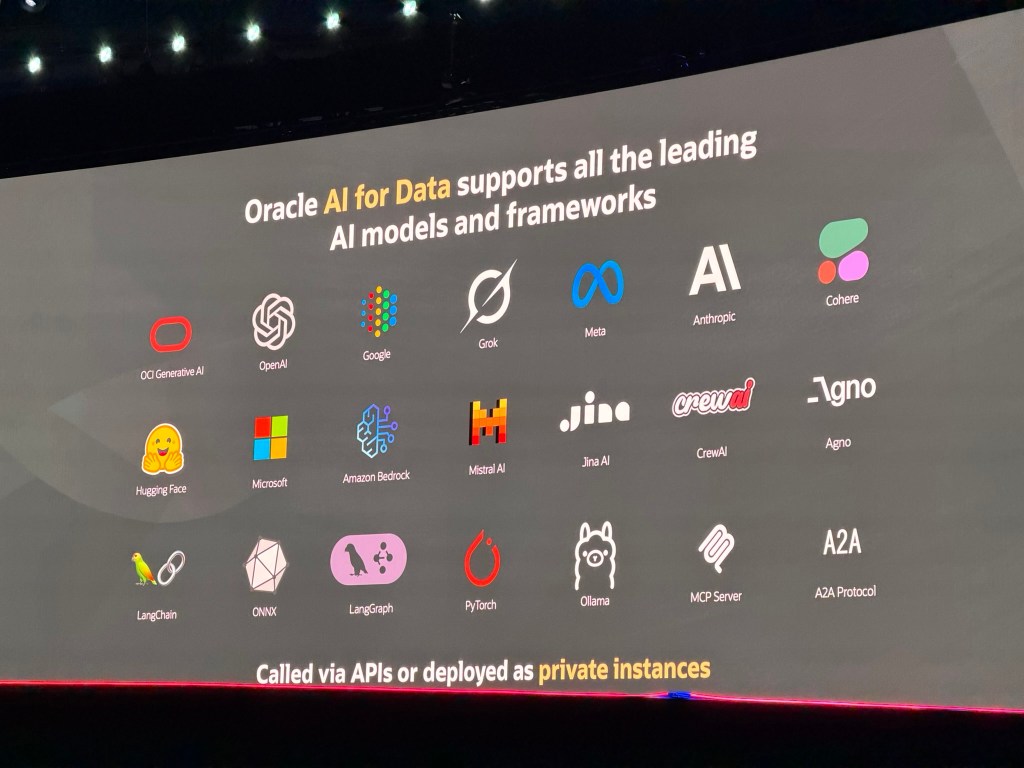

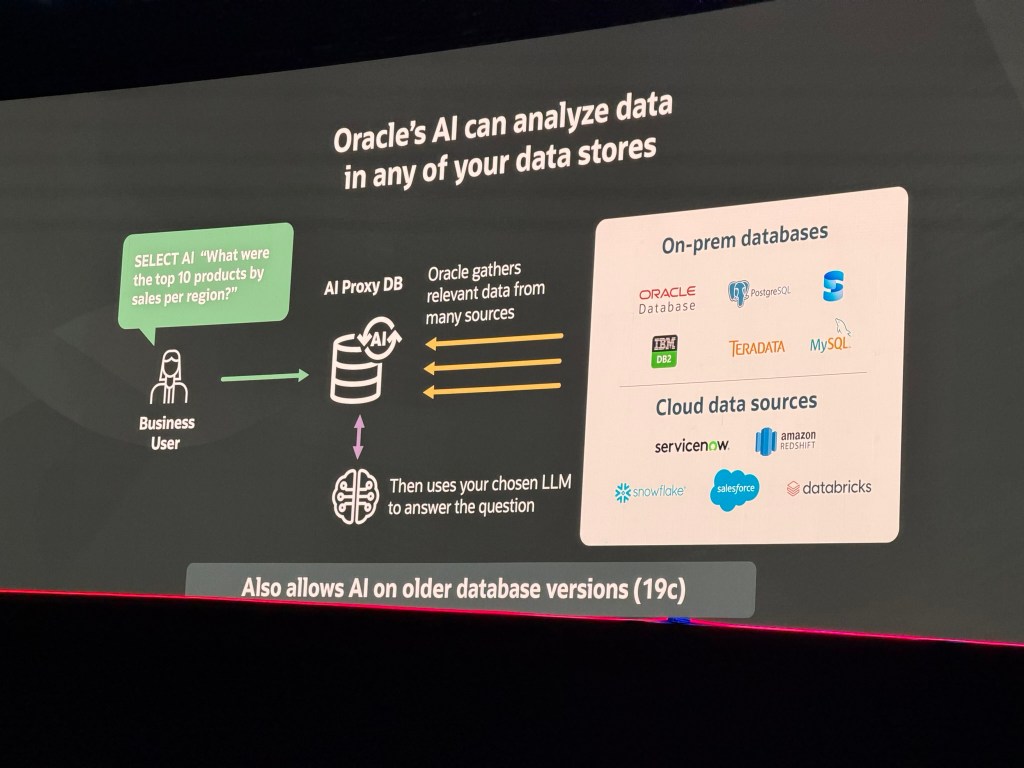

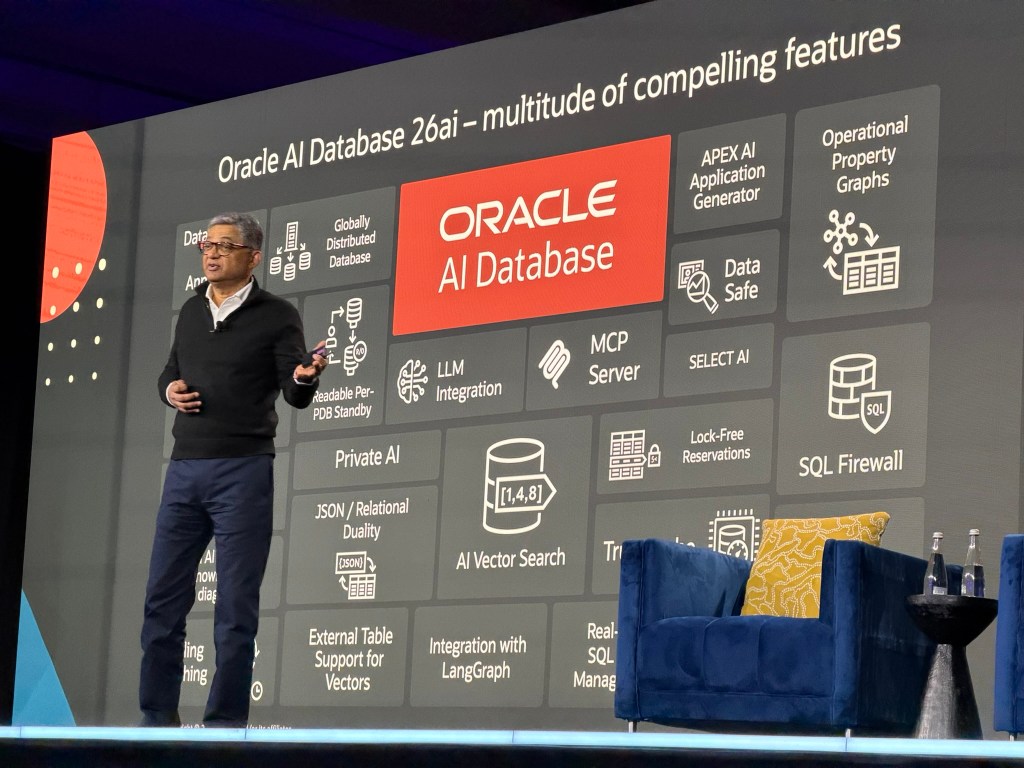

Hasan Rizvi (EVP, Database Engineering, Oracle) also spoke about Oracle AI Database 26ai in his session “Oracle AI Database Directions: Innovate Faster, Scale Smarter and Minimize Risk”:

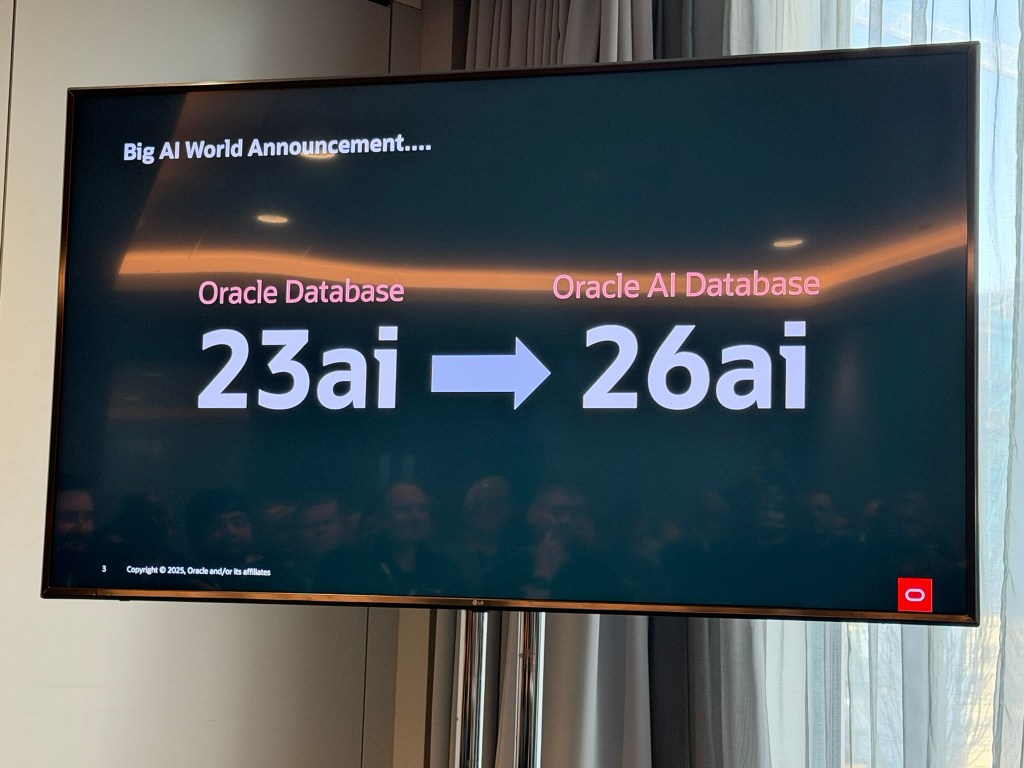

Is Oracle AI Database 26ai a new release

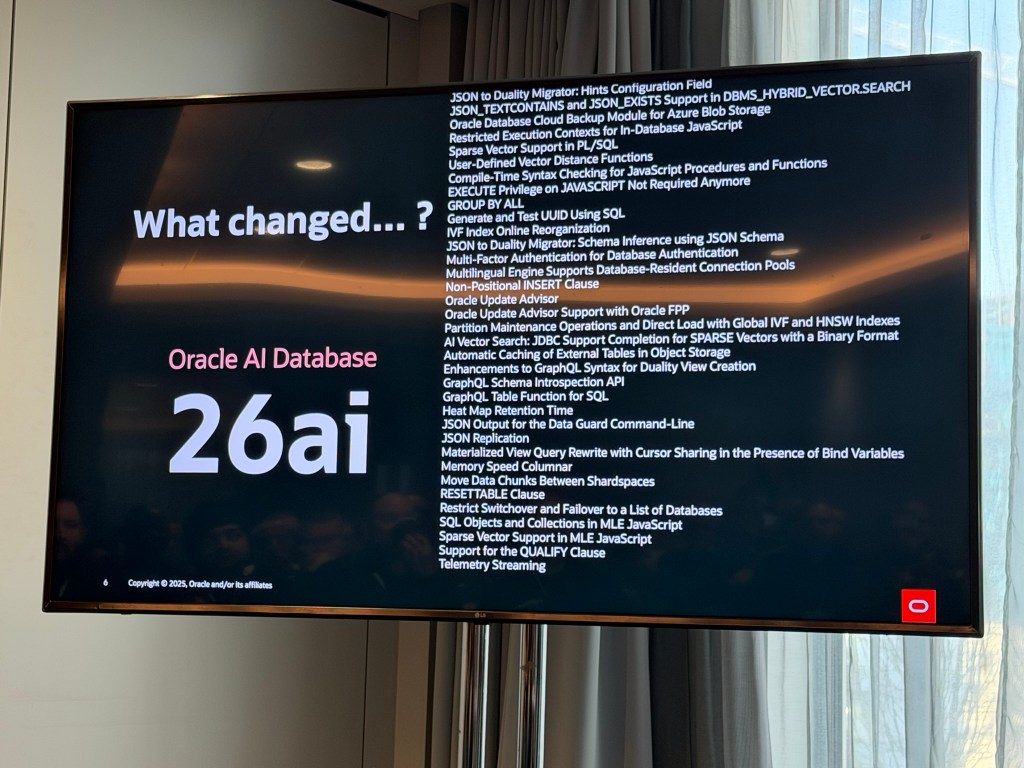

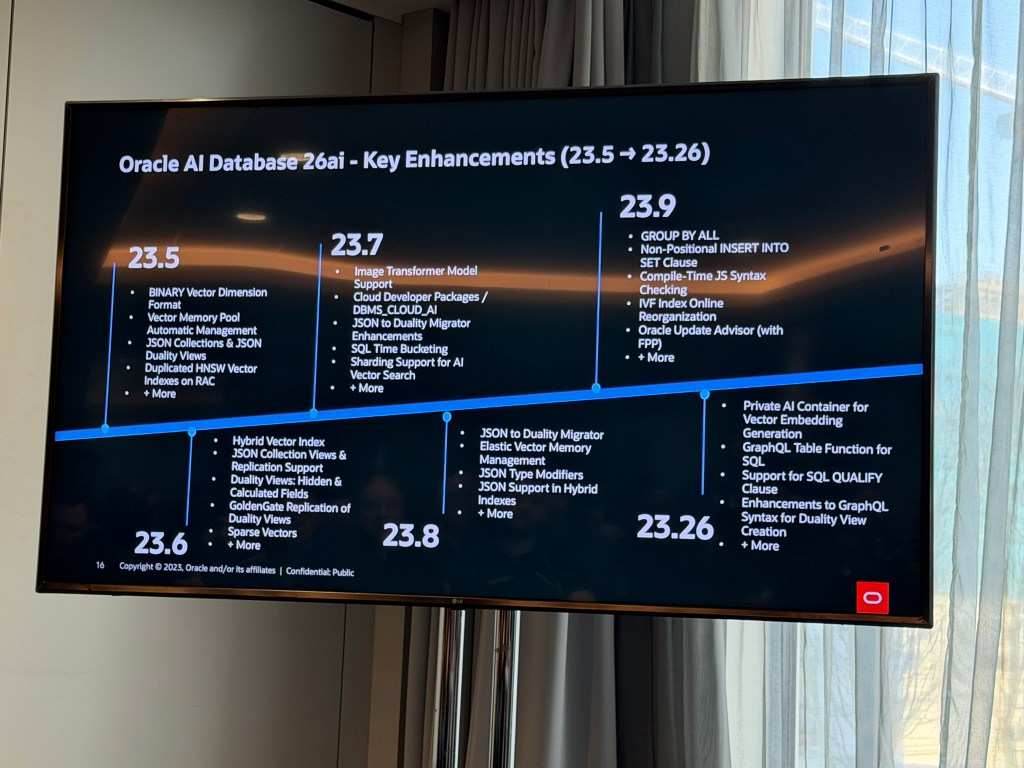

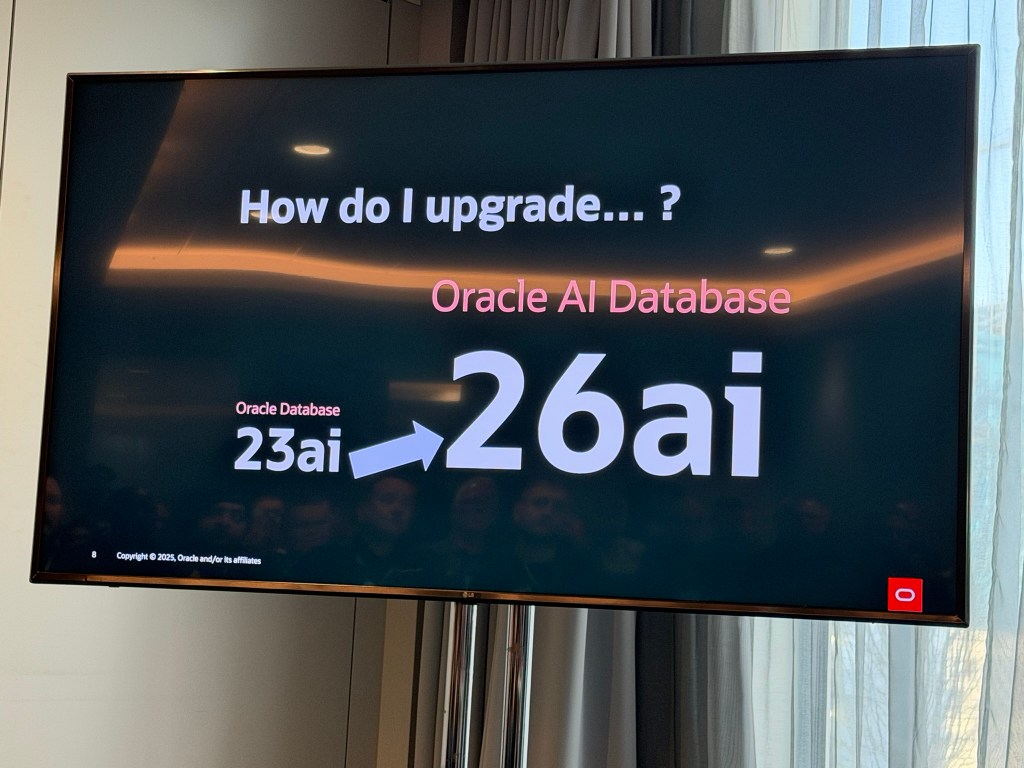

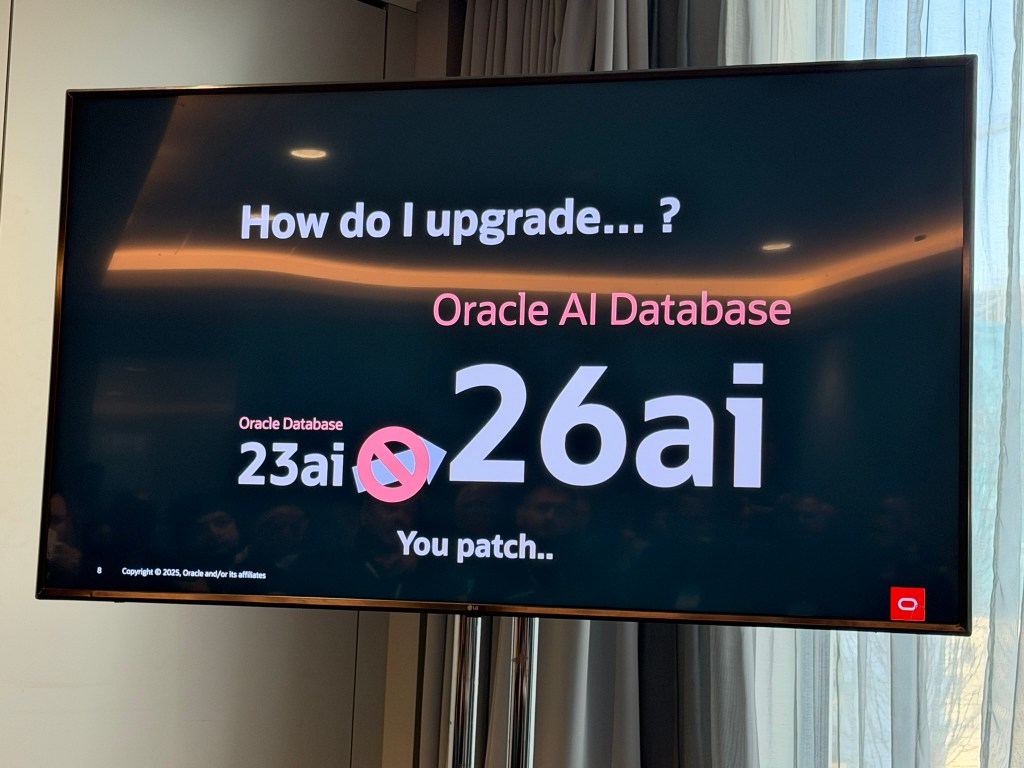

Interestingly, under the hood, Oracle AI Database 26ai is essentially 23ai, but it has been rebranded as 26ai. Why has Oracle done this? Because Oracle has packed so many new features and enhancements into this release that they consider it a major version upgrade. In fairness, this strategy works in their favour, as you’ll see later on in this blog post 😎.

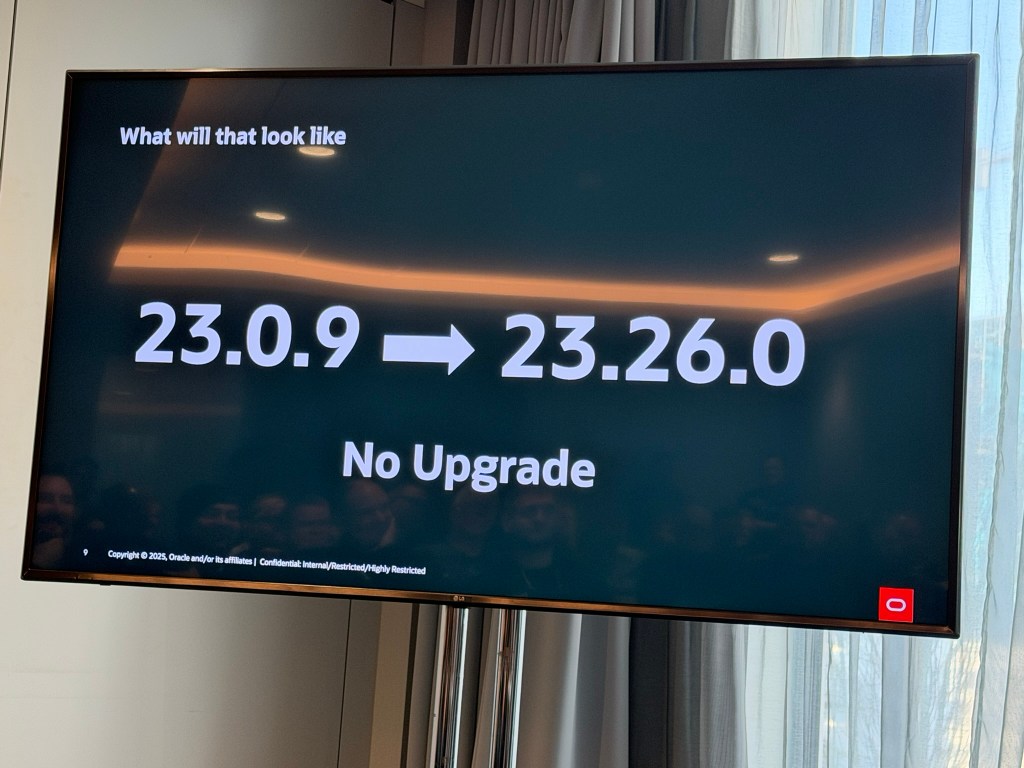

It’s important to note that Oracle AI Database 26ai is delivered as a Release Update (RU) rather than a full version upgrade. This means a database upgrade is not required if you are already on 23ai, making adoption simpler for existing customers. In fact, this RU effectively replaces RU 23.10, as illustrated below:

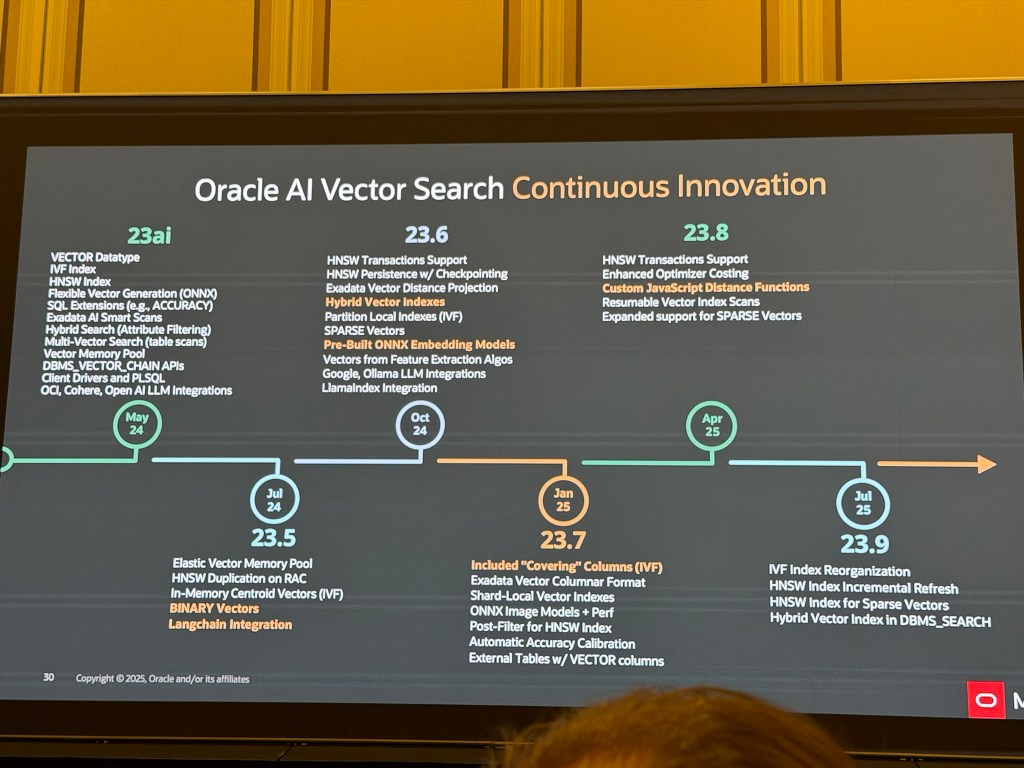

- 23.8 – Oracle Database 23ai (April 2025)

- 23.9 – Oracle Database 23ai (July 2025)

- 23.26.0 (replacing 23.10) – Oracle AI Database 26ai (October 2025)

- 23.26.1 – Oracle AI Database 26ai (January 2026)

- 23.26.2 – Oracle AI Database 26ai (April 2026)
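
The numbering above can be sketched as a small lookup. This is only an illustration of the list, not anything Oracle ships: the function name `product_name` is my own, and the rule that any 23.x RU numbered 26 or higher carries the 26ai name is an assumption drawn purely from the versions listed above.

```python
def product_name(version: str) -> str:
    """Map a 23.x Release Update version string to its product name.

    Assumption (from the list above only): RUs numbered 23.26 and later
    are branded Oracle AI Database 26ai; earlier 23.x RUs are 23ai.
    """
    parts = version.split(".")
    if len(parts) < 2:
        raise ValueError(f"expected at least major.ru, got {version!r}")

    major, ru = int(parts[0]), int(parts[1])
    if major != 23:
        raise ValueError(f"not a 23.x version: {version!r}")

    # The second component jumps from 9 straight to 26 with the rebrand.
    return "Oracle AI Database 26ai" if ru >= 26 else "Oracle Database 23ai"


if __name__ == "__main__":
    for v in ("23.8", "23.9", "23.26.0", "23.26.1", "23.26.2"):
        print(v, "->", product_name(v))
```

The point of the sketch is that the first component stays at 23 throughout, which is why moving from 23ai to 26ai is an RU rather than an upgrade.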

If you’re already on Oracle Database 23ai (blog post here), the transition to Oracle AI Database 26ai is straightforward: it’s simply a matter of applying the latest RU. For those on older versions, Oracle has made the upgrade path equally simple: you can move directly from 19c to Oracle AI Database 26ai, just as you would have upgraded to 23ai.

Oracle AI Database 26ai will effectively be the replacement long-term support release.

For more info, see the blog post by Mike Dietrich, Oracle Database Upgrade Product Manager, here.

Where is Oracle AI Database 26ai available

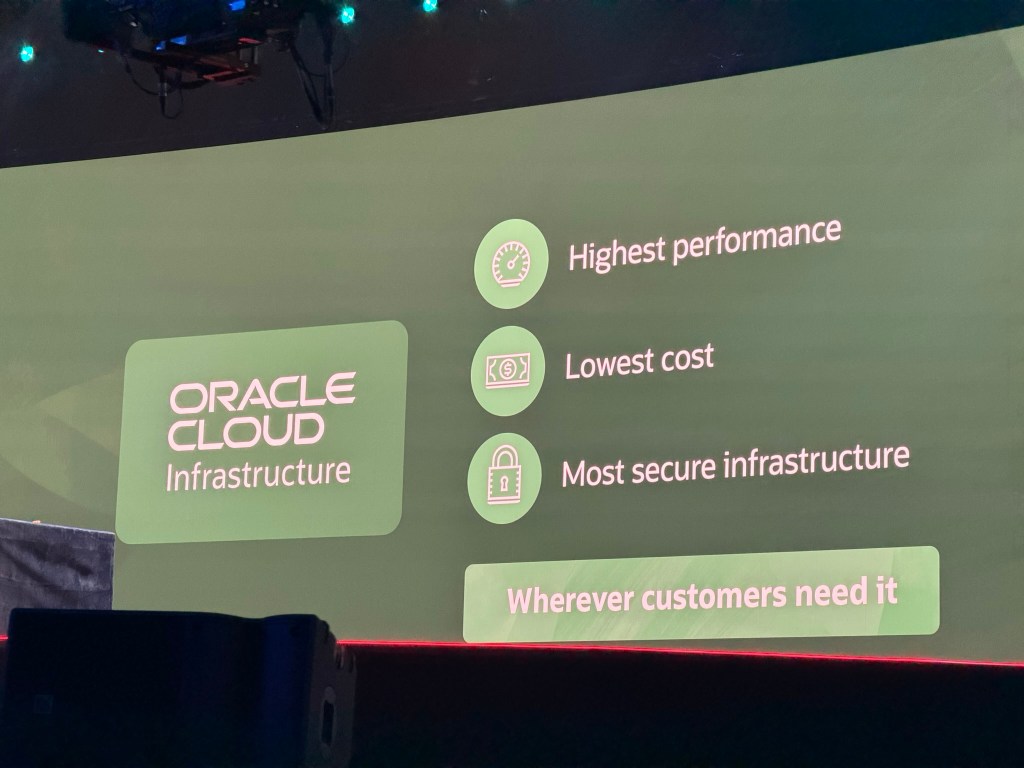

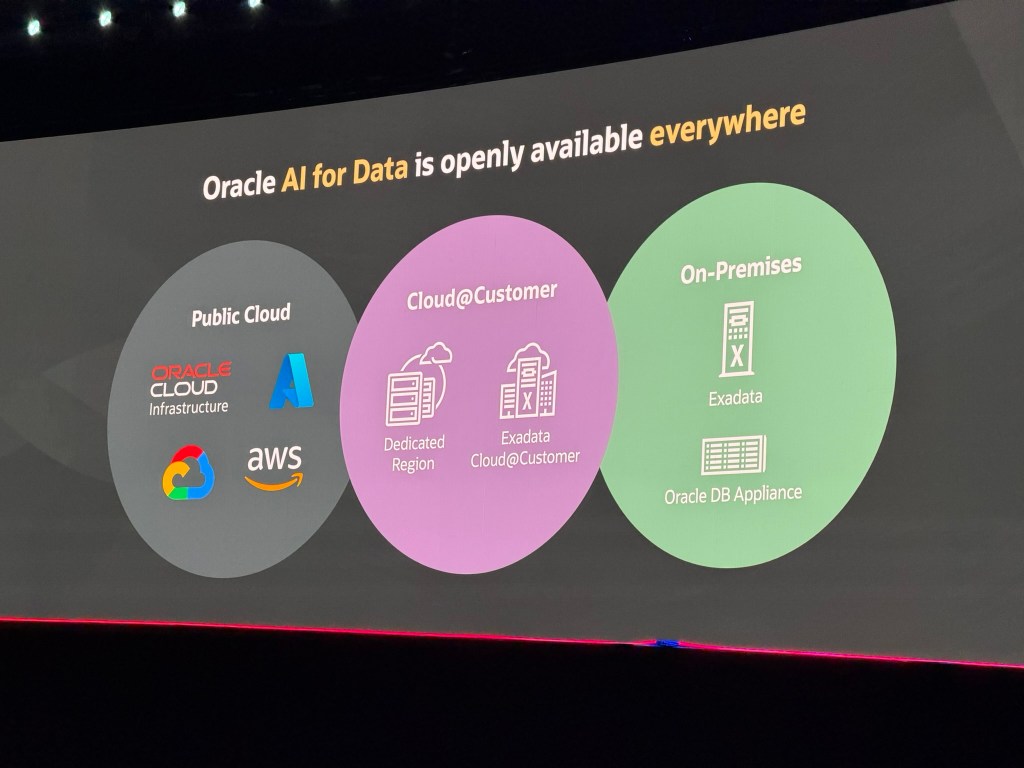

As with Oracle Database 23ai, at the time of announcement Oracle AI Database 26ai is available on:

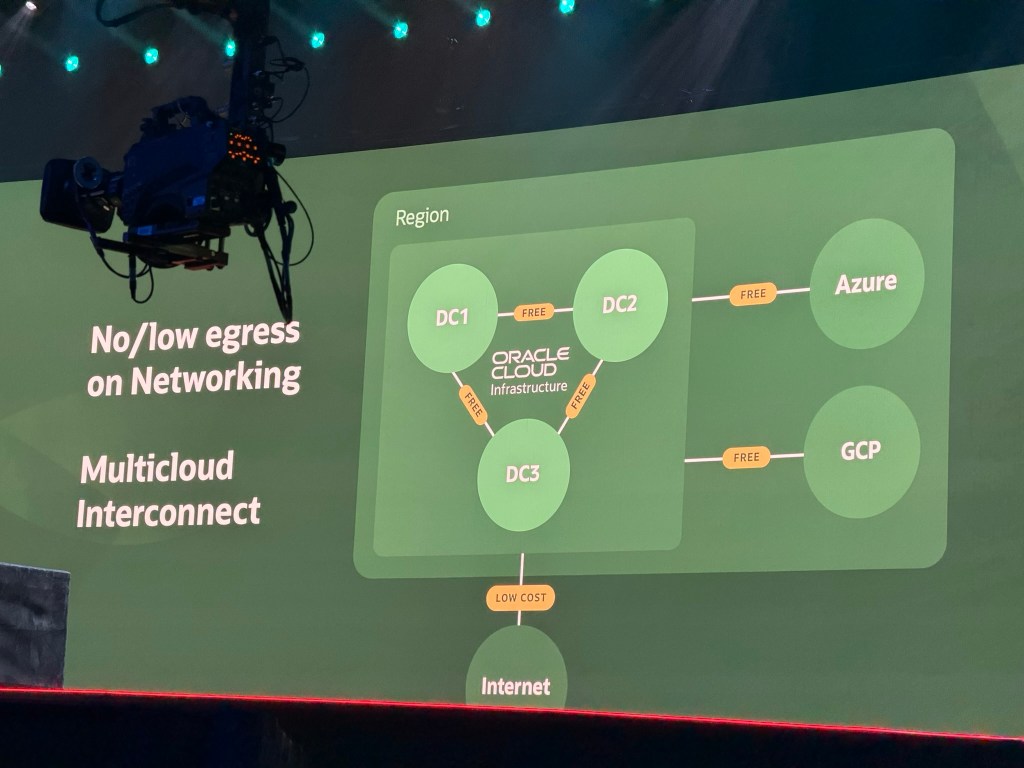

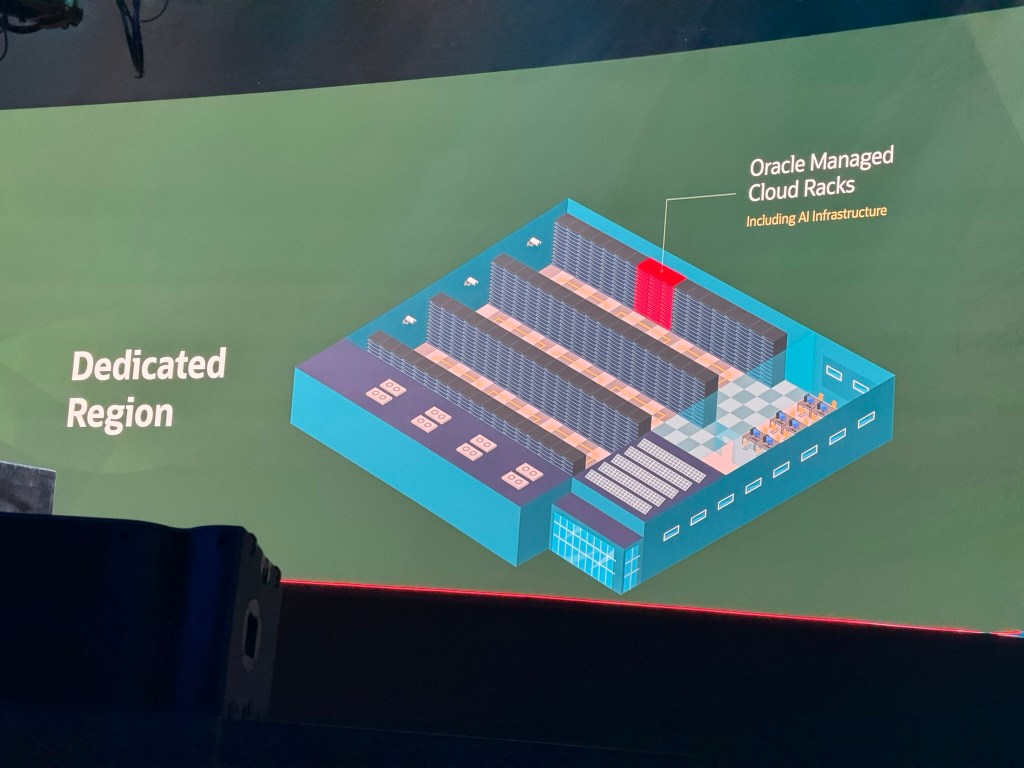

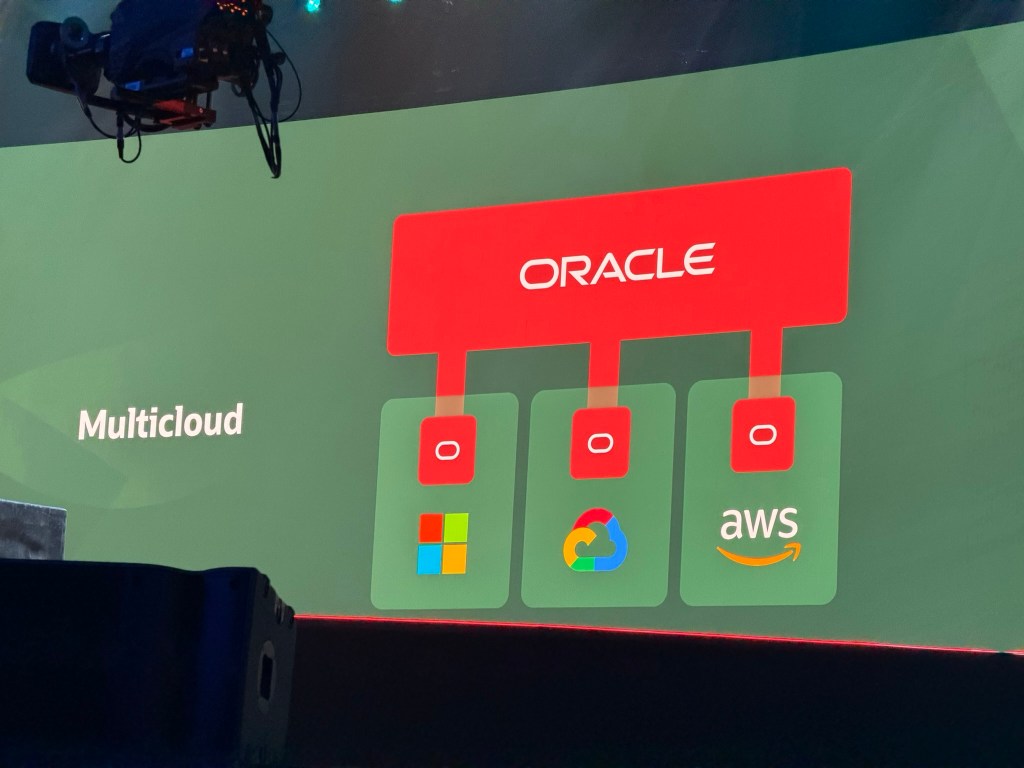

- Oracle Cloud: which includes OCI, Exadata Cloud@Customer, Dedicated Region, Oracle Database @ Azure | GCP | AWS

- Oracle Engineered Systems: Oracle Exadata, Oracle Database Appliance (ODA)

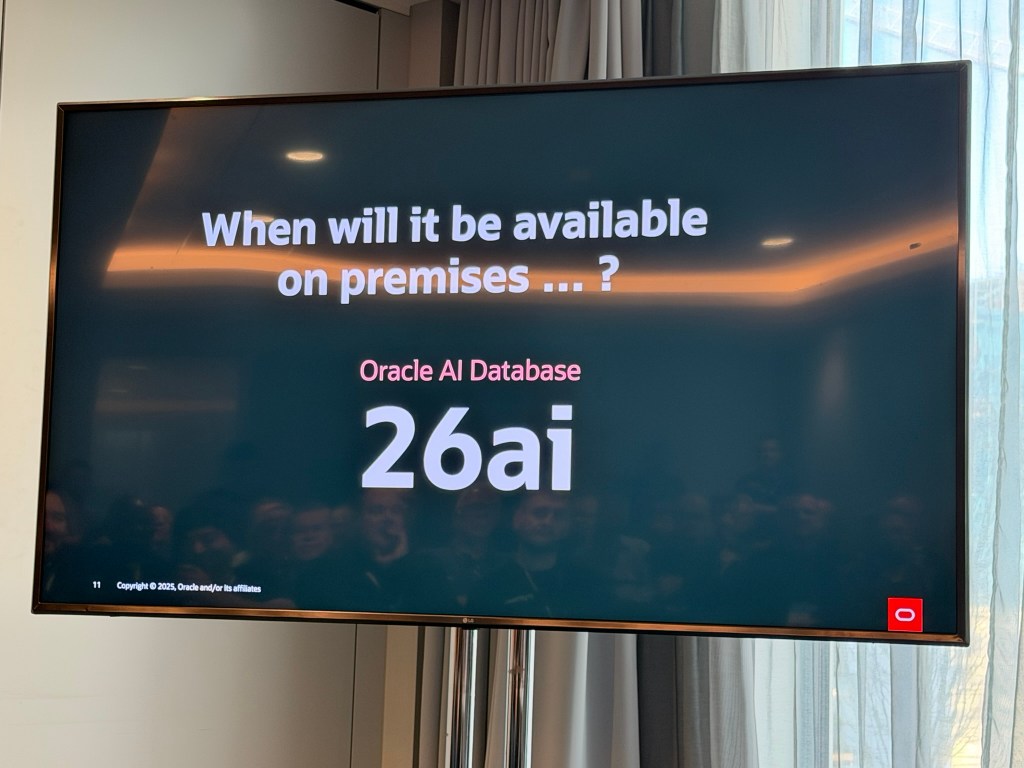

Will Oracle AI Database 26ai be available on-premises

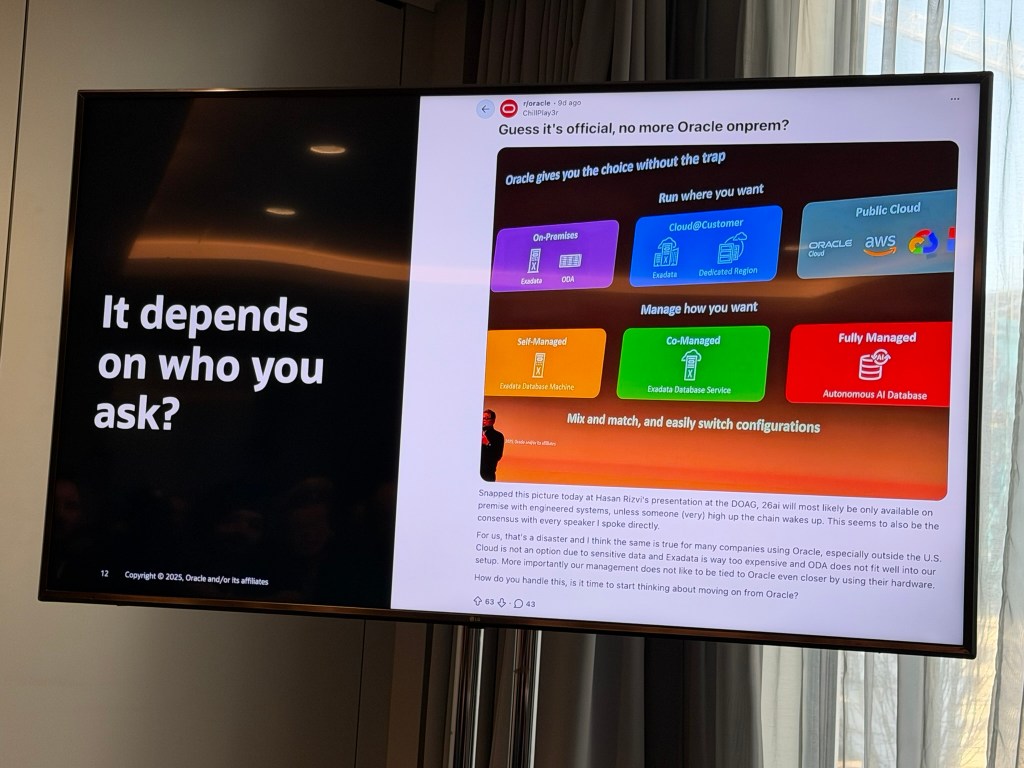

This is the question on so many minds! Hopefully, you heard it here first: at the UKOUG Discover 2025 conference in Birmingham, UK, Dom Giles, Vice President of Product Management at Oracle, confirmed that on-premises was never off the cards; it was just a matter of when. This reassurance is significant for customers who rely on on-premises infrastructure, and shows Oracle’s continued commitment to hybrid and flexible deployment models.

This effectively debunks the online speculation suggesting that Oracle would abandon on-premises deployments for its latest database release, which caused quite a stir!

Just to be absolutely clear: Dom confirmed that an on-premises version of Oracle AI Database 26ai will be available, but he categorically did not provide a release date! His slide reinforced this point by masking the date, meaning the timing is still to be announced.

This is purely my own speculation, but based on the naming convention of 26ai, I believe the on-premises release could arrive very soon 😁, potentially as early as January 2026! It seems logical that Linux would be the first on-premises platform to be available, given that it’s the standard for OCI and Engineered Systems, and was the environment offered during the beta programme. Other platforms would likely follow throughout 2026, completing the rollout and conforming to the new name 😎. Do you agree? Let me know your thoughts in the comments.

UPDATE: see next section for confirmed release date for on-premises 🥳

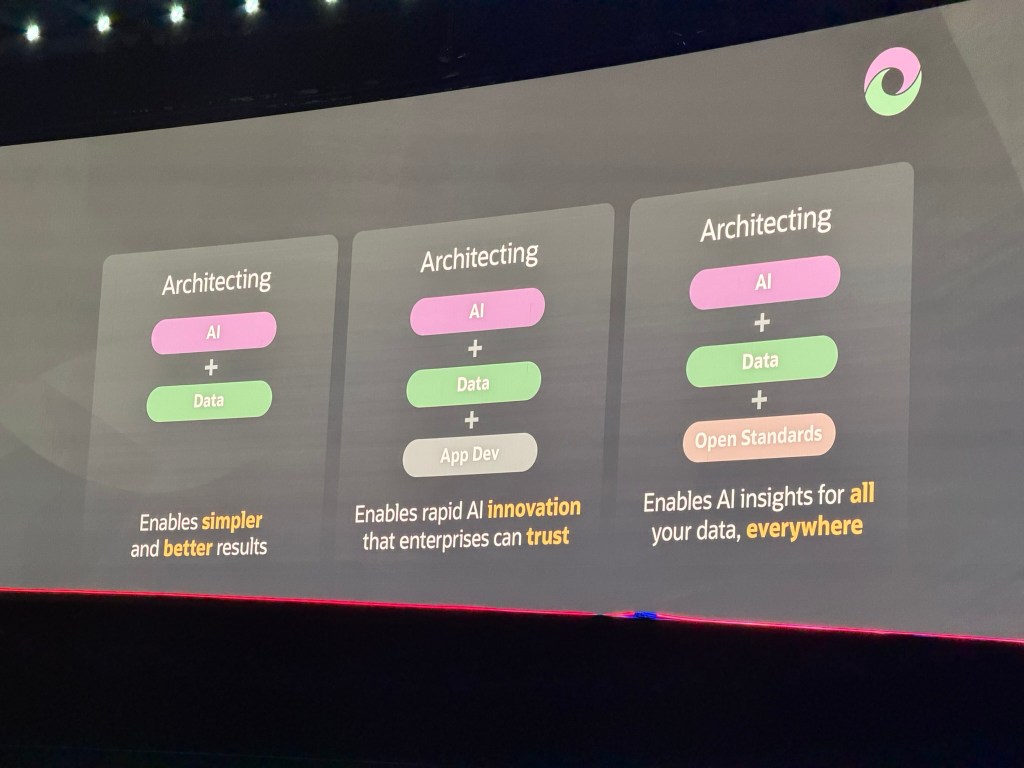

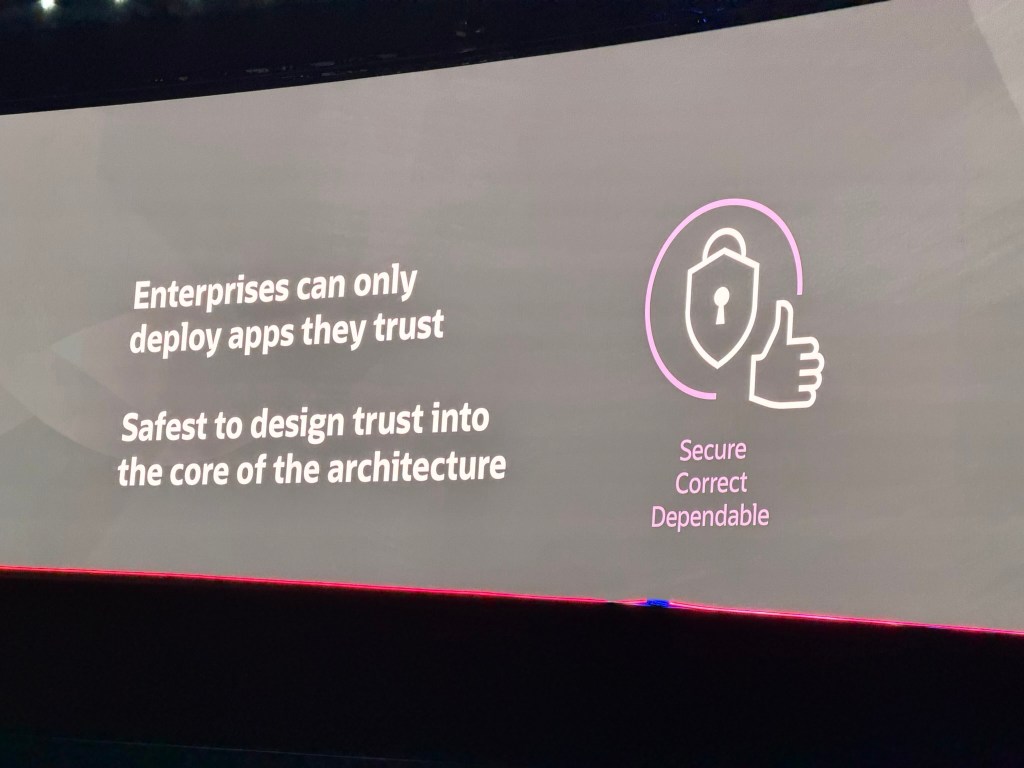

He concluded that Oracle AI Database 26ai is AI and data architected together, much as hardware and software are architected together for Exadata, and we know how well that went. Let’s hope this new release sees the same success, bringing AI to your data!

Update: On-Premises Release Date Confirmed

Only a matter of hours later, I saw the announcement: my speculation of January 2026 for on-premises x86-64 is now confirmed 🥳

See the blog post by Jenny Tsai-Smith (Senior Vice President, Product Management, Oracle) confirming the release date here.

If you found this blog post useful, please like as well as follow me through my various Social Media avenues available on the sidebar and/or subscribe to this oracle blog via WordPress/e-mail.

Thanks

Zed DBA (Zahid Anwar)