Tablespace management in an Oracle database is important and something a DBA will need to do quite frequently. Therefore I wrote a query, which I believe I originally got from the internet and then it evolved by me adding in temp tablespace and few other things 🙂

Query for Tablespace Usage

Query to obtain Tablespace Usage:

set pages 999

set lines 400

SELECT df.tablespace_name tablespace_name,

max(df.autoextensible) auto_ext,

round(df.maxbytes / (1024 * 1024), 2) max_ts_size,

round((df.bytes - sum(fs.bytes)) / (df.maxbytes) * 100, 2) max_ts_pct_used,

round(df.bytes / (1024 * 1024), 2) curr_ts_size,

round((df.bytes - sum(fs.bytes)) / (1024 * 1024), 2) used_ts_size,

round((df.bytes-sum(fs.bytes)) * 100 / df.bytes, 2) ts_pct_used,

round(sum(fs.bytes) / (1024 * 1024), 2) free_ts_size,

nvl(round(sum(fs.bytes) * 100 / df.bytes), 2) ts_pct_free

FROM dba_free_space fs,

(select tablespace_name,

sum(bytes) bytes,

sum(decode(maxbytes, 0, bytes, maxbytes)) maxbytes,

max(autoextensible) autoextensible

from dba_data_files

group by tablespace_name) df

WHERE fs.tablespace_name (+) = df.tablespace_name

GROUP BY df.tablespace_name, df.bytes, df.maxbytes

UNION ALL

SELECT df.tablespace_name tablespace_name,

max(df.autoextensible) auto_ext,

round(df.maxbytes / (1024 * 1024), 2) max_ts_size,

round((df.bytes - sum(fs.bytes)) / (df.maxbytes) * 100, 2) max_ts_pct_used,

round(df.bytes / (1024 * 1024), 2) curr_ts_size,

round((df.bytes - sum(fs.bytes)) / (1024 * 1024), 2) used_ts_size,

round((df.bytes-sum(fs.bytes)) * 100 / df.bytes, 2) ts_pct_used,

round(sum(fs.bytes) / (1024 * 1024), 2) free_ts_size,

nvl(round(sum(fs.bytes) * 100 / df.bytes), 2) ts_pct_free

FROM (select tablespace_name, bytes_used bytes

from V$temp_space_header

group by tablespace_name, bytes_free, bytes_used) fs,

(select tablespace_name,

sum(bytes) bytes,

sum(decode(maxbytes, 0, bytes, maxbytes)) maxbytes,

max(autoextensible) autoextensible

from dba_temp_files

group by tablespace_name) df

WHERE fs.tablespace_name (+) = df.tablespace_name

GROUP BY df.tablespace_name, df.bytes, df.maxbytes

ORDER BY 4 DESC;

Output from Tablespace Usage Query

This is the output you will get from the query:

TABLESPACE_NAME AUT MAX_TS_SIZE MAX_TS_PCT_USED CURR_TS_SIZE USED_TS_SIZE TS_PCT_USED FREE_TS_SIZE TS_PCT_FREE --------------- --- ----------- --------------- ------------ ------------ ----------- ------------ ----------- VERS_DATA YES 6061895 85.81 5270187 5201788.31 98.7 68398.69 1 VERS_INDX YES 2391991.98 85.55 2101084.98 2046384.98 97.4 54700 3 SYSAUX YES 45055.98 73.43 43788 33084.44 75.56 10703.56 24 UNDOTBS1 YES 32767.98 53.95 30565 17678.44 57.84 12886.56 42 VERS_INDEX YES 32767 6.95 3072 2278.88 74.18 793.13 26 TEMP YES 1245165.69 4.72 134141 58728 43.78 75413 56 SYSTEM YES 32767.98 2.69 890 882.19 99.12 7.81 1 UNDOTBS2 YES 32767.98 .2 20555 64.38 .31 20490.63 100 SNP_DATA YES 32767 0 1024 1 .1 1023 100 USERS YES 32767.98 0 5 1.38 27.5 3.63 73 10 rows selected.

Column definitions

TABLESPACE_NAME: This is the Tablespace Name.

AUTO_EXT: If the datafiles are ‘Auto Extendable’ or not.

Please Note: This is using a max function, so if all are ‘NO’, then the ‘NO’ is true for all datafiles, however if one is ‘YES’, then the ‘YES’ is possible for one through to all of the datafiles.

MAX_TS_SIZE: This is the maximum Tablespace Size if all the datafile reach their max size.

MAX_TS_PCT_USED: This is the percent of MAX_TS_SIZE reached and is the most important value in the query, as this reflects the true usage before DBA intervention is required.

CURR_TS_SIZE: This is the current size of the Tablespace.

USED_TS_SIZE: This is how much of the CURR_TS_SIZE is used.

TS_PCT_USED: This is the percent of CURR_TS_SIZE which if ‘Auto Extendable’ is on, is a little meaningless. Use MAX_TS_PCT_USED for actual usage.

FREE_TS_SIZE: This is how much is free in CURR_TS_SIZE.

TS_PCT_FREE: This is how much is free in CURR_TS_SIZE as a percent.

Please Note: All sizes are in Megabytes, this can be changed to Gigabytes by added a ‘/1024’ to the columns.

Related Blog Posts

Adding a datafile to tablespace

Adding a Datafile to Temp Tablespace

If you found this blog post useful, please like as well as follow me through my various Social Media avenues available on the sidebar and/or subscribe to this oracle blog via WordPress/e-mail.

Thanks

Zed DBA (Zahid Anwar)

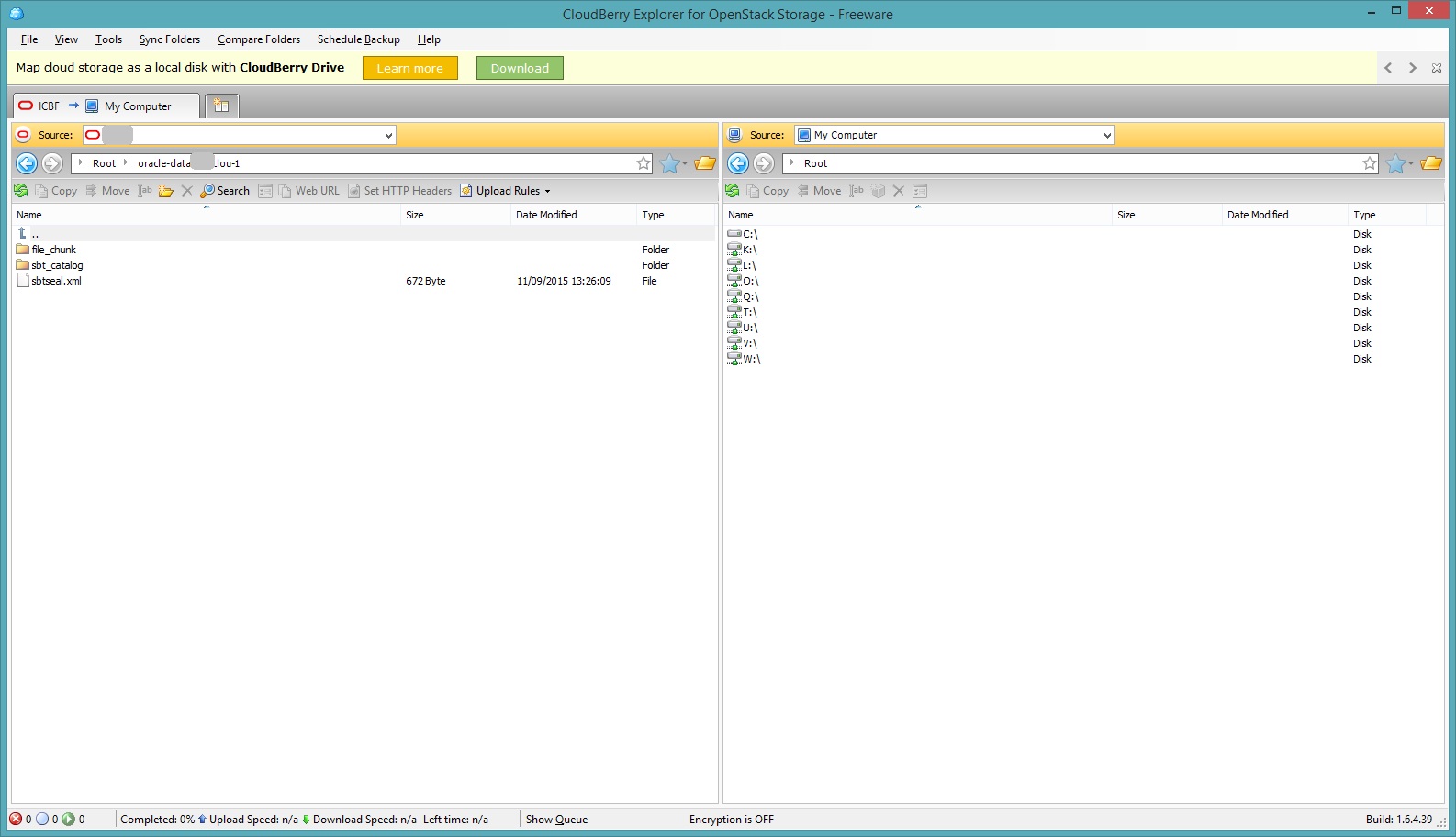

Now your freebie is expiring and you decide you want to retain the service but now as metered, so you want to delete all the unnecessary files, which in my case are old Oracle database backups.

Now your freebie is expiring and you decide you want to retain the service but now as metered, so you want to delete all the unnecessary files, which in my case are old Oracle database backups.